最新要闻

- 当前信息:美国男子承认掰断兵马俑手指并偷走 最严只用服刑2年

- 速递!20个环球飞车同时炸场!小刀电动车通过暴力测试:比杂技还好看

- 谷歌亚马逊提供丰厚补偿鼓励离职:悬赏一年薪水

- 当前速看:女子看手机下楼梯踩空导致脸着地 经检查脸部多处擦伤

- 【全球热闻】海尔卖成2022全球第一家电品牌!韩国不愿意了:声称LG才是老大

- 视点!锂价跌破25万“生死线”,锂矿停产挺价?宜春锂业三巨头澄清,传闻不靠谱!

- 每日简讯:FIFA国家队排名:国足下降至81位 美加墨世界杯出线有希望

- 还有谁!比亚迪ATTO 3连续5个月蝉联以色列全系单车销冠

- 环球热点!网友点赞小米之家:线下免费送父母智能手机使用指南 还教用微信

- 全球快资讯丨苹果虚拟现实头显设备即将到来!果粉不感兴趣

- 【天天报资讯】不妙!国内油价预计大涨0.4元/升 4月17日起调整

- 每日聚焦:为节约开支 英国打算用驳船“装”移民

- 每日看点!首搭长城Hi4电四驱 哈弗枭龙MAX官图发布:博主直言价格合理必卖爆

- 大疆Mavic 3 Pro无人机曝光:升级三枚摄镜头 奔着2万元去了

- 上百人进山挖黄金引网友围观!官方回应:挖的是昆虫 被称“土黄金”

- 世界即时看!马斯克74岁超模妈妈参观特斯拉上海超级工厂:夸赞制造完美、质量过硬

广告

手机

iphone11大小尺寸是多少?苹果iPhone11和iPhone13的区别是什么?

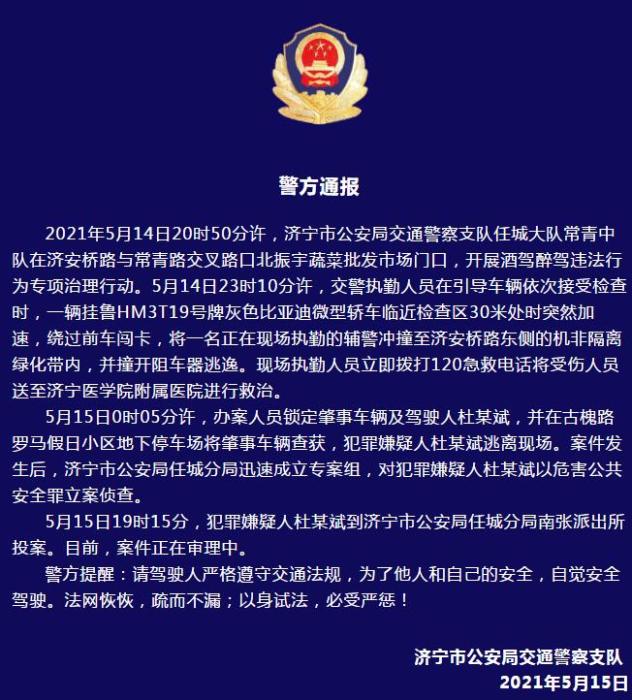

警方通报辅警执法直播中被撞飞:犯罪嫌疑人已投案

- iphone11大小尺寸是多少?苹果iPhone11和iPhone13的区别是什么?

- 警方通报辅警执法直播中被撞飞:犯罪嫌疑人已投案

- 男子被关545天申国赔:获赔18万多 驳回精神抚慰金

- 3天内26名本土感染者,辽宁确诊人数已超安徽

- 广西柳州一男子因纠纷杀害三人后自首

- 洱海坠机4名机组人员被批准为烈士 数千干部群众悼念

家电

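Online Store Crawler with a Crawl Record: 11STREET as an Example

Overall approach

Step 1: Crawl the links of all listing pages.

Step 2: For each listing page, get the total number of products and the number of pages.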

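Step 3: Page through each listing page and scrape the product prices.

Step 4: When a listing page is done, write out its product data and record the progress of this crawl in a local file.

Final step: Merge the product data crawled from all listing pages.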
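Steps 3 and 4 rely on a crawl record that stores how many pages of each listing page are already done, so an interrupted run can pick up where it stopped. Below is a minimal sketch of that checkpoint-and-resume idea, assuming a JSON file in place of the article's Excel record and saving the scraped rows together with the page count. `crawl_listing`, `load_progress`, `save_progress` and the `fetch_page` callback are hypothetical names; the 250-page cap and 50-page interval are taken from the Step 3 code.

```python
import json
import os
import tempfile


def load_progress(path):
    """Return {listing_id: {"finished": n, "rows": [...]}}; empty if no record exists yet."""
    if not os.path.exists(path):
        return {}
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def save_progress(path, record):
    """Write the record atomically: dump to a temp file, then rename it over the old one."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w", encoding="utf-8") as f:
        json.dump(record, f)
    os.replace(tmp_path, path)


def crawl_listing(listing_id, total_pages, fetch_page, record_path, every=50, max_pages=250):
    """Hypothetical sketch: crawl pages [finished, last) and checkpoint every `every` pages.

    fetch_page(i) is an assumed callback returning the rows of 0-based page i.
    """
    record = load_progress(record_path)
    state = record.get(listing_id, {"finished": 0, "rows": []})
    last = min(total_pages, max_pages)
    for i in range(state["finished"], last):
        state["rows"].extend(fetch_page(i))
        # Save rows and page count in one write so they never disagree
        if i == last - 1 or (i + 1) % every == 0:
            state["finished"] = i + 1
            record[listing_id] = state
            save_progress(record_path, record)
    return state["rows"]
```

Because rows and the page count are written together, a crash only costs the pages fetched since the last checkpoint; they are fetched again on the next run.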

Step 1

Get the category information from the target site:

https://global.11st.co.kr/glb/

The categories are loaded dynamically, so selenium + chromedriver is needed to render the page.
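Step 1 turns the three-level category menu into a flat table with one row per level-3 category, each row carrying the names and URLs of its level-1 and level-2 parents. A minimal sketch of that flattening on plain nested dicts; `flatten_categories`, the `name`/`url`/`children` keys and any sample data are hypothetical stand-ins for the parsed menu, not the site's real markup.

```python
def flatten_categories(tree, brand="global.11st.co", site="kr"):
    """Hypothetical sketch: one row per level-3 category, with its level-1/level-2 ancestors.

    `tree` is assumed to be a list of {"name", "url", "children"} dicts, three levels deep.
    """
    rows = []
    for one in tree:
        for two in one.get("children", []):
            for three in two.get("children", []):
                rows.append({
                    "brand": brand,
                    "site": site,
                    "one_cate_name": one["name"],
                    "one_cate_url": one["url"],
                    "two_cate_name": two["name"],
                    "two_cate_url": two["url"],
                    "three_cate_name": three["name"],
                    "three_cate_url": three["url"],
                })
    return rows
```

A level-1 or level-2 category without children yields no rows, which matches the nested loops of the Step 1 code.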

The code is as follows:

    import math
    import os
    import random
    import re
    import time

    import numpy as np
    import pandas as pd
    import requests
    from bs4 import BeautifulSoup
    from selenium import webdriver
    from selenium.webdriver.chrome.service import Service

    # Path to chromedriver
    CHROME_DRIVER_PATH = "/Users/xxxx/Downloads/chromedriver"


    # Load a dynamically rendered page with Selenium and parse it
    def get_dynamic_html(site_url):
        print("Loading dynamic page", site_url)
        chrome_options = webdriver.ChromeOptions()
        # Disable the sandbox
        chrome_options.add_argument("--no-sandbox")
        chrome_options.add_argument("--disable-dev-shm-usage")
        # Uncomment to run headless
        # chrome_options.add_argument("--headless")
        chrome_options.add_argument("--disable-gpu")
        chrome_options.add_argument("--ignore-ssl-errors")
        # Selenium 4 takes the driver path via Service and the options via options=;
        # recent releases no longer accept executable_path= or chrome_options=
        driver = webdriver.Chrome(service=Service(CHROME_DRIVER_PATH), options=chrome_options)
        driver.set_page_load_timeout(100)
        try:
            driver.get(site_url)
        except Exception as e:
            # Past the timeout, stop loading and keep whatever has rendered
            driver.execute_script("window.stop()")
            print(e, "dynamic page load timed out")
        soup = BeautifulSoup(driver.page_source, "html.parser")
        try:
            driver.quit()
        except Exception:
            pass
        return soup


    # Collect the links of all level-3 categories (the listing pages) and save them to Excel
    def get_page_url_list(cate_path):
        cate_url_list = []
        print("Start crawling")
        page_url = "https://global.11st.co.kr/glb/en/browsing/Category.tmall?method=getCategory2Depth&dispCtgrNo=1001819#"
        soup = get_dynamic_html(page_url)
        # Level-1 categories
        for one_cate_ul in soup.select("#lnbMenu > ul > li"):
            one_cate_name = one_cate_ul.select("a")[0].text
            one_cate_url = one_cate_ul.select("a")[0].attrs["href"]
            # Level-2 categories
            for two_cate_ul in one_cate_ul.select("ul.list_category > li"):
                two_cate_name = two_cate_ul.select("a")[0].text
                two_cate_url = two_cate_ul.select("a")[0].attrs["href"]
                # Level-3 categories: one output row per leaf
                for three_cate_ul in two_cate_ul.select("li .list_sub_cate > li"):
                    three_cate_name = three_cate_ul.select("a")[0].text
                    three_cate_url = three_cate_ul.select("a")[0].attrs["href"]
                    cate_url_list.append({
                        "brand": "global.11st.co",
                        "site": "kr",
                        "one_cate_name": one_cate_name,
                        "one_cate_url": one_cate_url,
                        "two_cate_name": two_cate_name,
                        "two_cate_url": two_cate_url,
                        "three_cate_name": three_cate_name,
                        "three_cate_url": three_cate_url,
                    })
        cate_url_df = pd.DataFrame(cate_url_list)
        cate_url_df.to_excel(cate_path, index=False)


    if __name__ == "__main__":
        # Where to store the listing-page links
        cate_excel_path = "/Users/xxxx/Downloads/11st_kr_page_list.xlsx"
        get_page_url_list(cate_excel_path)

Step 2

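As shown in the figure, each listing page displays its total number of products. Since each page shows 40 products, the total number of pages can be calculated from that count.

Based on the file produced in Step 1, compute the number of pages for each listing page.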
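The page count is the item count divided by the page size, rounded up: for example, 1,234 items at 40 per page give math.ceil(1234 / 40) = 31 pages. A minimal sketch of that calculation, reusing the parenthesised-count regex from the Step 2 code; `total_pages` is a hypothetical helper and the sample tab texts in the test are made up.

```python
import math
import re

# Same pattern as the Step 2 code: a count in parentheses, e.g. "(1,234)"
COUNT_PATTERN = re.compile(r"\(([0-9 ,]+)\)")


def total_pages(tab_text, per_page=40):
    """Hypothetical helper: return (item_count, page_count) parsed from tab text.

    Returns (None, 0) when no usable parenthesised count is present.
    """
    match = COUNT_PATTERN.search(tab_text)
    if match is None:
        return None, 0
    digits = match.group(1).replace(",", "").replace(" ", "")
    if not digits:
        return None, 0
    items = int(digits)
    # Round up: a partly filled last page still counts as a page
    return items, math.ceil(items / per_page)
```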

    # Imports are the same as in Step 1.
    # get_static_html() is defined in the Step 3 code; define it before running this step.


    # For each level-3 category page, read the total product count and compute the page count
    def account_page_num(cate_path, record_path):
        out_page_list = []
        page_list_df = pd.read_excel(cate_path)
        # Matches a count in parentheses such as "(1,234)"
        num_pattern = re.compile(r"\(([0-9 ,]+)\)")
        for index, row in page_list_df.iterrows():
            print(index, row["three_cate_name"])
            page_item = {
                "brand": row["brand"],
                "site": row["site"],
                "one_cate_name": row["one_cate_name"],
                "two_cate_name": row["two_cate_name"],
                "two_cate_url": row["two_cate_url"],
                "three_cate_name": row["three_cate_name"],
                "three_cate_url": row["three_cate_url"],
                "total_item_num": "not found tag",
                "total_page_num": 0,
                "per_page_num": 40,
                # Pages already crawled; Step 3 reads and updates this column to resume
                "finsh_page_num": 0,
            }
            soup = get_static_html(page_item["three_cate_url"])
            total_num_tag_list = soup.select("ul.categ > li.active")
            if total_num_tag_list:
                tag_text = total_num_tag_list[0].text
                num_arr = num_pattern.findall(tag_text)
                if num_arr:
                    page_item["total_item_num"] = int(num_arr[0].replace(",", "").replace(" ", ""))
                    page_item["total_page_num"] = math.ceil(page_item["total_item_num"] / page_item["per_page_num"])
                else:
                    page_item["total_item_num"] = f"text error:{tag_text}"
            print(page_item)
            out_page_list.append(page_item)
        record_url_df = pd.DataFrame(out_page_list)
        record_url_df.to_excel(record_path, index=False)


    if __name__ == "__main__":
        date_str = "2023-04-06"
        # Listing-page links produced by Step 1
        cate_excel_path = "/Users/xxxx/Downloads/11st_kr_page_list.xlsx"
        # Crawl record: stores how many pages have been crawled, so a run that fails
        # midway can resume instead of starting over
        crawl_record_path = f"/Users/xxxx/Downloads/11st_kr_page_reocrd_{date_str}.xlsx"
        account_page_num(cate_excel_path, crawl_record_path)

Steps 3 and 4

The code is as follows:

    # Imports are the same as in Step 1.


    # Fetch a static page with requests, using a randomly chosen User-Agent
    def get_static_html(site_url):
        print("Loading page", site_url)
        headers_list = [
            "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/67.0.3396.79 Safari/537.36",
            "Mozilla/5.0 (Windows NT 6.1; WOW64; rv:34.0) Gecko/20100101 Firefox/34.0",
            "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.57.2 (KHTML, like Gecko) Version/5.1.7 Safari/534.57.2",
            "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/68.0.3440.106 Safari/537.36",
        ]
        headers = {
            # random.choice picks each User-Agent with equal probability
            "user-agent": random.choice(headers_list),
            "Connection": "keep-alive",
        }
        try:
            resp = requests.get(site_url, headers=headers, timeout=30)
        except Exception as inst:
            print(inst)
            # Retry once without certificate verification (e.g. after an SSL error)
            requests.packages.urllib3.disable_warnings()
            resp = requests.get(site_url, headers=headers, verify=False, timeout=30)
        return BeautifulSoup(resp.text, "html.parser")


    # Write a DataFrame to Excel without turning URL strings into hyperlinks
    def df_to_excel_without_urls(obj_df, out_path):
        # pandas >= 1.3 passes xlsxwriter options through engine_kwargs
        with pd.ExcelWriter(out_path, engine="xlsxwriter",
                            engine_kwargs={"options": {"strings_to_urls": False}}) as content_writer:
            obj_df.to_excel(content_writer, index=False)


    # Crawl all pages of one listing page, saving data and progress as it goes.
    # crawl_tmp_dir and crawl_record_path are globals set in the __main__ block below.
    def info_from_page_list(index, page_item):
        # Maximum number of pages crawled per listing page
        max_limit = 250
        # Output file for this listing page
        three_cate_name = page_item["three_cate_name"].strip().replace(" ", "&").replace("/", "&")
        now_out_path = f"{crawl_tmp_dir}/{index}_{three_cate_name}.xlsx"
        total_page_num = min(int(page_item["total_page_num"]), max_limit)
        finsh_page_num = int(page_item["finsh_page_num"])
        print(finsh_page_num, total_page_num)
        if finsh_page_num >= total_page_num:
            print(f"{index} {page_item['three_cate_name']} already finished")
            return
        if finsh_page_num > 0 and os.path.exists(now_out_path):
            # Resume: reload the rows saved by the previous run as dicts (keeps column names)
            out_goods_list = pd.read_excel(now_out_path).to_dict("records")
        else:
            # Start from scratch
            out_goods_list = []
        # Extracts the numeric product id from a product URL
        id_pattern = re.compile(r"products/([0-9]+)")
        for i in range(finsh_page_num, total_page_num):
            # The part after "#" is a URL fragment, which requests does not send to the
            # server; check how the site actually paginates before relying on this URL
            page_url = f"{page_item['three_cate_url']}#pageNum%%{i + 1}"
            soup = get_static_html(page_url)
            for goods_tag in soup.select("ul.tt_listbox > li"):
                href_tag = goods_tag.select_one(".photo_wrap > a")
                desc_tag = goods_tag.select_one(".list_info > .info_tit")
                price_tag = goods_tag.select_one(".list_price .dlr")
                # Skip items that lack any of the expected elements
                if href_tag is None or desc_tag is None or price_tag is None:
                    continue
                # page_item is a pandas Series from iterrows(); copy it into a plain dict
                info_item = page_item.to_dict()
                info_item["href"] = href_tag.attrs.get("href", "")
                info_item["product_id"] = ""
                info_item["desc"] = desc_tag.text
                info_item["price_kr"] = int(price_tag.attrs["data-finalprice"])
                # Fixed KRW to USD rate hard-coded by the author; update it before use
                info_item["price_us"] = round(info_item["price_kr"] * 0.0007959, 2)
                if info_item["href"]:
                    id_arr = id_pattern.findall(info_item["href"])
                    if id_arr:
                        info_item["product_id"] = id_arr[0]
                out_goods_list.append(info_item)
            # Save after the first page, every 50 pages, and after the last page
            if i == total_page_num - 1 or i % 50 == 0:
                print("Saving")
                # Save the product data collected so far
                df_to_excel_without_urls(pd.DataFrame(out_goods_list), now_out_path)
                print("Updating record")
                # Record how many pages of this listing page are done
                crawl_record_df = pd.read_excel(crawl_record_path)
                crawl_record_df.loc[index, "finsh_page_num"] = i + 1
                print(crawl_record_df.loc[index, "finsh_page_num"])
                df_to_excel_without_urls(crawl_record_df, crawl_record_path)


    if __name__ == "__main__":
        date_str = "2023-04-06"
        # Crawl record for this run (produced by Step 2)
        crawl_record_path = f"/Users/xxx/Downloads/11st_kr_page_reocrd_{date_str}.xlsx"
        # Directory for the temporary per-listing product files
        crawl_tmp_dir = f"/Users/xxx/Downloads/11st_kr_page_reocrd_{date_str}"
        os.makedirs(crawl_tmp_dir, exist_ok=True)
        crawl_record_df = pd.read_excel(crawl_record_path)
        for index, row in crawl_record_df.iterrows():
            info_from_page_list(index, row)

Final step

Merge the Excel files of product data stored temporarily in crawl_tmp_dir.
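The article gives no code for this step. A minimal sketch, assuming pandas with openpyxl installed for reading `.xlsx`, the `{index}_{name}.xlsx` file names produced by the Step 3 code, and that duplicate products should be dropped by `product_id`. `listing_files`, `dedupe_rows` and `merge_goods_files` are hypothetical names.

```python
import glob
import os


def listing_files(paths):
    """Keep files named like "12_Laptops.xlsx" and sort them by the numeric prefix (12)."""
    def prefix(p):
        return os.path.basename(p).split("_", 1)[0]
    return sorted((p for p in paths if prefix(p).isdigit()), key=lambda p: int(prefix(p)))


def dedupe_rows(rows, key="product_id"):
    """Keep the first row per product id; rows with an empty or NaN id are all kept."""
    seen = set()
    out = []
    for row in rows:
        value = row.get(key)
        # value != value is True only for NaN, which is how Excel blanks come back
        if value is None or value == "" or value != value:
            out.append(row)
        elif value not in seen:
            seen.add(value)
            out.append(row)
    return out


def merge_goods_files(tmp_dir, out_path):
    """Hypothetical sketch: concatenate the per-listing Excel files into one file."""
    # Imported here so the two helpers above also work without pandas installed
    import pandas as pd

    rows = []
    for path in listing_files(glob.glob(os.path.join(tmp_dir, "*.xlsx"))):
        rows.extend(pd.read_excel(path).to_dict("records"))
    merged = pd.DataFrame(dedupe_rows(rows))
    merged.to_excel(out_path, index=False)
    return len(merged)
```

A custom `dedupe_rows` is used instead of `DataFrame.drop_duplicates(subset="product_id")` because the latter treats missing values as equal and would collapse every row without a `product_id` into one. Writing `out_path` outside `crawl_tmp_dir` keeps the merged file from being picked up on a rerun, although `listing_files` already skips names without a numeric prefix.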
