最新要闻

- 环球热点评!5年前就已杀青 周星驰《美人鱼2》进入后期制作

- 谨慎升级 等了2个月的AMD新驱动疑似翻车:系统崩了

- 世界热议:央广网:“暴雪式”傲慢引众怒 或终将致其失去中国市场

- 要闻速递:中国移动:加速千兆网络全面普及 建成全球最大规模光网络

- 中国快递卷了15年:死死困住了一个50岁的老快递员

- 纽约黄金期货周三收跌1.1% 创五周来最低收盘价

- 1998年属虎的人2012年运程

- 今日聚焦!qq情侣网名 〈談情》**/|(說爰)ⅱ,

- 80年代的零食大全怀旧辣条_80年代的零食大全怀旧

- 世界微速讯:2023年春运收官:40天发送旅客近16亿人次 大涨50%

- 香港2022年暴力罪案同比下降7.9%

- 观天下!探险的好处辩论赛,正方_探险的好处

- 全球播报:AMD RX 7600S游戏本显卡首测:远不如RTX 3060!高端弃疗了

- 世界速讯:10道恋爱送命题灵魂拷问ChatGPT:它的回答让我陷入沉思

- 马斯克向往每周只工作80小时!网友:驴都不敢这么用

- 每日速读!中国内地特供!Intel i5-13490F处理器图赏

手机

iphone11大小尺寸是多少?苹果iPhone11和iPhone13的区别是什么?

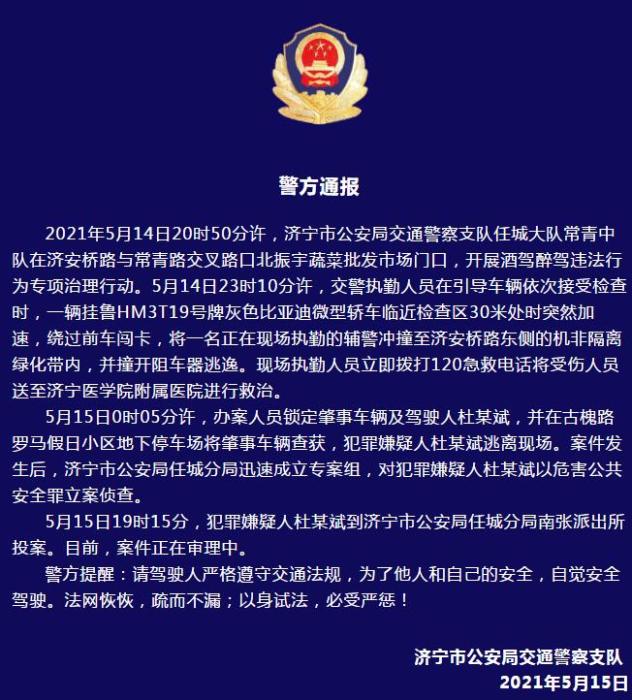

警方通报辅警执法直播中被撞飞:犯罪嫌疑人已投案

- iphone11大小尺寸是多少?苹果iPhone11和iPhone13的区别是什么?

- 警方通报辅警执法直播中被撞飞:犯罪嫌疑人已投案

- 男子被关545天申国赔:获赔18万多 驳回精神抚慰金

- 3天内26名本土感染者,辽宁确诊人数已超安徽

- 广西柳州一男子因纠纷杀害三人后自首

- 洱海坠机4名机组人员被批准为烈士 数千干部群众悼念

家电

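Installing a Highly Available K8S Cluster on CentOS 7.9 (Three Masters, Three Workers)

Server plan: all servers have 4 cores and 4 GB of RAM; their hostnames and IP addresses are the ones written to /etc/hosts in a later step.

Once the servers are ready, upgrade the operating system kernel on every node from 3.10 to 5.4+ (needed by HAProxy, Keepalived, Ceph storage and others). The steps are as follows:

    # Import the GPG key of ELRepo, the yum repository used for the kernel upgrade
    rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
    # Enable the ELRepo yum repository
    rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm
    # List the kernel versions currently available for upgrade
    yum --disablerepo="*" --enablerepo="elrepo-kernel" list available
    # Install the long-term support kernel (5.4.231-1.el7.elrepo in this example)
    yum --enablerepo=elrepo-kernel install kernel-lt -y
    # Make the newly installed kernel the GRUB default (back up first, then edit)
    cp /etc/default/grub /etc/default/grub.bak
    vi /etc/default/grub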

The original contents of /etc/default/grub are:

    GRUB_TIMEOUT=5
    GRUB_DISTRIBUTOR="$(sed 's, release .*$,,g' /etc/system-release)"
    GRUB_DEFAULT=saved
    GRUB_DISABLE_SUBMENU=true
    GRUB_TERMINAL_OUTPUT="console"
    GRUB_CMDLINE_LINUX="crashkernel=auto rd.lvm.lv=centos_jumpserver/root rd.lvm.lv=centos_jumpserver/swap rhgb quiet"
    GRUB_DISABLE_RECOVERY="true"

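Change the value of GRUB_DEFAULT from saved to 0 (that is, GRUB_DEFAULT=0), save and exit, then run the following commands:

    # Regenerate the boot loader configuration; this command reads /etc/default/grub
    grub2-mkconfig -o /boot/grub2/grub.cfg
    # Reboot the system
    reboot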
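The manual `vi` edit of `GRUB_DEFAULT` can also be scripted, which may help when the same change has to be repeated on all six servers. The following is only a sketch and not part of the original procedure: the function name `set_grub_default` is hypothetical, and it assumes GNU `sed` as shipped with CentOS 7.

```shell
#!/bin/sh
# Hypothetical helper: set GRUB_DEFAULT to the given entry (default 0)
# in a grub defaults file, keeping a .bak copy next to it.
set_grub_default() {
    file=$1
    entry=${2:-0}
    cp "$file" "$file.bak"
    # Replace the whole GRUB_DEFAULT line, whatever its current value is.
    sed -i "s/^GRUB_DEFAULT=.*/GRUB_DEFAULT=$entry/" "$file"
}

# Example (run as root on each server, before grub2-mkconfig):
#   set_grub_default /etc/default/grub 0
```

Because the function only rewrites the one `GRUB_DEFAULT` line, the rest of the file stays untouched and the backup allows an easy rollback.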

After the reboot, check the current operating system and kernel versions:

    [root@master1 ~]# cat /etc/redhat-release
    CentOS Linux release 7.9.2009 (Core)
    [root@master1 ~]# uname -rs
    Linux 5.4.231-1.el7.elrepo.x86_64

Point the CentOS-Base.repo yum repository at the Aliyun mirror to speed up later component downloads:

    # Install wget first
    yum install -y wget
    # Back up CentOS-Base.repo
    mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.bak
    # Recreate CentOS-Base.repo from the Aliyun mirror
    wget -O /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo
    # Build the yum cache
    yum makecache

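Turn off the firewall on all servers. This is a shortcut for cases where the ports required by some components (the Dashboard, for example) are not well known; in production it is better to keep the firewall on and open only the ports listed in the official documentation of K8S and the related components:

    # Stop the firewall for the current session
    systemctl stop firewalld
    # Disable it permanently so it does not come back after a reboot
    systemctl disable firewalld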

Edit /etc/hosts on all servers to map hostnames to IP addresses:

    cat >> /etc/hosts << EOF
    192.168.17.3 master1
    192.168.17.4 master2
    192.168.17.5 master3
    192.168.17.11 node1
    192.168.17.12 node2
    192.168.17.13 node3
    192.168.17.200 lb # VIP (virtual IP) used later by Keepalived; for a non-HA cluster this can be the IP of master1
    EOF
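Since `cat >>` appends unconditionally, running the step twice leaves duplicate entries in /etc/hosts. A minimal sketch of an idempotent alternative follows; the helper name `add_host_entry` is hypothetical and the approach is an illustration, not what the original guide does.

```shell
#!/bin/sh
# Hypothetical helper: append "IP NAME" to a hosts file only if NAME
# is not already mapped there, so the step can be re-run safely.
add_host_entry() {
    ip=$1
    name=$2
    file=${3:-/etc/hosts}
    # Look for NAME as a whole field after the IP column, ignoring
    # comment lines and anything after a trailing "#" comment.
    if awk -v n="$name" '
        $1 !~ /^#/ {
            for (i = 2; i <= NF; i++) {
                if ($i ~ /^#/) break
                if ($i == n) found = 1
            }
        }
        END { exit !found }' "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Example:
#   add_host_entry 192.168.17.3 master1
#   add_host_entry 192.168.17.200 lb
```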

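Set up time synchronization on all servers (K8S needs a common time reference for some cluster state decisions, such as node liveness):

    # Install the time synchronization tool
    yum install -y ntpdate
    # Align the current time on every server
    ntpdate time.windows.com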

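Switch SELinux out of enforcing mode (K8S support for SELinux is not yet very mature). Note that the commands below set SELinux to permissive rather than fully disabling it:

    # Switch SELinux to permissive for the current session
    setenforce 0
    # Make permissive mode permanent
    sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config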

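Turn off swap (swap can defeat the memory QoS policies of K8S, which in turn affects Pod eviction under memory pressure):

    # Turn swap off for the current session
    swapoff -a
    # Turn swap off permanently by commenting out the swap entries in /etc/fstab
    sed -ri 's/.*swap.*/#&/' /etc/fstab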
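The pattern `.*swap.*` comments out every line that merely contains the word "swap", including lines that are already commented. As a hedged alternative, the sketch below only touches active entries whose filesystem type column is `swap`. The function name `disable_swap_entries` is hypothetical, and GNU `sed` is assumed.

```shell
#!/bin/sh
# Hypothetical helper: comment out active swap entries in an
# fstab-style file. A line is changed only if it is not already a
# comment and its third whitespace-separated field is "swap".
disable_swap_entries() {
    file=$1
    sed -i -E '/^[[:space:]]*#/!s/^([^[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' "$file"
}

# Example (as root, after "swapoff -a"):
#   disable_swap_entries /etc/fstab
```

Running it a second time leaves the file unchanged, because the commented lines no longer match.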

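On every node, configure bridged IPv4 traffic to be passed to the iptables chains (kube-proxy, the component that implements K8S Services, uses iptables to forward traffic to the target Pods):

    # Create /etc/sysctl.d/k8s.conf
    cat > /etc/sysctl.d/k8s.conf << EOF
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    vm.swappiness = 0
    EOF
    # Create /etc/modules-load.d/k8s.conf so the modules are loaded at boot
    cat > /etc/modules-load.d/k8s.conf << EOF
    br_netfilter
    overlay
    EOF
    # Load br_netfilter (lets iptables rules work on Linux bridges, so bridged traffic reaches the iptables chains)
    # Without br_netfilter, Pods on different nodes can still communicate, but Pods on the same node cannot reach each other through a Service
    modprobe br_netfilter
    # Load the overlay module used for network virtualization
    modprobe overlay
    # Apply the sysctl settings (the net.bridge.* keys only exist once br_netfilter is loaded)
    sysctl --system
    # Verify that the modules are loaded
    lsmod | grep -e br_netfilter -e overlay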

K8S Services support two proxy modes, iptables and ipvs. The ipvs mode performs somewhat better, but the ipvs kernel modules have to be loaded manually:

    # Install ipset and ipvsadm
    yum install -y ipset ipvsadm
    # Write the modules to load into a script (on newer kernels nf_conntrack_ipv4 has been replaced by nf_conntrack)
    cat > /etc/sysconfig/modules/ipvs.modules << EOF
    #!/bin/bash
    modprobe -- ip_vs
    modprobe -- ip_vs_rr
    modprobe -- ip_vs_wrr
    modprobe -- ip_vs_sh
    modprobe -- nf_conntrack
    EOF
    # Make the script executable
    chmod +x /etc/sysconfig/modules/ipvs.modules
    # Run the script
    /bin/bash /etc/sysconfig/modules/ipvs.modules
    # Check that the modules from the script are loaded
    lsmod | grep -e ip_vs -e nf_conntrack
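The `grep` above shows what is loaded but leaves it to the reader to spot a missing module. A small sketch that lists only the missing ones may be easier to script; the function name `missing_modules` is hypothetical and it takes the text of `lsmod` as its first argument so that it can be tried without touching the kernel.

```shell
#!/bin/sh
# Hypothetical helper: print each required module that does not
# appear in the first column of lsmod-style text.
missing_modules() {
    loaded=$1
    shift
    for mod in "$@"; do
        if ! printf '%s\n' "$loaded" |
            awk -v m="$mod" '$1 == m { found = 1 } END { exit !found }'; then
            printf '%s\n' "$mod"
        fi
    done
}

# Example:
#   missing_modules "$(lsmod)" ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack
```

An empty result means every listed module is loaded. Matching on the first column avoids false positives from the "Used by" column.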

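On master1, generate an RSA key pair and copy the public key to the other nodes. This makes it easy to distribute shared configuration files later and to log in from master1 to the other nodes without a password for cluster administration:

    # Generate the RSA private and public keys; press Enter at every prompt
    ssh-keygen -t rsa
    # Copy the public key to each node (answer yes to every (yes/no) prompt, then enter the root password of that server)
    for i in master1 master2 master3 node1 node2 node3; do ssh-copy-id -i .ssh/id_rsa.pub $i; done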

    Are you sure you want to continue connecting (yes/no)? yes
    /usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed
    /usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys
    root@node3's password:

    Number of key(s) added: 1

    Now try logging into the machine, with:   "ssh 'node3'"
    and check to make sure that only the key(s) you wanted were added.

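Configure the resource limits on all nodes:

    # Raise the limits for the current session on all nodes
    ulimit -SHn 65536
    # Make the change permanent, starting on master1
    vi /etc/security/limits.conf
    # Append the following at the end of the file
    * soft nofile 65536
    * hard nofile 65536
    * soft nproc 4096
    * hard nproc 4096
    * soft memlock unlimited
    * hard memlock unlimited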

Then copy /etc/security/limits.conf from master1 to the other servers:

    scp /etc/security/limits.conf root@master2:/etc/security/limits.conf
    scp /etc/security/limits.conf root@master3:/etc/security/limits.conf
    scp /etc/security/limits.conf root@node3:/etc/security/limits.conf
    scp /etc/security/limits.conf root@node2:/etc/security/limits.conf
    scp /etc/security/limits.conf root@node1:/etc/security/limits.conf
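The same "edit on master1, then copy everywhere" pattern recurs throughout this guide, so a small loop can replace the repeated `scp` lines. This is a sketch under the assumption that passwordless SSH as root is already set up as described earlier; the function name `distribute_file` and the `DRY_RUN` variable are hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: copy one file to the same path on every listed
# node. With DRY_RUN=1 the scp commands are printed instead of run.
distribute_file() {
    file=$1
    shift
    for node in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            printf 'scp %s root@%s:%s\n' "$file" "$node" "$file"
        else
            # Stop at the first failure so a broken node is noticed.
            scp "$file" "root@$node:$file" || return 1
        fi
    done
}

# Example:
#   distribute_file /etc/security/limits.conf master2 master3 node1 node2 node3
```

Setting `DRY_RUN=1` first is a cheap way to review the commands before anything is overwritten on the remote nodes.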

Install Docker on all nodes. From v1.24 on, K8S recommends a CRI-compliant container runtime such as containerd or CRI-O; dockershim, which supported Docker as a container runtime, was removed from K8S in that release. To keep using Docker on those versions you need the cri-dockerd adapter, which adds an extra layer and is generally considered less efficient than using containerd or CRI-O directly:

    # Remove old Docker components
    yum remove -y docker*
    yum remove -y containerd*
    # Download the yum repository definition for docker-ce
    wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo
    # Install a specific Docker version (it must meet the Docker version requirements of the target K8S release)
    yum install -y docker-ce-19.03.15 docker-ce-cli-19.03.15 containerd.io
    # Configure Docker (USTC registry mirror, systemd cgroup driver, log rotation)
    mkdir -p /etc/docker
    sudo tee /etc/docker/daemon.json <<-'EOF'
    {
      "exec-opts": ["native.cgroupdriver=systemd"],
      "registry-mirrors": ["https://docker.mirrors.ustc.edu.cn"],
      "log-driver": "json-file",
      "log-opts": {"max-size": "500m", "max-file": "3"}
    }
    EOF
    # Start Docker now and enable it at boot
    systemctl enable docker --now

Configure a domestic mirror as the yum repository for the K8S components (the upstream repository is too slow from within China, so the Aliyun mirror is used here):

    cat > /etc/yum.repos.d/kubernetes.repo << EOF
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF

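On master1, master2 and master3, install kubeadm, kubectl and kubelet. Pick a version that is neither too new nor too old, otherwise many tools and applications built around K8S, such as KubeSphere and Rook, will not be compatible:

    yum install -y kubeadm-1.20.2 kubectl-1.20.2 kubelet-1.20.2 --disableexcludes=kubernetes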

On node1, node2 and node3, install kubeadm and kubelet:

    yum install -y kubeadm-1.20.2 kubelet-1.20.2 --disableexcludes=kubernetes

On all nodes, start kubelet now and enable it at boot:

    systemctl enable kubelet --now

On all nodes, align the cgroup driver used by kubelet with the one configured for Docker earlier; both are set to systemd:

    # Edit /etc/sysconfig/kubelet on master1 first
    vi /etc/sysconfig/kubelet
    # Use the systemd cgroup driver
    KUBELET_EXTRA_ARGS="--cgroup-driver=systemd"
    # Use the ipvs proxy mode for kube-proxy
    KUBE_PROXY_MODE="ipvs"

Then copy /etc/sysconfig/kubelet from master1 to the other nodes:

    scp /etc/sysconfig/kubelet root@master2:/etc/sysconfig/kubelet
    scp /etc/sysconfig/kubelet root@master3:/etc/sysconfig/kubelet
    scp /etc/sysconfig/kubelet root@node3:/etc/sysconfig/kubelet
    scp /etc/sysconfig/kubelet root@node2:/etc/sysconfig/kubelet
    scp /etc/sysconfig/kubelet root@node1:/etc/sysconfig/kubelet

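On all nodes, start kubelet now and enable it at boot:

    # Start kubelet now and enable it at boot
    systemctl enable kubelet --now
    # Check the kubelet status on each node (kubelet keeps restarting at this point because kubeadm init/join has not yet generated its configuration; this is expected)
    systemctl status kubelet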

On master1, master2 and master3, install HAProxy and Keepalived, the components that provide high availability (needed only for an HA cluster):

    yum install -y keepalived haproxy

On master1, edit the HAProxy configuration file /etc/haproxy/haproxy.cfg; it is distributed to master2 and master3 afterwards:

    # Back up the HAProxy configuration file
    cp /etc/haproxy/haproxy.cfg /etc/haproxy/haproxy.cfg.bak
    # Edit the HAProxy configuration file
    vi /etc/haproxy/haproxy.cfg

    global
        maxconn 2000
        ulimit-n 16384
        log 127.0.0.1 local0 err
        stats timeout 30s

    defaults
        log global
        mode http
        option httplog
        timeout connect 5000
        timeout client 50000
        timeout server 50000
        timeout http-request 15s
        timeout http-keep-alive 15s

    frontend monitor-in
        bind *:33305
        mode http
        option httplog
        monitor-uri /monitor

    listen stats
        bind *:8006
        mode http
        stats enable
        stats hide-version
        stats uri /stats
        stats refresh 30s
        stats realm Haproxy\ Statistics
        stats auth admin:admin

    frontend k8s-master
        bind 0.0.0.0:16443
        bind 127.0.0.1:16443
        mode tcp
        option tcplog
        tcp-request inspect-delay 5s
        default_backend k8s-master

    backend k8s-master
        mode tcp
        option tcplog
        option tcp-check
        balance roundrobin
        default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100
        # Adjust the entries below to match your environment
        server master1 192.168.17.3:6443 check
        server master2 192.168.17.4:6443 check
        server master3 192.168.17.5:6443 check
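The `server` lines at the end of the backend are the part that changes from one environment to another. As an illustration, they could be generated from a list of masters instead of typed by hand; the function name `haproxy_server_lines` and the `APISERVER_PORT` variable are hypothetical, while port 6443 is the API server port used in this guide.

```shell
#!/bin/sh
# Hypothetical helper: print one HAProxy "server" line per
# control-plane node. Arguments are "name=ip" pairs.
haproxy_server_lines() {
    port=${APISERVER_PORT:-6443}
    for pair in "$@"; do
        name=${pair%%=*}   # text before the first "="
        ip=${pair#*=}      # text after the first "="
        printf '    server %s %s:%s check\n' "$name" "$ip" "$port"
    done
}

# Prints the three lines used in the backend section above.
haproxy_server_lines master1=192.168.17.3 master2=192.168.17.4 master3=192.168.17.5
```

After editing the file, HAProxy's `-c` option (`haproxy -c -f /etc/haproxy/haproxy.cfg`) checks the configuration without starting the proxy, which catches mistakes such as a missing space between the server name and its address.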

Then copy /etc/haproxy/haproxy.cfg from master1 to master2 and master3:

    scp /etc/haproxy/haproxy.cfg root@master2:/etc/haproxy/haproxy.cfg
    scp /etc/haproxy/haproxy.cfg root@master3:/etc/haproxy/haproxy.cfg

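On master1, master2 and master3, edit the Keepalived configuration file. Pay attention to the settings that differ between nodes, such as router_id, state, mcast_src_ip and priority:

    # Back up the Keepalived configuration file
    cp /etc/keepalived/keepalived.conf /etc/keepalived/keepalived.conf.bak
    # Edit the Keepalived configuration file
    vi /etc/keepalived/keepalived.conf

    ! Configuration File for keepalived
    global_defs {
        ## String identifying this node, usually the hostname
        router_id master1
        script_user root
        enable_script_security
    }
    ## Health-check script
    ## keepalived runs the script periodically, evaluates the result and adjusts the priority of the vrrp_instance dynamically.
    ## If the script returns 0 and weight is greater than 0, the priority is raised accordingly.
    ## If the script returns non-zero and weight is less than 0, the priority is lowered accordingly.
    ## Otherwise the configured priority (the "priority" value below) is kept.
    vrrp_script chk_apiserver {
        script "/etc/keepalived/check_apiserver.sh"
        # Run the check every 2 seconds
        interval 2
        # Once the script is considered failed, lower the priority by 5
        weight -5
        fall 3
        rise 2
    }
    ## Define the virtual router; VR_1 is an identifier of your own choosing
    vrrp_instance VR_1 {
        ## MASTER on the primary node, BACKUP on the standby nodes
        state MASTER
        ## Network interface that the virtual IP is bound to; the same interface that carries the host IP
        interface ens32
        # IP address of this host
        mcast_src_ip 192.168.17.3
        # Virtual router ID
        virtual_router_id 100
        ## Node priority, range 0-254; MASTER must be higher than BACKUP
        priority 100
        ## nopreempt on the higher-priority node prevents it from taking the VIP back after it recovers
        nopreempt
        ## Multicast advertisement interval; must be identical on all nodes, default 1s
        advert_int 2
        ## Authentication settings; must be identical on all nodes
        authentication {
            auth_type PASS
            auth_pass K8SHA_KA_AUTH
        }
        ## Virtual IP pool; must be identical on all nodes
        virtual_ipaddress {
            ## VIP; more than one can be defined
            192.168.17.200
        }
        track_script {
            chk_apiserver
        }
    }

On master1, create the liveness monitoring script /etc/keepalived/check_apiserver.sh: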

    vi /etc/keepalived/check_apiserver.sh

    #!/bin/bash

    err=0
    for k in $(seq 1 5)
    do
        check_code=$(pgrep kube-apiserver)
        if [[ $check_code == "" ]]; then
            err=$(expr $err + 1)
            sleep 5
            continue
        else
            err=0
            break
        fi
    done

    if [[ $err != "0" ]]; then
        echo "systemctl stop keepalived"
        /usr/bin/systemctl stop keepalived
        exit 1
    else
        exit 0
    fi
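The script above is a retry loop: it looks for the kube-apiserver process up to five times, five seconds apart, and stops Keepalived only if every attempt fails. The same idea can be sketched as a reusable function that takes the check as a command. The name `retry_check` is hypothetical, and unlike the original script this sketch does not sleep after the final failed attempt.

```shell
#!/bin/sh
# Hypothetical helper: run a check command up to $1 times, sleeping
# $2 seconds between failed attempts. Returns 0 as soon as the check
# succeeds and 1 if every attempt fails.
retry_check() {
    attempts=$1
    delay=$2
    shift 2
    n=1
    while [ "$n" -le "$attempts" ]; do
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        n=$((n + 1))
        # Sleep only if another attempt is still to come.
        if [ "$n" -le "$attempts" ]; then
            sleep "$delay"
        fi
    done
    return 1
}

# Example mirroring the script above:
#   retry_check 5 5 pgrep kube-apiserver || /usr/bin/systemctl stop keepalived
```

Keeping the check as a parameter makes it easy to swap `pgrep` for something stricter later, for example an HTTP probe of the API server.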

Then copy /etc/keepalived/check_apiserver.sh from master1 to master2 and master3:

    scp /etc/keepalived/check_apiserver.sh root@master2:/etc/keepalived/check_apiserver.sh
    scp /etc/keepalived/check_apiserver.sh root@master3:/etc/keepalived/check_apiserver.sh

On each of these nodes, make /etc/keepalived/check_apiserver.sh executable:

    chmod +x /etc/keepalived/check_apiserver.sh
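The comment on `vrrp_script` in keepalived.conf describes how the script result and `weight` combine into the priority a node advertises. That rule can be written out as a few lines of arithmetic. This is only an illustration of the rule as stated in that comment: the function name `effective_priority` is hypothetical, and it ignores the `fall` and `rise` counters that decide when a script counts as failed or recovered.

```shell
#!/bin/sh
# Hypothetical helper: effective VRRP priority for a base priority,
# a vrrp_script weight and the exit status of the check script.
effective_priority() {
    base=$1
    weight=$2
    script_exit=$3
    if [ "$script_exit" -eq 0 ] && [ "$weight" -gt 0 ]; then
        # Success with a positive weight raises the priority.
        echo $((base + weight))
    elif [ "$script_exit" -ne 0 ] && [ "$weight" -lt 0 ]; then
        # Failure with a negative weight lowers the priority.
        echo $((base + weight))
    else
        # Every other combination keeps the configured priority.
        echo "$base"
    fi
}

# With the values from this guide (priority 100, weight -5):
effective_priority 100 -5 1   # check failed
effective_priority 100 -5 0   # check succeeded
```

For failover to happen through priority alone, the reduced value has to drop below the priority of at least one BACKUP node, so the gap between node priorities should be smaller than the absolute weight.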

On each of these nodes, start HAProxy and Keepalived now and enable them at boot:

    # Start haproxy now and enable it at boot
    systemctl enable haproxy --now
    # Start keepalived now and enable it at boot
    systemctl enable keepalived --now

Test that the VIP maintained by Keepalived is reachable:

    # On the host machine (Windows in this example)
    ping 192.168.17.200
    Pinging 192.168.17.200 with 32 bytes of data:
    Reply from 192.168.17.200: bytes=32 time=1ms TTL=64
    Reply from 192.168.17.200: bytes=32 time<1ms TTL=64
    Reply from 192.168.17.200: bytes=32 time=1ms TTL=64
    Reply from 192.168.17.200: bytes=32 time<1ms TTL=64
    Ping statistics for 192.168.17.200:
        Packets: Sent = 4, Received = 4, Lost = 0 (0% loss),
    Approximate round trip times in milli-seconds:
        Minimum = 0ms, Maximum = 1ms, Average = 0ms

    # On each K8S node
    ping 192.168.17.200 -c 4
    PING 192.168.17.200 (192.168.17.200) 56(84) bytes of data.
    64 bytes from 192.168.17.200: icmp_seq=1 ttl=64 time=0.058 ms
    64 bytes from 192.168.17.200: icmp_seq=2 ttl=64 time=0.055 ms
    64 bytes from 192.168.17.200: icmp_seq=3 ttl=64 time=0.077 ms
    64 bytes from 192.168.17.200: icmp_seq=4 ttl=64 time=0.064 ms
    --- 192.168.17.200 ping statistics ---
    4 packets transmitted, 4 received, 0% packet loss, time 3112ms
    rtt min/avg/max/mdev = 0.055/0.063/0.077/0.011 ms

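Next, on master1, generate and edit the configuration file that kubeadm uses to initialize the K8S control plane (the masters); it is named kubeadm-config.yaml here:

    # Change to /etc/kubernetes/
    cd /etc/kubernetes/
    # Export the default kubeadm control plane initialization settings
    kubeadm config print init-defaults > kubeadm-config.yaml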

After editing, kubeadm-config.yaml looks as follows. The values to adjust for your environment carry comments; controlPlaneEndpoint and podSubnet are settings added to the exported defaults:

    apiVersion: kubeadm.k8s.io/v1beta2
    bootstrapTokens:
    - groups:
      - system:bootstrappers:kubeadm:default-node-token
      token: abcdef.0123456789abcdef
      ttl: 24h0m0s
      usages:
      - signing
      - authentication
    kind: InitConfiguration
    localAPIEndpoint:
      advertiseAddress: 192.168.17.3 # IP of the current node (differs per node)
      bindPort: 6443
    nodeRegistration:
      criSocket: /var/run/dockershim.sock
      name: master1 # hostname of the current node (differs per node)
      taints:
      - effect: NoSchedule
        key: node-role.kubernetes.io/master
    ---
    apiServer:
      timeoutForControlPlane: 4m0s
    apiVersion: kubeadm.k8s.io/v1beta2
    certificatesDir: /etc/kubernetes/pki
    clusterName: kubernetes
    controlPlaneEndpoint: "192.168.17.200:16443" # VIP maintained by Keepalived and port served by HAProxy
    controllerManager: {}
    dns:
      type: CoreDNS
    etcd:
      local:
        dataDir: /var/lib/etcd
    imageRepository: registry.aliyuncs.com/google_containers # image registry K8S pulls its own images from
    kind: ClusterConfiguration
    kubernetesVersion: v1.20.0
    networking:
      dnsDomain: cluster.local
      serviceSubnet: 10.96.0.0/16 # CIDR range from which K8S allocates Service IPs
      podSubnet: 10.244.0.0/16 # CIDR range from which K8S allocates Pod IPs; the host, Service and Pod ranges must not overlap
    scheduler: {}

The added podSubnet setting is the same parameter as the --pod-network-cidr command-line flag. It indicates that a CNI-compliant Pod network plugin will be used (Calico, installed later, is one).
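The requirement that the host, Service and Pod ranges must not overlap can be checked with a little integer arithmetic before running `kubeadm init`. The sketch below is an illustration only: the function names `ip_to_int` and `cidr_overlap` are hypothetical, it handles IPv4 only, it assumes 64-bit shell arithmetic, and the /24 prefix for the host network in the example is an assumption (this guide only lists individual 192.168.17.x addresses).

```shell
#!/bin/sh
# Hypothetical helper: convert a dotted-quad IPv4 address to an integer.
ip_to_int() {
    old_ifs=$IFS
    IFS=.
    set -- $1          # split the address into its four octets
    IFS=$old_ifs
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Hypothetical helper: succeed (exit status 0) if two CIDR blocks
# share at least one address.
cidr_overlap() {
    a_ip=${1%/*}; a_len=${1#*/}
    b_ip=${2%/*}; b_len=${2#*/}
    # Two blocks overlap exactly when they agree on the bits covered
    # by the shorter (less specific) prefix.
    if [ "$a_len" -lt "$b_len" ]; then len=$a_len; else len=$b_len; fi
    mask=$(( (0xFFFFFFFF << (32 - len)) & 0xFFFFFFFF ))
    a=$(ip_to_int "$a_ip")
    b=$(ip_to_int "$b_ip")
    [ $(( a & mask )) -eq $(( b & mask )) ]
}

# Example with the ranges from this guide (host prefix assumed):
#   cidr_overlap 192.168.17.0/24 10.96.0.0/16  && echo "host/service overlap"
#   cidr_overlap 192.168.17.0/24 10.244.0.0/16 && echo "host/pod overlap"
#   cidr_overlap 10.96.0.0/16 10.244.0.0/16    && echo "service/pod overlap"
```

None of the three example checks should print anything for the values used here; a printed message would mean the corresponding pair needs to be changed before initialization.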

Then copy /etc/kubernetes/kubeadm-config.yaml from master1 to master2 and master3, and change the values commented as "differs per node" on each of them:

    scp /etc/kubernetes/kubeadm-config.yaml root@master2:/etc/kubernetes/kubeadm-config.yaml
    scp /etc/kubernetes/kubeadm-config.yaml root@master3:/etc/kubernetes/kubeadm-config.yaml

On all master nodes, use the control plane configuration file /etc/kubernetes/kubeadm-config.yaml to pull the images needed for control plane initialization in advance:

    kubeadm config images pull --config /etc/kubernetes/kubeadm-config.yaml

    [config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.20.0
    [config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.20.0
    [config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.20.0
    [config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.20.0
    [config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.2
    [config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.4.13-0
    [config/images] Pulled registry.aliyuncs.com/google_containers/coredns:1.7.0

Then choose master1 as the primary node (matching the Keepalived configuration) and initialize the K8S control plane with /etc/kubernetes/kubeadm-config.yaml. This generates the certificates and configuration files under /etc/kubernetes:

    kubeadm init --config /etc/kubernetes/kubeadm-config.yaml --upload-certs

    [init] Using Kubernetes version: v1.20.0
    [preflight] Running pre-flight checks
    [preflight] Pulling images required for setting up a Kubernetes cluster
    [preflight] This might take a minute or two, depending on the speed of your internet connection
    [preflight] You can also perform this action in beforehand using "kubeadm config images pull"
    [certs] Using certificateDir folder "/etc/kubernetes/pki"
    [certs] Generating "ca" certificate and key
    [certs] Generating "apiserver" certificate and key
    [certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master1] and IPs [10.96.0.1 192.168.17.3 192.168.17.200]
    [certs] Generating "apiserver-kubelet-client" certificate and key
    [certs] Generating "front-proxy-ca" certificate and key
    [certs] Generating "front-proxy-client" certificate and key
    [certs] Generating "etcd/ca" certificate and key
    [certs] Generating "etcd/server" certificate and key
    [certs] etcd/server serving cert is signed for DNS names [localhost master1] and IPs [192.168.17.3 127.0.0.1 ::1]
    [certs] Generating "etcd/peer" certificate and key
    [certs] etcd/peer serving cert is signed for DNS names [localhost master1] and IPs [192.168.17.3 127.0.0.1 ::1]
    [certs] Generating "etcd/healthcheck-client" certificate and key
    [certs] Generating "apiserver-etcd-client" certificate and key
    [certs] Generating "sa" key and public key
    [kubeconfig] Using kubeconfig folder "/etc/kubernetes"
    [endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address
    [kubeconfig] Writing "admin.conf" kubeconfig file
    [endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address
    [kubeconfig] Writing "kubelet.conf" kubeconfig file
    [endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address
    [kubeconfig] Writing "controller-manager.conf" kubeconfig file
    [endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address
    [kubeconfig] Writing "scheduler.conf" kubeconfig file
    [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
    [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
    [kubelet-start] Starting the kubelet
    [control-plane] Using manifest folder "/etc/kubernetes/manifests"
    [control-plane] Creating static Pod manifest for "kube-apiserver"
    [control-plane] Creating static Pod manifest for "kube-controller-manager"
    [control-plane] Creating static Pod manifest for "kube-scheduler"
    [etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
    [wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
    [apiclient] All control plane components are healthy after 16.635364 seconds
    [upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
    [kubelet] Creating a ConfigMap "kubelet-config-1.20" in namespace kube-system with the configuration for the kubelets in the cluster
    [upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace
    [upload-certs] Using certificate key:
    1f0eae8f50417882560ecc7ec7f08596ed1910d9f90d237f00c69eb07b8fb64c
    [mark-control-plane] Marking the node master1 as control-plane by adding the labels "node-role.kubernetes.io/master=''" and "node-role.kubernetes.io/control-plane='' (deprecated)"
    [mark-control-plane] Marking the node master1 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
    [bootstrap-token] Using token: abcdef.0123456789abcdef
    [bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
    [bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes
    [bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
    [bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
    [bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
    [bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
    [kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
    [addons] Applied essential addon: CoreDNS
    [endpoint] WARNING: port specified in controlPlaneEndpoint overrides bindPort in the controlplane address
    [addons] Applied essential addon: kube-proxy

    Your Kubernetes control-plane has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Alternatively, if you are the root user, you can run:

      export KUBECONFIG=/etc/kubernetes/admin.conf

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    You can now join any number of the control-plane node running the following command on each as root:

      kubeadm join 192.168.17.200:16443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:4316261bbf7392937c47cc2e0f4834df9d6ad51fea47817cc37684a21add36e1 \
        --control-plane --certificate-key 1f0eae8f50417882560ecc7ec7f08596ed1910d9f90d237f00c69eb07b8fb64c

    Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
    As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
    "kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join 192.168.17.200:16443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:4316261bbf7392937c47cc2e0f4834df9d6ad51fea47817cc37684a21add36e1

If initialization fails, clean up with the following commands and then initialize again:

    kubeadm reset -f
    ipvsadm --clear
    rm -rf ~/.kube

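After the control plane on master1 has been initialized, the generated directories and files are as shown in the figure below:

Following the hints printed after the successful control plane initialization on master1, we continue with the remaining work of building the highly available cluster.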

As a regular user, run the following commands:

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

As the root user, run the following command:

    export KUBECONFIG=/etc/kubernetes/admin.conf

Make sure you have run the commands above, otherwise you may see the following error:

    [root@master1 kubernetes]# kubectl get cs
    The connection to the server localhost:8080 was refused - did you specify the right host or port?

On master2 and master3, run the following command to join each node to the cluster as a control plane node (a master):

    kubeadm join 192.168.17.200:16443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:4316261bbf7392937c47cc2e0f4834df9d6ad51fea47817cc37684a21add36e1 \
        --control-plane --certificate-key 1f0eae8f50417882560ecc7ec7f08596ed1910d9f90d237f00c69eb07b8fb64c

    This node has joined the cluster and a new control plane instance was created:

    * Certificate signing request was sent to apiserver and approval was received.
    * The Kubelet was informed of the new secure connection details.
    * Control plane (master) label and taint were applied to the new node.
    * The Kubernetes control plane instances scaled up.
    * A new etcd member was added to the local/stacked etcd cluster.

    To start administering your cluster from this node, you need to run the following as a regular user:

        mkdir -p $HOME/.kube
        sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
        sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Run "kubectl get nodes" to see this node join the cluster.

As the output suggests, run the following commands on master2 and master3:

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

On node1, node2 and node3, run the following command to join each node to the cluster as a worker node:

    kubeadm join 192.168.17.200:16443 --token abcdef.0123456789abcdef \
        --discovery-token-ca-cert-hash sha256:4316261bbf7392937c47cc2e0f4834df9d6ad51fea47817cc37684a21add36e1

Listing the cluster nodes at this point shows that all of them are in the NotReady state:

    [root@master1 ~]# kubectl get nodes
    NAME      STATUS     ROLES                  AGE     VERSION
    master1   NotReady   control-plane,master   5m59s   v1.20.2
    master2   NotReady   control-plane,master   4m48s   v1.20.2
    master3   NotReady   control-plane,master   4m1s    v1.20.2
    node1     NotReady   <none>                 30s     v1.20.2
    node2     NotReady   <none>                 11s     v1.20.2
    node3     NotReady   <none>                 6s      v1.20.2

Listing the Pods in the cluster at this point shows that the coredns Pods are in the Pending state:

    [root@master1 ~]# kubectl get pods -A
    NAMESPACE     NAME                              READY   STATUS    RESTARTS   AGE
    kube-system   coredns-7f89b7bc75-252wl          0/1     Pending   0          6m12s
    kube-system   coredns-7f89b7bc75-7952s          0/1     Pending   0          6m12s
    kube-system   etcd-master1                      1/1     Running   0          6m19s
    kube-system   etcd-master2                      1/1     Running   0          5m6s
    kube-system   etcd-master3                      1/1     Running   0          4m20s
    kube-system   kube-apiserver-master1            1/1     Running   0          6m19s
    kube-system   kube-apiserver-master2            1/1     Running   0          5m9s
    kube-system   kube-apiserver-master3            1/1     Running   1          4m21s
    kube-system   kube-controller-manager-master1   1/1     Running   1          6m19s
    kube-system   kube-controller-manager-master2   1/1     Running   0          5m10s
    kube-system   kube-controller-manager-master3   1/1     Running   0          3m17s
    kube-system   kube-proxy-68q8f                  1/1     Running   0          34s
    kube-system   kube-proxy-6m986                  1/1     Running   0          5m11s
    kube-system   kube-proxy-8fklt                  1/1     Running   0          29s
    kube-system   kube-proxy-bl2rx                  1/1     Running   0          53s
    kube-system   kube-proxy-pth46                  1/1     Running   0          4m24s
    kube-system   kube-proxy-r65vj                  1/1     Running   0          6m12s
    kube-system   kube-scheduler-master1            1/1     Running   1          6m19s
    kube-system   kube-scheduler-master2            1/1     Running   0          5m9s
    kube-system   kube-scheduler-master3            1/1     Running   0          3m22s

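Both states are caused by the cluster not yet having a Pod network plugin, so the next step is to install one, named Calico.

Use "kubectl apply -f [podnetwork].yaml" to install a Pod network plugin for the K8S cluster. The plugin establishes network connectivity between Pods in the cluster: Pods on different nodes talk to each other directly by Pod IP, so the cluster nodes are transparent to the Pods. Here we choose Calico, which conforms to the CNI (Container Network Interface) standard; Flannel would work as well:

First, on the Calico release notes site https://docs.tigera.io/archive, find the version that is compatible with your K8S version. The site also contains the installation and configuration instructions, as shown in the screenshot below: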

Following the installation instructions, run the command below to install the Calico network plugin:

    # Install the Calico Operator
    kubectl create -f https://docs.projectcalico.org/archive/v3.19/manifests/tigera-operator.yaml

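If the manifest cannot be applied directly from the site, open the URL in a browser, copy the content into a local yaml file and apply that instead. For example, create /etc/kubernetes/calico-operator.yaml with the content of tigera-operator.yaml:

    kubectl create -f /etc/kubernetes/calico-operator.yaml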

Next comes the Calico resource definition file. It is not applied straight from the online file here. Instead, open the URL in a browser, copy the content into a local yaml file (for example /etc/kubernetes/calico-resources.yaml, with the content of custom-resources.yaml) and change the cidr setting to match the podSubnet value in kubeadm-config.yaml:

    # Applying the online file directly (not done here, because the cidr has to be changed)
    kubectl create -f https://docs.projectcalico.org/archive/v3.19/manifests/custom-resources.yaml

    # This section includes base Calico installation configuration.
    # For more information, see: https://docs.projectcalico.org/v3.19/reference/installation/api#operator.tigera.io/v1.Installation
    apiVersion: operator.tigera.io/v1
    kind: Installation
    metadata:
      name: default
    spec:
      # Configures Calico networking.
      calicoNetwork:
        # Note: The ipPools section cannot be modified post-install.
        ipPools:
        - blockSize: 26
          cidr: 10.244.0.0/16
          encapsulation: VXLANCrossSubnet
          natOutgoing: Enabled
          nodeSelector: all()

    kubectl create -f /etc/kubernetes/calico-resources.yaml
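Because the ipPools section cannot be changed after installation, it is worth making the cidr edit reproducible rather than doing it by hand. A minimal sketch, assuming GNU `sed` and a file that contains a single `cidr:` line as in the listing above; the function name `set_calico_cidr` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: rewrite the value of the "cidr:" key in a
# Calico custom-resources file, keeping its indentation.
set_calico_cidr() {
    file=$1
    cidr=$2
    sed -i -E "s|^([[:space:]]*cidr:[[:space:]]*).*$|\1$cidr|" "$file"
}

# Example, run before "kubectl create":
#   set_calico_cidr /etc/kubernetes/calico-resources.yaml 10.244.0.0/16
#   grep 'cidr:' /etc/kubernetes/calico-resources.yaml
```

The `|` delimiter is used in the `sed` expression because the replacement value itself contains a `/`.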

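After running these commands, use the following command to watch the deployment progress of the Calico Pods (pulling the images takes a while, so be patient):

    watch kubectl get pods -n calico-system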

Once Calico has been deployed, check the state of all Pods in the cluster with the following command; every Pod is now Running:

    [root@master1 kubernetes]# kubectl get pods -A
    NAMESPACE         NAME                                       READY   STATUS    RESTARTS   AGE
    calico-system     calico-kube-controllers-6564f5db75-9smlm   1/1     Running   0          7m34s
    calico-system     calico-node-5xg5s                          1/1     Running   0          7m34s
    calico-system     calico-node-dzmrl                          1/1     Running   0          7m34s
    calico-system     calico-node-fh5tt                          1/1     Running   0          7m34s
    calico-system     calico-node-ngpgx                          1/1     Running   0          7m34s
    calico-system     calico-node-qxkbd                          1/1     Running   0          7m34s
    calico-system     calico-node-zmtdc                          1/1     Running   0          7m34s
    calico-system     calico-typha-99996595b-lm7rh               1/1     Running   0          7m29s
    calico-system     calico-typha-99996595b-nwbcz               1/1     Running   0          7m29s
    calico-system     calico-typha-99996595b-vnzpl               1/1     Running   0          7m34s
    kube-system       coredns-7f89b7bc75-252wl                   1/1     Running   0          39m
    kube-system       coredns-7f89b7bc75-7952s                   1/1     Running   0          39m
    kube-system       etcd-master1                               1/1     Running   0          39m
    kube-system       etcd-master2                               1/1     Running   0          38m
    kube-system       etcd-master3                               1/1     Running   0          37m
    kube-system       kube-apiserver-master1                     1/1     Running   0          39m
    kube-system       kube-apiserver-master2                     1/1     Running   0          38m
    kube-system       kube-apiserver-master3                     1/1     Running   1          37m
    kube-system       kube-controller-manager-master1            1/1     Running   1          39m
    kube-system       kube-controller-manager-master2            1/1     Running   0          38m
    kube-system       kube-controller-manager-master3            1/1     Running   0          36m
    kube-system       kube-proxy-68q8f                           1/1     Running   0          33m
    kube-system       kube-proxy-6m986                           1/1     Running   0          38m
    kube-system       kube-proxy-8fklt                           1/1     Running   0          33m
    kube-system       kube-proxy-bl2rx                           1/1     Running   0          33m
    kube-system       kube-proxy-pth46                           1/1     Running   0          37m
    kube-system       kube-proxy-r65vj                           1/1     Running   0          39m
    kube-system       kube-scheduler-master1                     1/1     Running   1          39m
    kube-system       kube-scheduler-master2                     1/1     Running   0          38m
    kube-system       kube-scheduler-master3                     1/1     Running   0          36m
    tigera-operator   tigera-operator-6fdc4c585-lk294            1/1     Running   0          8m1s
    [root@master1 kubernetes]#

Once Calico has been deployed, check the state of the cluster nodes with the following command; every node is now Ready:

    [root@master1 kubernetes]# kubectl get nodes
    NAME      STATUS   ROLES                  AGE   VERSION
    master1   Ready    control-plane,master   49m   v1.20.2
    master2   Ready    control-plane,master   48m   v1.20.2
    master3   Ready    control-plane,master   47m   v1.20.2
    node1     Ready    <none>                 43m   v1.20.2
    node2     Ready    <none>                 43m   v1.20.2
    node3     Ready    <none>                 43m   v1.20.2
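Instead of re-running `kubectl get nodes` by eye, the STATUS column can be filtered in a script. The sketch below reads node listings on standard input and prints the names of nodes that are not exactly `Ready`. The function name `not_ready_nodes` is hypothetical; `--no-headers` is the kubectl option that omits the header row.

```shell
#!/bin/sh
# Hypothetical helper: read "kubectl get nodes --no-headers" output on
# stdin and print the names of nodes whose STATUS is not "Ready".
# A status such as "Ready,SchedulingDisabled" is reported as well.
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Example polling loop (assumes kubectl is configured on this host):
#   while [ -n "$(kubectl get nodes --no-headers | not_ready_nodes)" ]; do
#       sleep 5
#   done
```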

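If you want the master nodes to take part in regular Pod scheduling as well (usually avoided in production to keep the masters stable), remove the taint from the master nodes with the following command:

    kubectl taint nodes --all node-role.kubernetes.io/master-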

Finally, check the state of the cluster with the following command. The Scheduler and Controller Manager components are reported as unhealthy:

    [root@master1 ~]# kubectl get cs
    Warning: v1 ComponentStatus is deprecated in v1.19+
    NAME                 STATUS      MESSAGE                                                                                       ERROR
    scheduler            Unhealthy   Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused
    controller-manager   Unhealthy   Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused
    etcd-0               Healthy     {"health":"true"}

To fix this, comment out the line - --port=0 (put a # in front of it) in /etc/kubernetes/manifests/kube-scheduler.yaml and /etc/kubernetes/manifests/kube-controller-manager.yaml on every master node. Taking /etc/kubernetes/manifests/kube-scheduler.yaml as the example:

    [root@master1 ~]# vi /etc/kubernetes/manifests/kube-scheduler.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      creationTimestamp: null
      labels:
        component: kube-scheduler
        tier: control-plane
      name: kube-scheduler
      namespace: kube-system
    spec:
      containers:
      - command:
        - kube-scheduler
        - --authentication-kubeconfig=/etc/kubernetes/scheduler.conf
        - --authorization-kubeconfig=/etc/kubernetes/scheduler.conf
        - --bind-address=127.0.0.1
        - --kubeconfig=/etc/kubernetes/scheduler.conf
        - --leader-elect=true
        #- --port=0
        image: registry.aliyuncs.com/google_containers/kube-scheduler:v1.20.0
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: 127.0.0.1
            path: /healthz
            port: 10259
            scheme: HTTPS
          initialDelaySeconds: 10

Then restart kubelet on every master node:

    systemctl restart kubelet
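The same one-line change has to be made in two manifests on each of the three masters, so it may be convenient to script it. The following is a sketch that assumes GNU `sed`; the function name `comment_out_port_zero` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: comment out the "- --port=0" argument in a
# static Pod manifest, preserving its indentation. Lines that are
# already commented no longer match, so re-running is harmless.
comment_out_port_zero() {
    file=$1
    sed -i -E 's/^([[:space:]]*)(- --port=0[[:space:]]*)$/\1#\2/' "$file"
}

# Example (on every master node, before restarting kubelet):
#   for name in kube-scheduler kube-controller-manager; do
#       comment_out_port_zero "/etc/kubernetes/manifests/$name.yaml"
#   done
```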

    [root@master1 ~]# kubectl get cs
    Warning: v1 ComponentStatus is deprecated in v1.19+
    NAME                 STATUS    MESSAGE             ERROR
    scheduler            Healthy   ok
    controller-manager   Healthy   ok
    etcd-0               Healthy   {"health":"true"}

As a last step, we deploy a service routing and load balancing component that web applications on K8S commonly rely on: Ingress.

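K8S Ingress is a component that forwards external requests (north-south traffic) addressed to Services inside the cluster to the backend Pods. The traffic it forwards bypasses kube-proxy and goes straight to the target Pod (kube-proxy forwards the east-west traffic between Pods). Most Ingress controllers only handle layer-7 traffic: at present only HTTP/HTTPS requests are forwarded, while TCP/IP-level traffic is left to NodePort or LoadBalancer Services. The figure below, from the K8S website, illustrates how Ingress forwards traffic:

K8S officially maintains three Ingress controllers: AWS, GCE and Nginx Ingress. The first two target the Amazon and Google public clouds, so we choose Nginx Ingress here. Many third-party Ingress controllers exist as well, for example Istio Ingress, Kong Ingress and HAProxy Ingress.

According to the readme of the Ingress NGINX Controller project on GitHub, the Ingress-Nginx versions that support the current K8S version (v1.20.2) are v1.0.0 to v1.3.1; we choose v1.3.1 (which corresponds to Nginx 1.19.10+). Download the Source code package of that version from the tag list on GitHub, unpack it, and locate deploy.yaml in the deploy\static\provider\cloud directory. This file contains all the K8S resource definitions for Ingress-Nginx:

Modify the following resource definitions in deploy.yaml (the modified parts are the image values and the annotations block of the IngressClass):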

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.3.1
      name: ingress-nginx-controller
      namespace: ingress-nginx
    spec:
    ...omitted...
            image: registry.aliyuncs.com/google_containers/nginx-ingress-controller:v1.3.1
            imagePullPolicy: IfNotPresent
            lifecycle:
    ...omitted...
    ---
    apiVersion: batch/v1
    kind: Job
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.3.1
      name: ingress-nginx-admission-create
      namespace: ingress-nginx
    spec:
    ...omitted...
            image: registry.aliyuncs.com/google_containers/kube-webhook-certgen:v1.3.0
            imagePullPolicy: IfNotPresent
            name: create
    ...omitted...
    ---
    apiVersion: batch/v1
    kind: Job
    metadata:
      labels:
        app.kubernetes.io/component: admission-webhook
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.3.1
      name: ingress-nginx-admission-patch
      namespace: ingress-nginx
    spec:
    ...omitted...
            image: registry.aliyuncs.com/google_containers/kube-webhook-certgen:v1.3.0
            imagePullPolicy: IfNotPresent
            name: patch
    ...omitted...
    ---
    apiVersion: networking.k8s.io/v1
    kind: IngressClass
    metadata:
      labels:
        app.kubernetes.io/component: controller
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/part-of: ingress-nginx
        app.kubernetes.io/version: 1.3.1
      name: nginx
      annotations:
        ingressclass.kubernetes.io/is-default-class: "true"
    spec:
      controller: k8s.io/ingress-nginx
    ...omitted...

The ingressclass.kubernetes.io/is-default-class: "true" annotation makes ingress-nginx the default Ingress Class of the K8S cluster.
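To illustrate what the default class means in practice, here is a small sketch of an Ingress that omits spec.ingressClassName and therefore relies on the default IngressClass. All names in it (demo-ingress, demo.example.com, demo-svc, port 80) are placeholders invented for this example and do not appear elsewhere in this article.

```shell
#!/bin/sh
# demo_ingress_manifest (hypothetical helper): prints an Ingress manifest
# that does not set spec.ingressClassName. With a default IngressClass
# present, the cluster assigns that class when the Ingress is created.
demo_ingress_manifest() {
  cat <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: demo-ingress
  namespace: default
spec:
  rules:
  - host: demo.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: demo-svc
            port:
              number: 80
EOF
}

# Example usage on a node with kubectl configured:
#   demo_ingress_manifest | kubectl apply -f -
#   kubectl get ingress demo-ingress -o yaml
```

Without a default class, the same Ingress would need an explicit ingressClassName (nginx in this setup) to be picked up by the controller.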

Then upload the modified deploy.yaml to the /etc/kubernetes/ingress/nginx directory on the master1 node (create the directory with mkdir -p), and run the following commands on master1 to deploy the Ingress Nginx Controller:

kubectl apply -f /etc/kubernetes/ingress/nginx/deploy.yaml
# Monitor the deployment progress (ingress-nginx is the namespace)
watch kubectl get pods -n ingress-nginx
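Watching the pod list is a manual check. For scripted setups, the same check can be sketched as a small filter over the output of kubectl get pods. The helper name pods_settled is hypothetical, and the sketch assumes the default column layout (NAME, READY, STATUS, ...) and treats a pod as settled when it is either Completed (the two admission Jobs) or Running with all containers ready.

```shell
#!/bin/sh
# pods_settled (hypothetical helper): reads `kubectl get pods --no-headers`
# output on stdin. Exits 0 only if there is at least one pod and every pod
# is either Completed or Running with READY n/n; exits 1 otherwise.
pods_settled() {
  awk '
    {
      split($2, ready, "/")          # READY column, e.g. "1/1"
      if ($3 == "Completed") next    # finished admission Jobs
      if ($3 == "Running" && ready[1] == ready[2]) next
      bad = 1                        # anything else is not settled yet
    }
    END { exit (NR == 0 || bad) ? 1 : 0 }
  '
}

# Example usage on master1:
#   kubectl get pods -n ingress-nginx --no-headers | pods_settled \
#     && echo "ingress-nginx is up"
```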

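Congratulations: at this point you have successfully built a highly available K8S cluster with three masters and three workers!

From now on, whenever you deploy an application, first consider whether it can run directly on the K8S cluster!

Building on this, we can try setting up a highly available K8S cluster that uses containerd directly as the container runtime!

Finally, K8S is the foundation of Service Mesh and the core foundation of cloud native!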
