最新要闻

- 海贼王:“七武海”原型揭秘!居然来自30年前游戏《浪漫沙加2》

- 举报比亚迪排放不达标!长城汽车晒业绩:1-4月同比增长99.1% 买它还是比亚迪?_每日精选

- 焦点播报:16核R9 7945HX加持!联想公布新版拯救者R9000P参数

- 世界百事通!法拉第未来官宣:FF 91第一阶段交付5月31日开始 车主先培训

- 环球视讯!促进跨区域产业链、供应链、创新链、资金链、人才链深度融合,一大批长三角G60科创走廊跨区域合作重点项目签约

- 广州市花都区秀全中学:720分以上学生可以考虑秀中清北班-世界微头条

- 今日上映!《小美人鱼》豆瓣评论:难以接受黑人鱼、强凑CP、毁童年-焦点热门

- 即时:宝德暴芯x86处理器现身GeekBench 5:坐实就是Intel i3-10105

- 知名演员罗京民去世 曾饰演许三多的爹:张译等人发文悼念 焦点速递

- ST浩源:截至2023年5月20日,公司股东14658户,谢谢对公司的关注!|天天最新

- 升级天玑8200处理器:vivo S17 Pro现身Geekbench

- 曝小米13 Ultra欧洲售价超1.1万元:比iPhone 14 Pro、华为P60 Pro都贵_世界今日讯

- 全球球精选!破首发仅7499元!华硕天选4游戏本配锐龙9与RTX4060:高性价比真香

- 热点!散了吧!特斯拉车顶维权女车主败诉:刹车失灵观点站不住脚 没任何证据证明

- 《变形金刚7:超能勇士崛起》超燃特辑出炉:保时捷964街头飞车_热资讯

- 上市公司实控人离婚140亿归女方,盘点彤程新材投资版图

广告

手机

iphone11大小尺寸是多少?苹果iPhone11和iPhone13的区别是什么?

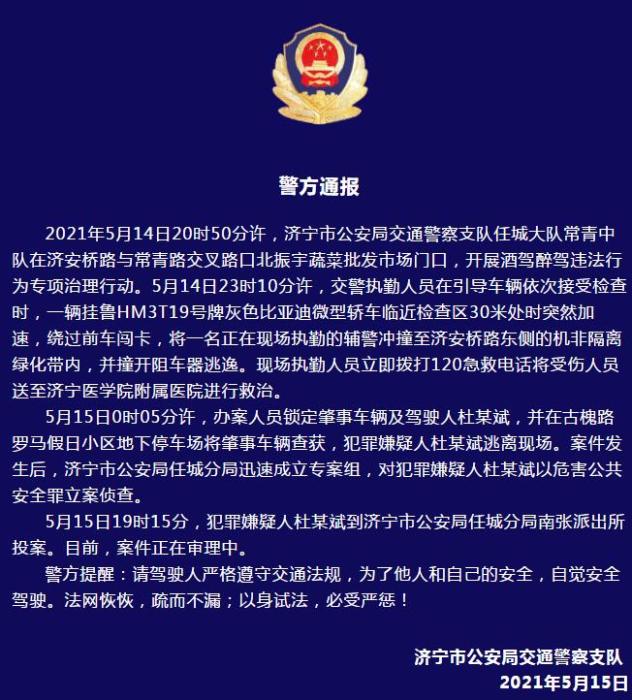

警方通报辅警执法直播中被撞飞:犯罪嫌疑人已投案

- iphone11大小尺寸是多少?苹果iPhone11和iPhone13的区别是什么?

- 警方通报辅警执法直播中被撞飞:犯罪嫌疑人已投案

- 男子被关545天申国赔:获赔18万多 驳回精神抚慰金

- 3天内26名本土感染者,辽宁确诊人数已超安徽

- 广西柳州一男子因纠纷杀害三人后自首

- 洱海坠机4名机组人员被批准为烈士 数千干部群众悼念

家电

A bug encountered in the hands-on WordCount exercise from the Atguigu (尚硅谷) Hadoop course


The error log and exception are as follows:

    SLF4J: Class path contains multiple SLF4J bindings.
    SLF4J: Found binding in [jar:file:/D:/Environment/RepMaven/org/slf4j/slf4j-reload4j/1.7.36/slf4j-reload4j-1.7.36.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: Found binding in [jar:file:/D:/Environment/RepMaven/org/slf4j/slf4j-log4j12/1.7.30/slf4j-log4j12-1.7.30.jar!/org/slf4j/impl/StaticLoggerBinder.class]
    SLF4J: See http://www.slf4j.org/codes.html#multiple_bindings for an explanation.
    SLF4J: Actual binding is of type [org.slf4j.impl.Reload4jLoggerFactory]
    2023-05-26 13:55:26,083 WARN [org.apache.hadoop.util.NativeCodeLoader] - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    2023-05-26 13:55:26,580 WARN [org.apache.hadoop.metrics2.impl.MetricsConfig] - Cannot locate configuration: tried hadoop-metrics2-jobtracker.properties,hadoop-metrics2.properties
    2023-05-26 13:55:26,783 INFO [org.apache.hadoop.metrics2.impl.MetricsSystemImpl] - Scheduled Metric snapshot period at 10 second(s).
    2023-05-26 13:55:26,783 INFO [org.apache.hadoop.metrics2.impl.MetricsSystemImpl] - JobTracker metrics system started
    2023-05-26 13:55:27,228 WARN [org.apache.hadoop.mapreduce.JobResourceUploader] - Hadoop command-line option parsing not performed. Implement the Tool interface and execute your application with ToolRunner to remedy this.
    2023-05-26 13:55:27,351 WARN [org.apache.hadoop.mapreduce.JobResourceUploader] - No job jar file set. User classes may not be found. See Job or Job#setJar(String).
    2023-05-26 13:55:27,383 INFO [org.apache.hadoop.mapreduce.JobSubmitter] - Cleaning up the staging area file:/tmp/hadoop/mapred/staging/yeqiu523558444/.staging/job_local523558444_0001
    Exception in thread "main" java.lang.UnsatisfiedLinkError: "boolean org.apache.hadoop.io.nativeio.NativeIO$Windows.access0(java.lang.String, int)"
        at org.apache.hadoop.io.nativeio.NativeIO$Windows.access0(Native Method)
        at org.apache.hadoop.io.nativeio.NativeIO$Windows.access(NativeIO.java:793)
        at org.apache.hadoop.fs.FileUtil.canRead(FileUtil.java:1249)
        at org.apache.hadoop.fs.FileUtil.list(FileUtil.java:1454)
        at org.apache.hadoop.fs.RawLocalFileSystem.listStatus(RawLocalFileSystem.java:601)
        at org.apache.hadoop.fs.FileSystem.listStatus(FileSystem.java:1972)
        at org.apache.hadoop.fs.FileSystem.listStatus(FileSystem.java:2014)
        at org.apache.hadoop.fs.FileSystem$4.<init>(FileSystem.java:2180)
        at org.apache.hadoop.fs.FileSystem.listLocatedStatus(FileSystem.java:2179)
        at org.apache.hadoop.fs.ChecksumFileSystem.listLocatedStatus(ChecksumFileSystem.java:783)
        at org.apache.hadoop.mapreduce.lib.input.FileInputFormat.singleThreadedListStatus(FileInputFormat.java:320)
        at org.apache.hadoop.mapreduce.lib.input.FileInputFormat.listStatus(FileInputFormat.java:279)
        at org.apache.hadoop.mapreduce.lib.input.FileInputFormat.getSplits(FileInputFormat.java:404)
        at org.apache.hadoop.mapreduce.JobSubmitter.writeNewSplits(JobSubmitter.java:310)
        at org.apache.hadoop.mapreduce.JobSubmitter.writeSplits(JobSubmitter.java:327)
        at org.apache.hadoop.mapreduce.JobSubmitter.submitJobInternal(JobSubmitter.java:200)
        at org.apache.hadoop.mapreduce.Job$11.run(Job.java:1571)
        at org.apache.hadoop.mapreduce.Job$11.run(Job.java:1568)
        at java.base/java.security.AccessController.doPrivileged(AccessController.java:712)
        at java.base/javax.security.auth.Subject.doAs(Subject.java:439)
        at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1878)
        at org.apache.hadoop.mapreduce.Job.submit(Job.java:1568)
        at org.apache.hadoop.mapreduce.Job.waitForCompletion(Job.java:1589)
        at com.atgui.mapreduce.wordcount.WordCountDriver.main(WordCountDriver.java:47)

Maven dependencies (groupId:artifactId:version):

    org.apache.hadoop:hadoop-client:3.3.4
    junit:junit:4.12
    org.slf4j:slf4j-log4j12:1.7.30

WordCountMapper:

    package com.atgui.mapreduce.wordcount;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;

    public class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

        private Text outK = new Text();
        private IntWritable outV = new IntWritable(1);

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // 1. Read one line of input, e.g. "atguigu atguigu"
            String line = value.toString();

            // 2. Split it into words: "atguigu", "atguigu"
            String[] words = line.split(" ");

            // 3. Emit one (word, 1) pair per word
            for (String word : words) {
                // Wrap the word in outK
                outK.set(word);
                // Write the pair out
                context.write(outK, outV);
            }
        }
    }

WordCountReducer:

    package com.atgui.mapreduce.wordcount;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text; // must be Hadoop's Text, not org.w3c.dom.Text
    import org.apache.hadoop.mapreduce.Reducer;

    import java.io.IOException;

    public class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

        private IntWritable outV = new IntWritable();

        @Override
        protected void reduce(Text key, Iterable<IntWritable> values, Context context) throws IOException, InterruptedException {
            int sum = 0;
            // Input looks like: atguigu, (1, 1)
            for (IntWritable value : values) {
                sum += value.get();
            }
            outV.set(sum);
            // Write the total out
            context.write(key, outV);
        }
    }
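To make the data flow of the Mapper and Reducer above easier to follow, here is a minimal plain-Java sketch of the same two phases with no Hadoop classes involved. The class `LocalWordCount` and its method names are hypothetical and only illustrate the logic; it is not how Hadoop schedules or shuffles the work.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical stand-in for the Hadoop job; it only mirrors the map/reduce logic.
public class LocalWordCount {

    // Map phase: emit one (word, 1) pair per space-separated token of a line.
    static List<Map.Entry<String, Integer>> map(String line) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String word : line.split(" ")) {
            pairs.add(new SimpleEntry<>(word, 1));
        }
        return pairs;
    }

    // Reduce phase: sum the values of all pairs that share the same key.
    static Map<String, Integer> reduce(List<Map.Entry<String, Integer>> pairs) {
        Map<String, Integer> totals = new TreeMap<>();
        for (Map.Entry<String, Integer> pair : pairs) {
            totals.merge(pair.getKey(), pair.getValue(), Integer::sum);
        }
        return totals;
    }

    // Runs both phases over a list of input lines.
    static Map<String, Integer> count(List<String> lines) {
        List<Map.Entry<String, Integer>> pairs = new ArrayList<>();
        for (String line : lines) {
            pairs.addAll(map(line));
        }
        return reduce(pairs);
    }

    public static void main(String[] args) {
        // prints {atguigu=2, cls=1, ss=2}
        System.out.println(count(Arrays.asList("atguigu atguigu", "ss ss", "cls")));
    }
}
```

Because this version touches no native code, it runs on Windows without `hadoop.dll`, which can help separate logic errors from the environment problem discussed here.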

WordCountDriver:

    package com.atgui.mapreduce.wordcount;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    import java.io.IOException;

    public class WordCountDriver {

    //    static {
    //        try {
    //            System.load("D:\\Environment\\hadoop-3.1.0\\bin\\hadoop.dll");
    //        } catch (UnsatisfiedLinkError e) {
    //            System.err.println("Native code library failed to load.\n" + e);
    //            System.exit(1);
    //        }
    //    }

        public static void main(String[] args) throws IOException, InterruptedException, ClassNotFoundException {
            // 1. Get the job
            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf);

            // 2. Set the jar by class
            job.setJarByClass(WordCountDriver.class);

            // 3. Wire up the mapper and reducer
            job.setMapperClass(WordCountMapper.class);
            job.setReducerClass(WordCountReducer.class);

            // 4. Set the key/value types of the map output
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);

            // 5. Set the key/value types of the final output
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // 6. Set the input and output paths
            FileInputFormat.setInputPaths(job, new Path("D:\\Environment\\input\\inputword"));
            FileOutputFormat.setOutputPath(job, new Path("D:\\Environment\\hadoop\\output1"));

            // 7. Submit the job
            boolean result = job.waitForCompletion(true);
            System.exit(result ? 0 : 1);
        }
    }

I asked Claude about this, and it answered as follows:

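This error occurs because the WordCount program, when run on Windows, tries to call the access0 method of Hadoop's NativeIO class but cannot find the matching JNI library, which raises an UnsatisfiedLinkError. NativeIO is the class Hadoop uses for certain local file operations, and it relies on JNI to call the Windows API. To run a Hadoop program on Windows, you need to copy two files, winutils.exe and hadoop.dll, from the Hadoop distribution and set the relevant environment variables. Only then can the Hadoop program load both files and perform local file operations. So the cause of this error is one of the following:

- winutils.exe and hadoop.dll were never obtained from the Hadoop distribution.

- The two files were obtained, but the environment variables are set incorrectly, so Hadoop cannot load them.

Solution: add the code below to your driver class. If the job then runs, the problem was hadoop.dll.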
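Both causes above can be checked before submitting the job. The following is a minimal diagnostic sketch, not part of Hadoop or of the original post: the class `HadoopNativeCheck` and its methods are hypothetical names, and it assumes that the Hadoop home directory is given by the `hadoop.home.dir` system property or the `HADOOP_HOME` environment variable, with both files expected under its `bin` folder.

```java
import java.io.File;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper; it only inspects the file system and never loads the DLL.
public class HadoopNativeCheck {

    // Returns which of the two required files are absent from <hadoopHome>/bin.
    static List<String> missingNativeFiles(Path hadoopHome) {
        List<String> missing = new ArrayList<>();
        for (String name : new String[] {"winutils.exe", "hadoop.dll"}) {
            if (!Files.isRegularFile(hadoopHome.resolve("bin").resolve(name))) {
                missing.add(name);
            }
        }
        return missing;
    }

    // True if some directory on the given search path contains hadoop.dll.
    static boolean dllOnSearchPath(String searchPath) {
        for (String dir : searchPath.split(File.pathSeparator)) {
            if (!dir.isEmpty() && new File(dir, "hadoop.dll").isFile()) {
                return true;
            }
        }
        return false;
    }

    public static void main(String[] args) {
        // Assumption: the home directory comes from -Dhadoop.home.dir or HADOOP_HOME.
        String home = System.getProperty("hadoop.home.dir", System.getenv("HADOOP_HOME"));
        if (home == null) {
            System.out.println("Neither hadoop.home.dir nor HADOOP_HOME is set");
            return;
        }
        System.out.println("Missing from " + home + "\\bin: " + missingNativeFiles(Paths.get(home)));
        System.out.println("hadoop.dll on java.library.path: "
                + dllOnSearchPath(System.getProperty("java.library.path", "")));
    }
}
```

An empty "Missing" list together with `true` on the second line suggests the files are in place, in which case a version mismatch between the DLL and the `hadoop-client` dependency would be the next thing to rule out.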

    static {
        try {
            System.load("D:\\Environment\\hadoop-3.1.0\\bin\\hadoop.dll");
        } catch (UnsatisfiedLinkError e) {
            System.err.println("Native code library failed to load.\n" + e);
            System.exit(1);
        }
    }
