最新要闻

- 环球简讯:爵士力克国王将湖人挤出附加赛区 迷失盐湖城小萨准三双数据难掩低迷状态

- 入睡妙招!研究表明穿袜子睡觉更助眠

- 全球热消息:AMD Zen4霸气!移动版12核心解锁130W 直追170W桌面12核心

- "周杰伦演唱会门票"登顶微博热搜 14万张秒售罄

- 全球热推荐:今天春分白昼长了!全国春日地图出炉 看看春天到哪了

- 天天热议:汽车界“海底捞服务”!蔚来2023无忧服务发布:11600元/年

- 世界聚焦:重庆不再实行旧车置换:直接给予新车补贴 总计达3000万

- 世界报道:跨界做智能手表 比亚迪回应:消息属实 4月上新

- 对标《原神》!二次元开放世界游戏《鸣潮》开启测试招募

- 每日视点!海关总署:2月下旬以来我国出口用箱量持续增长

- 国产纯电跑车前途K50美国秽土转生:换了名称、LOGO还没变

- 全球最新:40万级领先行业两代 赵长江:腾势N7月销量将轻松破万 抢夺BBA用户

- 【全球速看料】沙县小吃旗舰店包间最低消费300元 网友:吃的完吗?

- 全球头条:英国小镇被巨型老鼠入侵:像猫一样大 悬崖都要被挖塌了

- 天天观天下!广东人睡觉时间全国最晚:“打工人”平均睡眠时长7.5小时

- 一根USB线就能偷走韩系车!现代、起亚已开始免费送车主方向盘锁

广告

手机

iphone11大小尺寸是多少?苹果iPhone11和iPhone13的区别是什么?

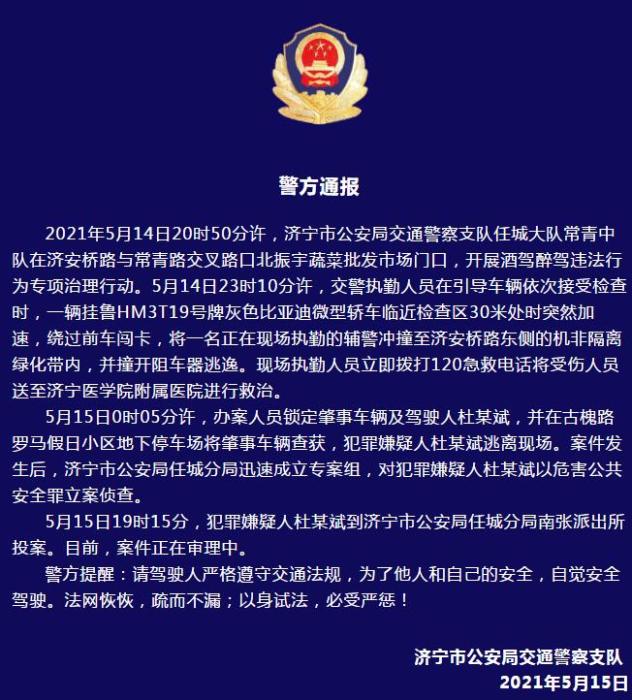

警方通报辅警执法直播中被撞飞:犯罪嫌疑人已投案

- iphone11大小尺寸是多少?苹果iPhone11和iPhone13的区别是什么?

- 警方通报辅警执法直播中被撞飞:犯罪嫌疑人已投案

- 男子被关545天申国赔:获赔18万多 驳回精神抚慰金

- 3天内26名本土感染者,辽宁确诊人数已超安徽

- 广西柳州一男子因纠纷杀害三人后自首

- 洱海坠机4名机组人员被批准为烈士 数千干部群众悼念

家电

Python Text Analysis of Doupo Cangqiong (斗破苍穹, Battle Through the Heavens)

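This post uses Python to analyze the novel: word segmentation, word frequencies, a word cloud, a ranking of characters by number of appearances, and so on.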

1. Word segmentation

Segment the text into words and write the result to a text file.

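First create your own .txt file to serve as a custom dictionary for jieba: one entry per line, consisting of the word followed by an optional frequency and an optional part-of-speech tag, separated by spaces, and saved as UTF-8. The script loads it with `jieba.load_userdict` before segmenting: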

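```python
import re

import jieba

# Load the custom dictionary with load_userdict so that character names
# and other domain terms are kept as single tokens
jieba.load_userdict("1.txt")

# Read the novel (the source file is GBK-encoded)
with open("doupo.txt", "r", encoding="gbk") as f:
    text = f.read()

# Remove punctuation and whitespace with a regular expression.
# A raw string avoids invalid-escape warnings, and the full-width (Chinese)
# punctuation is listed alongside the ASCII forms. Matches are replaced with
# a space rather than deleted, so the words on either side of a punctuation
# mark are not glued together before segmentation.
text = re.sub(
    r"[\s.!/_,$%^*(+\"\-]+|[+——！，。？、~@#￥%……&*（）：；《》“”‘’»〔〕]+",
    " ",
    text,
)

# Segment the text
words = jieba.cut(text)

# Drop empty or whitespace-only tokens and join the rest with spaces
result = " ".join(word for word in words if word.strip())

# Save the segmentation result to a txt file
with open("output.txt", "w", encoding="utf-8") as f:
    f.write(result)
```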
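The character class in the regular expression above has to list every punctuation mark explicitly, and it is easy to miss one of the full-width forms a Chinese novel actually uses. As an alternative (a minimal sketch, not the author's method), the standard `unicodedata` module can classify characters by Unicode general category: anything whose category starts with `P` (punctuation) or `S` (symbol), plus any whitespace, becomes a space. The helper name `strip_punctuation` is hypothetical, and note that it also removes symbols such as `+` or `#`, which may or may not be what you want.

```python
import unicodedata


def strip_punctuation(text: str) -> str:
    """Replace punctuation, symbols and whitespace with single spaces.

    Hypothetical helper: relies on Unicode general categories instead of a
    hand-written regex, so full-width marks such as "，" "。" "……" "——"
    and the quotes “ ” are all caught.
    """
    pieces = []
    for ch in text:
        category = unicodedata.category(ch)  # e.g. "Po", "Pd", "Sc", "Lo"
        if ch.isspace() or category[0] in ("P", "S"):
            pieces.append(" ")
        else:
            pieces.append(ch)
    # Collapse runs of spaces and trim both ends
    return " ".join("".join(pieces).split())


if __name__ == "__main__":
    sample = "萧炎：“三十年河东，三十年河西！”"
    print(strip_punctuation(sample))  # 萧炎 三十年河东 三十年河西
```

In step 1 this would stand in for the `re.sub(...)` call, i.e. `text = strip_punctuation(text)` before calling `jieba.cut`.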

2. Word frequency

```python
from collections import Counter

# Read the segmentation result written in step 1
with open("output.txt", "r", encoding="utf-8") as f:
    text = f.read()

# output.txt is already segmented and space-separated, so split on whitespace
# instead of running jieba again (a second pass without the custom
# dictionary could split character names apart)
words = text.split()

# Count word frequencies, keeping only words longer than one character
word_count = Counter(word for word in words if len(word) > 1)

# Print the words sorted by frequency, most frequent first
for word, count in word_count.most_common():
    print(word, count)
```

There are too many words to show them all here.
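To print only a top list rather than every word, `collections.Counter` can do the counting and `most_common(n)` returns the n highest counts directly; per the Python documentation, words with equal counts keep the order in which they were first seen. A minimal sketch; the helper name `top_words` and the sample tokens are made up for illustration.

```python
from collections import Counter


def top_words(tokens, n=10, min_len=2):
    """Return the n most frequent tokens of at least min_len characters.

    Hypothetical helper mirroring step 2: tokens are the space-separated
    words from output.txt, and single-character words are ignored.
    """
    counts = Counter(tok for tok in tokens if len(tok) >= min_len)
    # most_common(n) sorts by count, highest first; equal counts keep
    # the order in which the words were first encountered
    return counts.most_common(n)


if __name__ == "__main__":
    tokens = "萧炎 看着 药老 萧炎 的 萧炎 药老 纳兰嫣然".split()
    print(top_words(tokens, n=2))  # [('萧炎', 3), ('药老', 2)]
```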

3. Drawing a word cloud

```python
from collections import Counter

import matplotlib.pyplot as plt
from wordcloud import WordCloud

# Reuse the cleaned, segmented text from step 1 so that punctuation
# such as "……" does not end up in the cloud
with open("output.txt", "r", encoding="utf-8") as f:
    words = f.read().split()

# Count word frequencies (only words longer than one character)
word_count = Counter(word for word in words if len(word) > 1)

# Create the word cloud. font_path must point to a font that contains
# Chinese glyphs, otherwise the words render as empty boxes.
wc = WordCloud(
    font_path="云峰静龙行书.ttf",
    background_color="white",
    max_words=500,
    max_font_size=200,
    width=1000,
    height=800,
    margin=5,
).generate_from_frequencies(word_count)

plt.imshow(wc, interpolation="bilinear")
plt.axis("off")
plt.show()
```
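In a typical novel, frequent two-character words often include function words such as 一个, 没有 or 什么, which can crowd the character names out of the cloud. Because step 3 passes precomputed counts to `WordCloud.generate_from_frequencies`, any stop-word filtering has to happen on those counts first. A minimal sketch under stated assumptions: the helper name `cloud_frequencies` and the tiny `STOPWORDS` set are hypothetical, and a real analysis would load a full Chinese stop-word list from a file.

```python
from collections import Counter

# Hypothetical, deliberately tiny stop-word set; replace with a full list
STOPWORDS = {"一个", "没有", "什么", "自己", "他们", "这个"}


def cloud_frequencies(tokens, stopwords=STOPWORDS, max_words=500, min_len=2):
    """Build a {word: count} dict for WordCloud.generate_from_frequencies.

    Drops words shorter than min_len and stop words, then keeps only the
    max_words most frequent entries.
    """
    counts = Counter(
        tok for tok in tokens if len(tok) >= min_len and tok not in stopwords
    )
    return dict(counts.most_common(max_words))


if __name__ == "__main__":
    tokens = "萧炎 一个 没有 萧炎 斗气 药老 斗气 萧炎".split()
    print(cloud_frequencies(tokens, max_words=2))  # {'萧炎': 3, '斗气': 2}
```

With this helper, step 3 would pass `cloud_frequencies(words)` to `generate_from_frequencies`; the default `max_words=500` matches the value given to `WordCloud` there.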

4. Drawing a pie chart

Note: the pie chart covers the 10 characters who appear most often in the novel.

```python
from collections import Counter

import jieba
import matplotlib.pyplot as plt

# Configure fonts before any text is drawn: SimHei can render Chinese, and
# font.size is read when each label is created, so setting it after the pie
# has been drawn would only affect later text such as the title
plt.rcParams["font.sans-serif"] = ["SimHei"]
plt.rcParams["font.size"] = 16

# Load the character-name list. person_names.txt is also used as a jieba
# dictionary, so a line may carry a frequency and a POS tag after the name;
# keep only the first field and store the names in a set for fast lookup
with open("person_names.txt", "r", encoding="utf-8") as f:
    person_names = {line.split()[0] for line in f if line.strip()}

# Add the character names to jieba's dictionary
jieba.load_userdict("person_names.txt")

# Read and segment the novel
with open("doupo.txt", "r", encoding="gbk") as f:
    text = f.read()
words = jieba.lcut(text)

# Count how often each character name appears
name_freq = Counter(word for word in words if word in person_names)

# Take the 10 most frequent names
top_words = name_freq.most_common(10)
labels = [name for name, _ in top_words]
sizes = [count for _, count in top_words]

# Draw the pie chart at 8 x 8 inches
fig, ax = plt.subplots(figsize=(8, 8))
ax.pie(sizes, labels=labels, autopct="%1.1f%%", startangle=150)
ax.set_title("斗破苍穹人物出场次数饼状图")  # "Doupo Cangqiong: character appearances"
plt.show()
```
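Two caveats apply to this chart: a character referred to by more than one name is counted separately under each name, and the percentages printed by `autopct` are shares of the plotted top-10 total, not of all name mentions in the book. A minimal sketch addressing both, under stated assumptions: the helper names `parse_names`, `character_counts` and `top_shares`, the alias map and the sample tokens are all hypothetical, and for the real novel the alias map would have to be compiled by hand. `parse_names` keeps only the first field of each line, since a jieba dictionary line may also carry a frequency and a tag.

```python
from collections import Counter


def parse_names(lines):
    """Extract names from jieba dictionary lines ("word [freq] [tag]")."""
    return {line.split()[0] for line in lines if line.strip()}


def character_counts(tokens, names, aliases=None):
    """Count name mentions, folding each alias into its canonical name."""
    aliases = aliases or {}
    counts = Counter()
    for tok in tokens:
        canonical = aliases.get(tok, tok)  # unmapped tokens stay as they are
        if canonical in names:
            counts[canonical] += 1
    return counts


def top_shares(counts, n=10):
    """Return (name, count, percent of the top-n total) for the top n names."""
    top = counts.most_common(n)
    total = sum(c for _, c in top)
    return [(name, c, 100 * c / total) for name, c in top]


if __name__ == "__main__":
    names = parse_names(["萧炎 1000 nr", "药老", "药尘", "", "纳兰嫣然"])
    aliases = {"药尘": "药老"}  # hypothetical alias map
    tokens = "萧炎 药尘 药老 萧炎 纳兰嫣然 萧炎".split()
    for name, c, pct in top_shares(character_counts(tokens, names, aliases), n=2):
        print(f"{name} {c} {pct:.1f}%")
    # 萧炎 3 60.0%
    # 药老 2 40.0%
```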
