最新要闻

- 京东客服确认:百亿补贴商品不支持价保!

- 世界消息!“点对点”自动驾驶 红旗发布E702官图:百公里人工接管小于0.5次

- 速讯:2499元 荣耀观影眼镜发布:轻至80g、500nit入眼亮度

- 世界实时:荣耀最强旗舰!一图了解荣耀Magic5至臻版:6699元

- 环球焦点!超薄+四种体验:冈本Okamoto金装系列1.7元/枚大促

- 要闻:拖胆选号怎么玩_拖胆选号

- 每日播报!《消费者报告》公布最不可靠的10款电动车:特斯拉双车上榜

- 微头条丨荣耀Magic 5系列亮相:曲面屏也能拥有直屏体验

- 天天精选!女子算命核桃树挡姻缘 家人将树砍掉:当事人称要相信科学

- 短讯!熬夜后心里咯噔一下要注意:有三大风险

- 全球快讯:浪费人才?酒店回应去一本院校招聘洗碗工:2千工资对应发展空间广

- 环球观热点:努比亚Z50 Ultra缩小系统固件:仅8个不可卸载应用

- 【天天快播报】特斯拉失控、单踏板风波不断 为何还买?吉利李书福:建议大家买国产新能源汽车

- 2月XGP最佳游戏玩家投票:俄罗斯3A大作《原子之心》荣获第一

- 3月6日译名发布:乔治·桑托斯

- 黄鳝吃什么东西

广告

手机

iphone11大小尺寸是多少?苹果iPhone11和iPhone13的区别是什么?

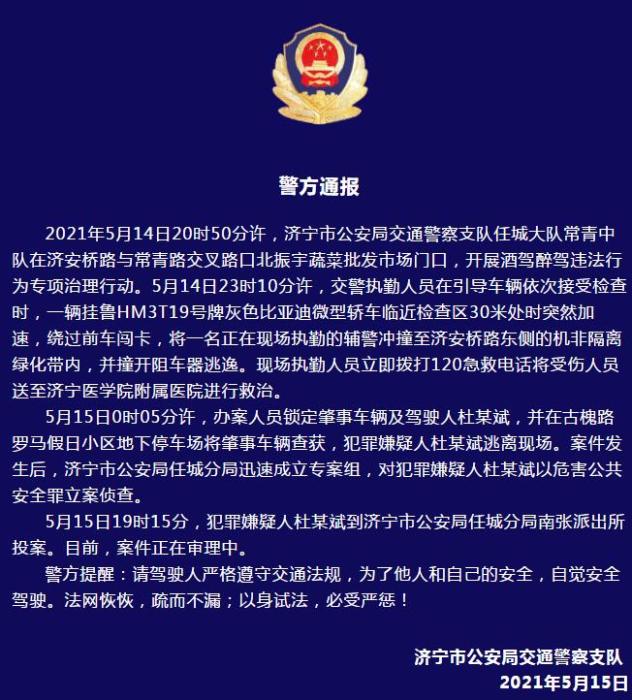

警方通报辅警执法直播中被撞飞:犯罪嫌疑人已投案

- iphone11大小尺寸是多少?苹果iPhone11和iPhone13的区别是什么?

- 警方通报辅警执法直播中被撞飞:犯罪嫌疑人已投案

- 男子被关545天申国赔:获赔18万多 驳回精神抚慰金

- 3天内26名本土感染者,辽宁确诊人数已超安徽

- 广西柳州一男子因纠纷杀害三人后自首

- 洱海坠机4名机组人员被批准为烈士 数千干部群众悼念

家电

Extracting MySQL data to HDFS with DataX


1. Install DataX

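Download datax.tar.gz from https://datax-opensource.oss-cn-hangzhou.aliyuncs.com/202210/datax.tar.gz and extract it into a directory of your choice (mine is /opt/conf/datax-20230301). Extraction creates a datax directory there; its contents are shown in the figure below. Then, in the DataX installation directory, run the following command to grant execute permissions: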

sudo chmod -R 755 ./*

2. Verify the DataX installation

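Run the following command and check whether DataX starts correctly:

/opt/conf/datax-20230301/datax/bin/datax.py /opt/conf/datax-20230301/datax/job/job.json

When it starts and runs to completion, the result looks like the figure below. If it does not run correctly, the file /opt/conf/datax-20230301/datax/bin/datax.py is not UTF-8 encoded and has to be re-encoded. Replacing it with my re-encoded copy (datax.py, attached to the original post) makes it work.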
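If you would rather fix the encoding yourself than download a replacement file, the re-encoding step can be sketched in Python as below. This is a minimal sketch, not the author's method: it assumes the file's current encoding is GBK (the post does not say which encoding it is), the function names are hypothetical, and it keeps a `.bak` copy of the original bytes.

```python
from pathlib import Path


def is_utf8(path: Path) -> bool:
    """Return True if the file's bytes decode cleanly as UTF-8."""
    try:
        path.read_bytes().decode("utf-8")
    except UnicodeDecodeError:
        return False
    return True


def ensure_utf8(path: Path, source_encoding: str = "gbk") -> bool:
    """Rewrite `path` as UTF-8 if it is not UTF-8 already.

    `source_encoding` is an assumption (GBK is a common non-UTF-8 encoding
    for Chinese text); change it if the file uses another encoding.
    Returns True if the file was rewritten, False if it was already UTF-8.
    """
    if is_utf8(path):
        return False
    raw = path.read_bytes()
    # Decode first, so a wrong encoding guess raises before anything is written.
    text = raw.decode(source_encoding)
    # Keep the original bytes next to the file, e.g. datax.py.bak.
    path.with_name(path.name + ".bak").write_bytes(raw)
    # Overwriting an existing file keeps its permission bits (the chmod above).
    path.write_bytes(text.encode("utf-8"))
    return True
```

For example, `ensure_utf8(Path("/opt/conf/datax-20230301/datax/bin/datax.py"))` returns `True` if it rewrote the file and `False` if the file already decoded as UTF-8.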

3. Write the job configuration file

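In the job folder under the DataX installation directory, create the configuration file with the following command:

vim job_air_data_source_mysql_hdfs.json

Then paste the JSON below into the file you just opened and save it.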

{
  "job": {
    "setting": {
      "speed": {
        "channel": 3
      },
      "errorLimit": {
        "record": 0,
        "percentage": 0.02
      }
    },
    "content": [
      {
        "reader": {
          "name": "mysqlreader",
          "parameter": {
            "username": "root",
            "password": "root",
            "column": ["*"],
            "splitPk": "id",
            "connection": [
              {
                "table": ["air_data_source"],
                "jdbcUrl": ["jdbc:mysql://master:3306/air_data"]
              }
            ]
          }
        },
        "writer": {
          "name": "hdfswriter",
          "parameter": {
            "defaultFS": "hdfs://master:9820",
            "fileType": "TEXT",
            "path": "/user/hive/warehouse/air_data.db/air_data_source",
            "fileName": "air_data_source_202302",
            "column": [
              {"name": "id", "type": "STRING"},
              {"name": "airlinelogo", "type": "STRING"},
              {"name": "airlineshortcompany", "type": "STRING"},
              {"name": "arractcross", "type": "STRING"},
              {"name": "arracttime", "type": "STRING"},
              {"name": "arrairport", "type": "STRING"},
              {"name": "arrcode", "type": "STRING"},
              {"name": "arrontimerate", "type": "STRING"},
              {"name": "arrplancross", "type": "STRING"},
              {"name": "arrplantime", "type": "STRING"},
              {"name": "arrterminal", "type": "STRING"},
              {"name": "checkintable", "type": "STRING"},
              {"name": "checkintablewidth", "type": "STRING"},
              {"name": "depactcross", "type": "STRING"},
              {"name": "depacttime", "type": "STRING"},
              {"name": "depairport", "type": "STRING"},
              {"name": "depcode", "type": "STRING"},
              {"name": "depplancross", "type": "STRING"},
              {"name": "depplantime", "type": "STRING"},
              {"name": "depterminal", "type": "STRING"},
              {"name": "flightno", "type": "STRING"},
              {"name": "flightstate", "type": "STRING"},
              {"name": "localdate", "type": "STRING"},
              {"name": "mainflightno", "type": "STRING"},
              {"name": "shareflag", "type": "STRING"},
              {"name": "statecolor", "type": "STRING"}
            ],
            "writeMode": "truncate",
            "fieldDelimiter": "\u0001",
            "compress": "GZIP"
          }
        }
      }
    ]
  }
}

4. Create the Hive database and table

create database air_data;
use air_data;
CREATE TABLE `air_data_source`(
  `id` int COMMENT "primary key",
  `airlinelogo` string COMMENT "airline logo",
  `airlineshortcompany` string COMMENT "airline short name",
  `arractcross` string,
  `arracttime` string COMMENT "actual arrival time",
  `arrairport` string,
  `arrcode` string,
  `arrontimerate` string COMMENT "on-time arrival rate",
  `arrplancross` string,
  `arrplantime` string COMMENT "scheduled arrival time",
  `arrterminal` string,
  `checkintable` string,
  `checkintablewidth` string,
  `depactcross` string,
  `depacttime` string COMMENT "actual departure time",
  `depairport` string COMMENT "departure airport name",
  `depcode` string COMMENT "departure airport code",
  `depplancross` string,
  `depplantime` string COMMENT "scheduled departure time",
  `depterminal` string,
  `flightno` string COMMENT "flight number",
  `flightstate` string COMMENT "flight status",
  `localdate` string,
  `mainflightno` string,
  `shareflag` string,
  `statecolor` string)
COMMENT "raw aviation data table"
ROW FORMAT SERDE "org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe"
STORED AS INPUTFORMAT "org.apache.hadoop.mapred.TextInputFormat"
OUTPUTFORMAT "org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat";

With Hive's default warehouse location, this table's data directory is /user/hive/warehouse/air_data.db/air_data_source, the same path the hdfswriter in the job file writes to. Once these statements have run, you can move on to extracting the data.
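The writer's `column` list in the job file and the Hive table must name the same 26 columns in the same order, because the text files carry no column names and Hive maps fields by position. Typing the list twice invites drift, so one option is to generate both pieces from a single Python list. This is a hypothetical helper sketch, not part of DataX or Hive: writer types are all STRING as in the job file, `id` is the only non-string Hive column, and the generated DDL omits the COMMENT clauses.

```python
# Column names in table order, as listed in the job file and the DDL.
COLUMNS = [
    "id", "airlinelogo", "airlineshortcompany", "arractcross", "arracttime",
    "arrairport", "arrcode", "arrontimerate", "arrplancross", "arrplantime",
    "arrterminal", "checkintable", "checkintablewidth", "depactcross",
    "depacttime", "depairport", "depcode", "depplancross", "depplantime",
    "depterminal", "flightno", "flightstate", "localdate", "mainflightno",
    "shareflag", "statecolor",
]

# Hive types that differ from the default `string` (only `id` in this table).
HIVE_TYPES = {"id": "int"}


def writer_columns(columns):
    """Build the hdfswriter `column` list, typing every field as STRING."""
    return [{"name": c, "type": "STRING"} for c in columns]


def hive_column_defs(columns, types):
    """Build the column definitions for the CREATE TABLE statement."""
    return ",\n".join(f"  `{c}` {types.get(c, 'string')}" for c in columns)


def set_writer_columns(job, columns):
    """Overwrite the writer column list in every content entry of a parsed job dict."""
    for entry in job["job"]["content"]:
        entry["writer"]["parameter"]["column"] = writer_columns(columns)
    return job
```

`print(json.dumps(writer_columns(COLUMNS), indent=2))` prints a list to paste into the job file, and `set_writer_columns` applies the same list to a job dict loaded with `json.load`.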

5. Run the job

From the current directory, run the following command:

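/opt/conf/datax-20230301/datax/bin/datax.py /opt/conf/datax-20230301/datax/job/job_air_data_source_mysql_hdfs.json

This starts the data synchronization job; when it finishes, the result looks like the figure below. To check whether the data files now exist on HDFS, run the following command and look at its output: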

hadoop fs -ls hdfs://master:9820/user/hive/warehouse/air_data.db/air_data_source

This completes the configuration of the DataX job that syncs MySQL data to HDFS.
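The run and verification steps can also be scripted. The sketch below is an illustration under stated assumptions: the helper names are hypothetical, the `hadoop` CLI must be on PATH, the listing parser assumes the usual eight-column `hadoop fs -ls` layout (permissions, replication, owner, group, size, date, time, path), and `read_delimited_gz` reads a file you have first copied locally, for example with `hadoop fs -get`. Since the job runs with 3 channels, the target directory may contain more than one file.

```python
import gzip
import subprocess
from typing import List, Tuple


def run_datax(datax_py: str, job_file: str) -> int:
    """Run a DataX job the way the command above does (executing datax.py
    directly, which relies on the execute permission granted earlier).
    Returns the process exit code."""
    return subprocess.run([datax_py, job_file]).returncode


def parse_hdfs_ls(output: str) -> List[Tuple[str, int]]:
    """Return (path, size_in_bytes) for each regular file in `hadoop fs -ls` output.

    The "Found N items" header and directory entries (permissions
    starting with 'd') are skipped.
    """
    files = []
    for line in output.splitlines():
        parts = line.split(maxsplit=7)  # keep any spaces inside the path
        if len(parts) != 8 or not parts[0].startswith("-"):
            continue
        files.append((parts[7], int(parts[4])))
    return files


def list_hdfs_files(hdfs_dir: str) -> List[Tuple[str, int]]:
    """Run `hadoop fs -ls` on `hdfs_dir`; raises CalledProcessError on failure."""
    result = subprocess.run(
        ["hadoop", "fs", "-ls", hdfs_dir],
        capture_output=True, text=True, check=True,
    )
    return parse_hdfs_ls(result.stdout)


def read_delimited_gz(local_path: str, delimiter: str = "\x01") -> List[List[str]]:
    """Split each line of a local gzip text file on `delimiter`.

    The default is Ctrl-A (U+0001): the job's fieldDelimiter, and also the
    default field separator of Hive's LazySimpleSerDe.
    """
    with gzip.open(local_path, "rt", encoding="utf-8") as fh:
        return [line.rstrip("\n").split(delimiter) for line in fh]
```

For example, `list_hdfs_files("hdfs://master:9820/user/hive/warehouse/air_data.db/air_data_source")` returns an empty list if the directory exists but the job wrote nothing, and raises if the directory does not exist.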
