最新要闻

- 热文:华硕全球首秀四频段Wi-Fi 7路由器:峰值下载2.5万兆

- 滚动:惠普新款EliteBook 1040笔记本发布:13代酷睿、2K 120Hz屏

- 天天视点!为什么一个病毒株传着传着就没了?

- Redmi K40S 12+256G顶配版不到1900元:骁龙870和OIS都有

- 各大新能源车企年度KPI出炉 特斯拉未达标 比亚迪称王

- 每日时讯!酷安最火骁龙8系手机诞生!一加11酷安热度第一

- 时隔两年 国美真快乐App重新更名国美

- 焦点要闻:加油被惊喜到!一加11不杀后台:前一天打开的APP第二天还在

- 温子仁恐怖片新作《梅根》 拯救了北美院线一月票房

- 天天热点评!被假货逼疯的劳力士:终于坐不住了

- 全球快资讯:要的就是销量!特斯拉在新加坡优惠近7万

- 全球新资讯:豆瓣9.5高分动画!有家长炮轰《中国奇谭》画风吓哭孩子 网友不乐意了

- 加了国六B汽油 排气管喷水?网友犀利吐槽:我加了拉肚子

- 热讯:峰米S5 Rolling投影仪发布:360度可旋转支架 还能当音箱用

- 世界热推荐:米哈游创始人之一参与打造:国产独立游戏《微光之镜》今日发售

- 即时看!奥迪RS e-tron GT很好 但它仍是大众体系里最拧巴的产品

手机

iphone11大小尺寸是多少?苹果iPhone11和iPhone13的区别是什么?

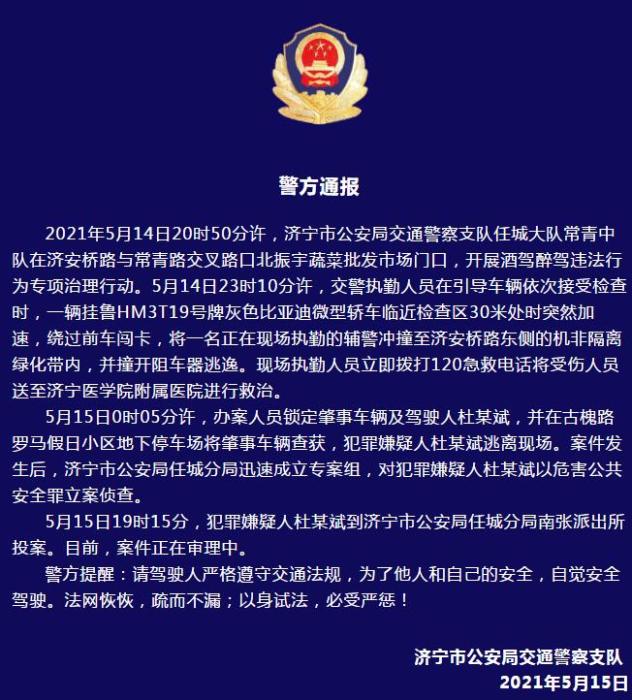

警方通报辅警执法直播中被撞飞:犯罪嫌疑人已投案

- iphone11大小尺寸是多少?苹果iPhone11和iPhone13的区别是什么?

- 警方通报辅警执法直播中被撞飞:犯罪嫌疑人已投案

- 男子被关545天申国赔:获赔18万多 驳回精神抚慰金

- 3天内26名本土感染者,辽宁确诊人数已超安徽

- 广西柳州一男子因纠纷杀害三人后自首

- 洱海坠机4名机组人员被批准为烈士 数千干部群众悼念

家电

ceph-3

s3

https://aws.amazon.com/cn/s3/

How it works

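Amazon Simple Storage Service (Amazon S3) is an object storage service that offers industry-leading scalability, data availability, security, and performance. Customers of all sizes and industries can use it to store and protect any amount of data for virtually any use case, such as data lakes, cloud-native applications, and mobile apps. With cost-effective storage classes and easy-to-use management features, you can optimize costs, organize data, and configure fine-grained access controls to meet specific business, organizational, and compliance requirements.

RadosGW object storage gateway overview:

RadosGW is one way of providing object storage (OSS, Object Storage Service) access. The RADOS gateway, also called the Ceph Object Gateway, RadosGW, or RGW, is a service that lets clients access a Ceph cluster through standard object storage APIs; it supports both the AWS S3 and the Swift APIs. Starting with Ceph 0.80 (Firefly), it uses the Civetweb web server (https://github.com/civetweb/civetweb) to answer API requests. Clients talk to RGW over HTTP/HTTPS through a RESTful API, and RGW talks to the Ceph cluster through librados. A client authenticates to RGW as an RGW user via the S3 or Swift API, and the RGW gateway then authenticates to Ceph storage on the user's behalf using cephx.

S3 was launched by Amazon in 2006; the name stands for Simple Storage Service. S3 defined object storage and is its de facto standard: in a sense, S3 is object storage and object storage is S3. It dominates the object storage market, and later object storage systems follow its model.

RadosGW storage characteristics:

- Data is stored as objects through the object storage gateway; besides the data itself, each object carries its own metadata.
- Objects are retrieved by object ID. They cannot be accessed through a mounted file system by path and file name; access is only through the API or through third-party clients (which are themselves wrappers around the API).
- Objects are not stored in a hierarchical directory tree but in a flat namespace. Amazon S3 calls this flat namespace a bucket; Swift calls it a container.
- Neither buckets nor containers can be nested (a bucket cannot contain another bucket).
- Access to a bucket must be authorized; one account can be granted access to multiple buckets, with different permissions on each.
- Easy to scale out, with fast data retrieval.
- Clients cannot mount it, and must specify the object name when accessing data.
- Not well suited to workloads that modify and delete files very frequently.

Ceph uses buckets as storage containers to store object data and to isolate users from each other. Data lives in buckets, and user permissions are granted per bucket, so a user can be given different permissions on different buckets.

Bucket characteristics:

- A bucket is a container for objects, and every object must belong to a bucket. Bucket attributes such as region, access permissions, and lifecycle can be set and changed, and they apply to every object in the bucket, so different management policies can be implemented by creating different buckets.
- A bucket is flat internally: there are no file-system concepts such as directories, and every object belongs directly to its bucket.
- Each user can own multiple buckets.
- A bucket name must be globally unique within the object storage service and cannot be changed after the bucket is created.
- There is no limit on the number of objects in a bucket.

Bucket naming rules (https://docs.amazonaws.cn/AmazonS3/latest/userguide/bucketnamingrules.html):

- Only lowercase letters, digits, and hyphens (-) may be used (AWS also accepts dots, but they are best avoided).
- Names must begin and end with a lowercase letter or digit.
- Names must be 3 to 63 bytes long.
- Names must not be formatted as an IP address.
- Names must be globally unique.

(Figure: RadosGW architecture diagram)

(Figure: RadosGW logical diagram)

Object storage access models compared:

- Amazon S3: provides user, bucket, and object, representing users, buckets, and objects. A bucket belongs to a user; per-user access permissions can be set on the namespaces of different buckets, and different users may be allowed to access the same bucket.
- OpenStack Swift: provides user, container, and object, corresponding to users, buckets, and objects. It also adds a parent component above user, the account, which represents a project or tenant (an OpenStack user). One account can contain one or more users, which share the same set of containers, and the account provides the namespace for those containers.
- RadosGW: provides user, subuser, bucket, and object. Its user corresponds to the S3 user, and its subuser corresponds to the Swift user. Neither user nor subuser provides a namespace for buckets, so buckets belonging to different users cannot have the same name. Since the Jewel release, RadosGW has offered an optional tenant component that provides a namespace for users and buckets.

RadosGW uses ACLs to give different users different permissions, for example:

- Read: read permission
- Write: write permission
- Readwrite: read and write permission
- full-control: full control

Deploying the RadosGW service:

Deploy ceph-mgr1 and ceph-mgr2 as a highly available RadosGW service.

Install and initialize the radosgw service.

Ubuntu:

root@ceph-mgr1:~# apt install radosgw

root@ceph-mgr2:~# apt install radosgw

CentOS:

[root@ceph-mgr1 ~]# yum install ceph-radosgw

[root@ceph-mgr2 ~]# yum install ceph-radosgw

Once started, RGW listens on port 7480 by default.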


cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy rgw create ceph-mgr2

root@ceph-client:~# apt install keepalived

root@ceph-client:~# cp /usr/share/doc/keepalived/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

root@ceph-client:~# apt install haproxy

root@ceph-client:~# cat /etc/haproxy/haproxy.cfg

Access the VIP.

Access via the domain name.

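Verify the radosgw service status.

Verify the radosgw service process.

RadosGW pool types:

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool ls

View the default radosgw pool information (this fetches the settings of the default zone):

cephadmin@ceph-deploy:~/ceph-cluster$ radosgw-admin zone get --rgw-zone=default --rgw-zonegroup=default

RGW pool information:

- .rgw.root: holds realm information, such as zones and zonegroups.
- default.rgw.log: stores log information of various kinds.
- default.rgw.control: system control pool; when data is updated, it is used to notify other RGW instances to refresh their caches.
- default.rgw.meta: metadata pool. Different RADOS objects are kept in separate namespaces, including users.uid (user UIDs and their bucket mappings), users.keys (user keys), users.email (user email addresses), users.swift (user subusers), and root (buckets).
- default.rgw.buckets.index: stores the bucket-to-object index.
- default.rgw.buckets.data: stores object data.
- default.rgw.buckets.non-ec: pool for additional data information.
- default.rgw.users.uid: pool that stores user information.
- default.rgw.data.root: stores bucket metadata, corresponding to the RGWBucketInfo structure, such as the bucket name, bucket ID, and data_pool.

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool get default.rgw.buckets.data crush_rule
Error ENOENT: unrecognized pool "default.rgw.buckets.data"

ENOENT means the pool does not exist, not that permission is missing; RGW typically creates default.rgw.buckets.data only when data is first written to a bucket.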

View the default replica count:

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool get default.rgw.buckets.data size
Error ENOENT: unrecognized pool "default.rgw.buckets.data"

View the default PG count:

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd pool get default.rgw.buckets.data pgp_num
Error ENOENT: unrecognized pool "default.rgw.buckets.data"

RGW pool functions:

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd lspools

Verify the RGW zone information:

cephadmin@ceph-deploy:~/ceph-cluster$ radosgw-admin zone get --rgw-zone=default
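The `zone get` output is JSON, and many of its pool fields use a `pool:namespace` form (this is how the users.keys, users.uid, and root namespaces described above live inside default.rgw.meta). The following is a minimal sketch, assuming field names such as `domain_root`, `*_pool`, and `placement_pools` as they appear in recent releases; the sample in the usage block is a trimmed, hypothetical excerpt, so check the field names against your own output.

```python
import json
from collections import defaultdict


def pools_by_namespace(zone_json: str) -> dict:
    """Group the pool references in `radosgw-admin zone get` output by RADOS pool.

    Values look like "default.rgw.meta:users.keys" (pool:namespace) or just
    "default.rgw.control"; an empty string stands for the default namespace.
    """
    zone = json.loads(zone_json)
    grouped = defaultdict(list)

    def add(ref: str) -> None:
        pool, _, namespace = ref.partition(":")
        grouped[pool].append(namespace)

    # Top-level fields such as control_pool, log_pool, user_keys_pool, domain_root.
    for key, value in zone.items():
        if (key.endswith("_pool") or key == "domain_root") and isinstance(value, str) and value:
            add(value)

    # Placement targets name the bucket index, data and extra pools.
    for target in zone.get("placement_pools", []):
        val = target.get("val", {})
        for field in ("index_pool", "data_extra_pool"):
            if val.get(field):
                add(val[field])
        for storage_class in val.get("storage_classes", {}).values():
            if storage_class.get("data_pool"):
                add(storage_class["data_pool"])

    return dict(grouped)


if __name__ == "__main__":
    # Trimmed, hypothetical excerpt of `radosgw-admin zone get` output.
    sample = """{
      "domain_root": "default.rgw.meta:root",
      "control_pool": "default.rgw.control",
      "user_keys_pool": "default.rgw.meta:users.keys",
      "placement_pools": [{"key": "default-placement", "val": {
          "index_pool": "default.rgw.buckets.index",
          "storage_classes": {"STANDARD": {"data_pool": "default.rgw.buckets.data"}},
          "data_extra_pool": "default.rgw.buckets.non-ec"}}]
    }"""
    for pool, namespaces in pools_by_namespace(sample).items():
        print(pool, namespaces)
```

Piping the real command output into the function (for example via `subprocess.run([...], capture_output=True, text=True).stdout`) gives a per-pool view that can be compared with `ceph osd pool ls`.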

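Accessing the radosgw service.

RadosGW HTTP high availability.

Custom HTTP port:

The configuration file can be edited on the ceph-deploy server and then pushed to all nodes, or each radosgw server can be edited individually to the same settings; either way, restart the RGW service afterwards. See https://docs.ceph.com/en/latest/radosgw/frontends/

[root@ceph-mgr2 ~]# vim /etc/ceph/ceph.conf

# Append a section with custom settings for the current node at the end:
[client.rgw.ceph-mgr2]
rgw_host = ceph-mgr2
rgw_frontends = civetweb port=9900

# Restart the service
[root@ceph-mgr2 ~]# systemctl restart ceph-radosgw@rgw.ceph-mgr2.service

HAProxy also needs its backend port changed to match, followed by a restart.

RadosGW HTTPS: generate a signed certificate on the RGW node and configure radosgw to enable SSL.

Self-signed certificate:

root@ceph-mgr2:~# cd /etc/ceph/
root@ceph-mgr2:/etc/ceph# mkdir certs
root@ceph-mgr2:/etc/ceph# cd certs/
root@ceph-mgr2:/etc/ceph/certs# openssl genrsa -out civetweb.key 2048
Generating RSA private key, 2048 bit long modulus (2 primes)
............+++++
.....+++++
e is 65537 (0x010001)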

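root@ceph-mgr2:/etc/ceph/certs# cd /root
root@ceph-mgr2:~# openssl rand -writerand .rnd
root@ceph-mgr2:~# cd -
/etc/ceph/certs
root@ceph-mgr2:/etc/ceph/certs# openssl req -new -x509 -key civetweb.key -out civetweb.crt -subj "/CN=rgw.awen.com"

(`openssl rand -writerand .rnd` creates the /root/.rnd random seed file that some OpenSSL 1.1.1 builds otherwise complain about when running `openssl req`.)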

root@ceph-mgr2:/etc/ceph/certs# cat civetweb.key civetweb.crt > civetweb.pem
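Civetweb's `ssl_certificate` option expects a single PEM file that holds both the private key and the certificate, which is why the two files are concatenated. A small stdlib sketch for sanity-checking such a bundle before restarting RGW; the function name is hypothetical, and it only looks for PEM markers rather than validating the cryptography.

```python
import re

# "-----BEGIN PRIVATE KEY-----" (PKCS#8, the OpenSSL 3 default) or the older
# "-----BEGIN RSA PRIVATE KEY-----" written by `openssl genrsa` in OpenSSL 1.1.
KEY_MARKER = re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----")
CERT_MARKER = re.compile(r"-----BEGIN CERTIFICATE-----")


def pem_bundle_problems(pem_text: str) -> list:
    """Return a list of problems; an empty list means both blocks are present."""
    problems = []
    if not KEY_MARKER.search(pem_text):
        problems.append("no private key block")
    if not CERT_MARKER.search(pem_text):
        problems.append("no certificate block")
    return problems
```

Usage: `pem_bundle_problems(pathlib.Path("/etc/ceph/certs/civetweb.pem").read_text())` should return an empty list for the file built above.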

SSL configuration:

root@ceph-mgr2:/etc/ceph/certs# vim /etc/ceph/ceph.conf
[client.rgw.ceph-mgr2]
rgw_host = ceph-mgr2
rgw_frontends = "civetweb port=9900+9443s ssl_certificate=/etc/ceph/certs/civetweb.pem"

Port 9900 serves plain HTTP, and 9443 (with the trailing s) serves HTTPS.
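In the civetweb frontend's `port=` option, several endpoints are joined with `+`, an optional `address:` prefix binds a specific address, and a trailing `s` marks an SSL port (per the Ceph frontends documentation linked earlier). The sketch below parses such a value so the resulting listeners are explicit; it deliberately ignores any other civetweb port suffixes, which is a simplifying assumption.

```python
from typing import List, NamedTuple


class Endpoint(NamedTuple):
    address: str  # "" means all addresses
    port: int
    ssl: bool


def parse_civetweb_ports(spec: str) -> List[Endpoint]:
    """Parse a civetweb port= value such as "9900+9443s" or "127.0.0.1:8000+443s"."""
    endpoints = []
    for item in spec.split("+"):
        item = item.strip()
        if not item:
            continue
        ssl = item.endswith("s")  # trailing "s" marks an HTTPS listener
        if ssl:
            item = item[:-1]
        address, _, port = item.rpartition(":")  # rpartition keeps "[::1]" intact
        endpoints.append(Endpoint(address, int(port), ssl))
    return endpoints
```

For the configuration above, `parse_civetweb_ports("9900+9443s")` yields one plain listener on 9900 and one SSL listener on 9443, which is what the HAProxy backends need to point at.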

root@ceph-mgr2:/etc/ceph/certs# systemctl restart ceph-radosgw@rgw.ceph-mgr2.service

Sync the RGW certificate and configuration to ceph-mgr1:

root@ceph-mgr2:/etc/ceph/certs# vim /etc/ceph/ceph.conf

root@ceph-mgr2:/etc/ceph# scp ceph.conf 10.4.7.137:/etc/ceph

[client.rgw.ceph-mgr1]
rgw_host = ceph-mgr1
rgw_frontends = "civetweb port=9900+9443s ssl_certificate=/etc/ceph/certs/civetweb.pem"

[client.rgw.ceph-mgr2]
rgw_host = ceph-mgr2
rgw_frontends = "civetweb port=9900+9443s ssl_certificate=/etc/ceph/certs/civetweb.pem"

root@ceph-mgr2:/etc/ceph/certs# scp * 10.4.7.137:/etc/ceph/certs

root@ceph-client:~# vi /etc/haproxy/haproxy.cfg

root@ceph-mgr2:/etc/ceph# cat ceph.conf

root@ceph-mgr2:/etc/ceph# mkdir -p /var/log/radosgw/

root@ceph-mgr2:/etc/ceph# chown ceph.ceph /var/log/radosgw/ -R

root@ceph-mgr2:/etc/ceph# curl -k https://10.4.7.138:9443

Testing data reads and writes with a client (s3cmd): https://github.com/s3tools/s3cmd

root@ceph-mgr2:/etc/ceph# vi ceph.conf
root@ceph-mgr2:/etc/ceph# systemctl restart ceph-radosgw@rgw.ceph-mgr2.service

root@ceph-mgr1:/etc/ceph# vi ceph.conf

root@ceph-mgr1:/etc/ceph# systemctl restart ceph-radosgw@rgw.ceph-mgr1.service

Create an RGW account. s3cmd is a client tool and needs access keys; creating a user generates them:

cephadmin@ceph-deploy:~/ceph-cluster$ radosgw-admin user create --uid="user1" --display-name="user1"
"access_key": "RPYI716AKE8KPAPGYCZP",
"secret_key": "fzueWA7SgyZZY70bAia6VjEfkPIXrMPDDBNRVSsR"
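These two keys are what the client signs its requests with. The `.s3cfg` shown later has `signature_v2 = False`, so s3cmd signs with AWS Signature Version 4, whose signing key is an HMAC-SHA256 chain over the date, region, and service. A minimal sketch of that derivation using only the standard library; the region string is an assumption (whatever the client is configured to sign with), and building the canonical request and string-to-sign is left out.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def sigv4_signing_key(secret_key: str, date: str, region: str, service: str = "s3") -> bytes:
    """Derive the SigV4 signing key; `date` is YYYYMMDD."""
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


def sigv4_signature(secret_key: str, date: str, region: str, string_to_sign: str) -> str:
    """Hex signature that ends up in the Authorization header."""
    key = sigv4_signing_key(secret_key, date, region)
    return hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()
```

The secret key itself never goes over the wire: RGW recomputes the same chain from the secret it stored for user1 and compares signatures.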

Install the s3cmd client:

cephadmin@ceph-deploy:~/ceph-cluster$ apt-cache madison s3cmd
cephadmin@ceph-deploy:~/ceph-cluster$ sudo apt install s3cmd
cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd --help

Configure the s3cmd client environment.

Add name resolution for s3cmd so that the domain resolves to an RGW server:

cephadmin@ceph-deploy:~/ceph-cluster$ sudo vim /etc/hosts
10.4.7.138 rgw.awen.com

Generate the configuration file interactively; it is saved in the home directory of the user who runs the command:

root@ceph-deploy:~# s3cmd --configure
cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd --configure

Enter new values or accept defaults in brackets with Enter.
Refer to user manual for detailed description of all options.

Access key and Secret key are your identifiers for Amazon S3. Leave them empty for using the env variables.
Access Key: RPYI716AKE8KPAPGYCZP
Secret Key: fzueWA7SgyZZY70bAia6VjEfkPIXrMPDDBNRVSsR
Default Region [US]:

Use "s3.amazonaws.com" for S3 Endpoint and not modify it to the target Amazon S3.
S3 Endpoint [s3.amazonaws.com]: rgw.awen.com:9900

Use "%(bucket)s.s3.amazonaws.com" to the target Amazon S3. "%(bucket)s" and "%(location)s" vars can be used
if the target S3 system supports dns based buckets.
DNS-style bucket+hostname:port template for accessing a bucket [%(bucket)s.s3.amazonaws.com]: rgw.awen.com:9900/%(bucket)

Encryption password is used to protect your files from reading
by unauthorized persons while in transfer to S3
Encryption password:
Path to GPG program [/usr/bin/gpg]:

When using secure HTTPS protocol all communication with Amazon S3
servers is protected from 3rd party eavesdropping. This method is
slower than plain HTTP, and can only be proxied with Python 2.7 or newer
Use HTTPS protocol [Yes]: No

On some networks all internet access must go through a HTTP proxy.
Try setting it here if you can't connect to S3 directly
HTTP Proxy server name:

New settings:
  Access Key: RPYI716AKE8KPAPGYCZP
  Secret Key: fzueWA7SgyZZY70bAia6VjEfkPIXrMPDDBNRVSsR
  Default Region: US
  S3 Endpoint: rgw.awen.com:9900
  DNS-style bucket+hostname:port template for accessing a bucket: rgw.awen.com:9900/%(bucket)
  Encryption password:
  Path to GPG program: /usr/bin/gpg
  Use HTTPS protocol: False
  HTTP Proxy server name:
  HTTP Proxy server port: 0

Test access with supplied credentials? [Y/n] y
Please wait, attempting to list all buckets...
Success. Your access key and secret key worked fine :-)

Now verifying that encryption works...
Not configured. Never mind.

Save settings? [y/N] y
Configuration saved to "/home/cephadmin/.s3cfg"

Verify the credentials file:

cephadmin@ceph-deploy:~/ceph-cluster$ cat /home/cephadmin/.s3cfg
[default]
access_key = RPYI716AKE8KPAPGYCZP
access_token =
add_encoding_exts =
add_headers =
bucket_location = US
ca_certs_file =
cache_file =
check_ssl_certificate = True
check_ssl_hostname = True
cloudfront_host = cloudfront.amazonaws.com
default_mime_type = binary/octet-stream
delay_updates = False
delete_after = False
delete_after_fetch = False
delete_removed = False
dry_run = False
enable_multipart = True
encoding = UTF-8
encrypt = False
expiry_date =
expiry_days =
expiry_prefix =
follow_symlinks = False
force = False
get_continue = False
gpg_command = /usr/bin/gpg
gpg_decrypt = %(gpg_command)s -d --verbose --no-use-agent --batch --yes --passphrase-fd %(passphrase_fd)s -o %(output_file)s %(input_file)s
gpg_encrypt = %(gpg_command)s -c --verbose --no-use-agent --batch --yes --passphrase-fd %(passphrase_fd)s -o %(output_file)s %(input_file)s
gpg_passphrase =
guess_mime_type = True
host_base = rgw.awen.com:9900
host_bucket = rgw.awen.com:9900/%(bucket)
human_readable_sizes = False
invalidate_default_index_on_cf = False
invalidate_default_index_root_on_cf = True
invalidate_on_cf = False
kms_key =
limit = -1
limitrate = 0
list_md5 = False
log_target_prefix =
long_listing = False
max_delete = -1
mime_type =
multipart_chunk_size_mb = 15
multipart_max_chunks = 10000
preserve_attrs = True
progress_meter = True
proxy_host =
proxy_port = 0
put_continue = False
recursive = False
recv_chunk = 65536
reduced_redundancy = False
requester_pays = False
restore_days = 1
restore_priority = Standard
secret_key = fzueWA7SgyZZY70bAia6VjEfkPIXrMPDDBNRVSsR
send_chunk = 65536
server_side_encryption = False
signature_v2 = False
signurl_use_https = False
simpledb_host = sdb.amazonaws.com
skip_existing = False
socket_timeout = 300
stats = False
stop_on_error = False
storage_class =
urlencoding_mode = normal
use_http_expect = False
use_https = False
use_mime_magic = True
verbosity = WARNING
website_endpoint = http://%(bucket)s.s3-website-%(location)s.amazonaws.com/
website_error =
website_index = index.html
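The file above is plain INI, so other tools can reuse the same endpoint and credentials. A minimal sketch with `configparser` (helper names are hypothetical); note that interpolation must be disabled, because values such as `gpg_decrypt` contain `%(...)s` placeholders meant for s3cmd itself.

```python
import configparser


def load_s3cfg(text: str, section: str = "default") -> dict:
    """Read an s3cmd config file into a plain dict of strings."""
    # interpolation=None: values like gpg_decrypt contain "%(gpg_command)s"
    # placeholders for s3cmd; configparser's default BasicInterpolation would
    # try to expand them and fail on names such as passphrase_fd.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    return dict(parser[section])


def endpoint_url(cfg: dict) -> str:
    """Base URL the client talks to, built from use_https and host_base."""
    scheme = "https" if cfg.get("use_https", "False").strip().lower() == "true" else "http"
    return f"{scheme}://{cfg['host_base']}"
```

Usage: `endpoint_url(load_s3cfg(pathlib.Path.home().joinpath(".s3cfg").read_text()))` returns `http://rgw.awen.com:9900` for the configuration shown.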

Verify data uploads with the s3cmd command-line client.

Create buckets:

cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd mb s3://magedu
cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd mb s3://css
cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd la

Upload data:

cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd put 2609178_linux.jpg s3://images
upload: "2609178_linux.jpg" -> "s3://images/2609178_linux.jpg" [1 of 1]
 23839 of 23839 100% in 0s 1306.78 kB/s done
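Before running `s3cmd mb`, a name can be checked against the bucket naming rules listed earlier. A minimal stdlib sketch (the helper name is hypothetical): it follows the stricter rule set from that list and accepts dots only when `allow_dots=True`. Global uniqueness cannot be checked locally, so RGW still has the final say.

```python
import ipaddress
import re

# Starts and ends with a lowercase letter or digit; only a-z, 0-9, "-" and "." inside.
_SHAPE = re.compile(r"[a-z0-9][a-z0-9.-]*[a-z0-9]")


def bucket_name_problems(name: str, allow_dots: bool = False) -> list:
    """Return the naming rules `name` breaks; an empty list means it looks valid."""
    problems = []
    if not 3 <= len(name.encode("utf-8")) <= 63:
        problems.append("length must be 3-63 bytes")
    if not _SHAPE.fullmatch(name):
        problems.append("only a-z, 0-9 and '-', starting and ending with a letter or digit")
    if "." in name and not allow_dots:
        problems.append("dots are not allowed")
    try:
        ipaddress.IPv4Address(name)
        problems.append("must not look like an IP address")
    except ValueError:
        pass  # not an IPv4 address, which is what we want
    return problems
```

All the bucket names used in this walkthrough (magedu, css, images, video, mybucket) pass the check.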

Verify the data:

cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd ls s3://css
2023-01-08 04:27 23839 s3://css/2609178_linux.jpg

Verify downloading a file:

cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd get s3://images/awen/2609178_linux.jpg /tmp/
download: "s3://images/awen/2609178_linux.jpg" -> "/tmp/2609178_linux.jpg" [1 of 1]
 23839 of 23839 100% in 0s 2.00 MB/s done

Delete a file:

cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd rm s3://images/awen/1.jdg
delete: "s3://images/awen/1.jdg"
cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd ls s3://images/awen/
2023-01-08 05:05 23839 s3://images/awen/2609178_linux.jpg

Remove a bucket:

cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd rb s3://images/
Bucket "s3://images/" removed

Case study: static/dynamic content separation and short-video hosting with Nginx + RGW

cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd info s3://magedu

cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd mb s3://video
Bucket "s3://video/" created
cephadmin@ceph-deploy:~/ceph-cluster$ vi video-bucket_policy

{
  "Version": "2012-10-17",
  "Statement": [{
    "Effect": "Allow",
    "Principal": "*",
    "Action": "s3:GetObject",
    "Resource": [
      "arn:aws:s3:::mybucket/*"
    ]
  }]
}

cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd setpolicy video-bucket_policy s3://mybucket
s3://mybucket/: Policy updated

cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd put 1.jpg s3://mybucket/
upload: "1.jpg" -> "s3://mybucket/1.jpg" [1 of 1]
 23839 of 23839 100% in 0s 1055.36 kB/s done

The Resource ARN must name the bucket the policy is attached to, so for the video bucket the policy file is changed to point at video:

cephadmin@ceph-deploy:~/ceph-cluster$ vi video-bucket_policy

{
  "Version": "2012-10-17",
  "Statement": [{
    "Effect": "Allow",
    "Principal": "*",
    "Action": "s3:GetObject",
    "Resource": [
      "arn:aws:s3:::video/*"
    ]
  }]
}

Apply the policy:

cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd setpolicy video-bucket_policy s3://video
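Because the policy file has to be edited per bucket, generating it avoids a Resource that names the wrong bucket. A minimal sketch (the function name is hypothetical) that produces the same public-read policy as above for any bucket:

```python
import json


def public_read_policy(bucket: str) -> str:
    """Bucket policy allowing anonymous s3:GetObject on every object in `bucket`."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": "*",
            "Action": "s3:GetObject",
            # The ARN must name the same bucket the policy is applied to.
            "Resource": [f"arn:aws:s3:::{bucket}/*"],
        }],
    }
    return json.dumps(policy, indent=2)
```

Usage: `pathlib.Path("video-bucket_policy").write_text(public_read_policy("video"))`, then `s3cmd setpolicy video-bucket_policy s3://video` as above.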

Upload the videos:

cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd put 1.mp4 s3://video
upload: "1.mp4" -> "s3://video/1.mp4" [part 1 of 2, 15MB] [1 of 1]
 15728640 of 15728640 100% in 8s 1789.92 kB/s done
upload: "1.mp4" -> "s3://video/1.mp4" [part 2 of 2, 9MB] [1 of 1]
 9913835 of 9913835 100% in 0s 24.97 MB/s done
cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd put 2.mp4 s3://video
upload: "2.mp4" -> "s3://video/2.mp4" [1 of 1]
 2463765 of 2463765 100% in 0s 25.01 MB/s done
cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd put 3.mp4 s3://video
upload: "3.mp4" -> "s3://video/3.mp4" [1 of 1]
 591204 of 591204 100% in 0s 10.75 MB/s done
cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd ls s3://video
2023-01-08 08:02 25642475 s3://video/1.mp4
2023-01-08 08:02 2463765 s3://video/2.mp4
2023-01-08 08:02 591204 s3://video/3.mp4
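The two-part upload of 1.mp4 follows from `multipart_chunk_size_mb = 15` in the `.s3cfg`: 15 MiB is 15728640 bytes, and 25642475 - 15728640 = 9913835 bytes remain for the second part. A sketch of that arithmetic, assuming that files no larger than one chunk go up in a single PUT, as 2.mp4 and 3.mp4 did:

```python
MIB = 1024 * 1024


def multipart_parts(size: int, chunk_mb: int = 15) -> list:
    """Split `size` bytes into parts of `chunk_mb` MiB; the last part holds the remainder."""
    chunk = chunk_mb * MIB
    if size <= chunk:
        return [size]  # assumption: small files are sent in one PUT
    full, rest = divmod(size, chunk)
    return [chunk] * full + ([rest] if rest else [])
```

`multipart_parts(25642475)` gives `[15728640, 9913835]`, matching the two upload lines for 1.mp4.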

View the bucket information:

cephadmin@ceph-deploy:~/ceph-cluster$ s3cmd info s3://video

cephadmin@ceph-deploy:~/ceph-cluster$ sudo chown -R cephadmin.cephadmin 1.mp4 2.mp4 3.mp4

Configure the reverse proxy:

root@ceph-client-1:~# apt install iproute2 ntpdate tcpdump telnet traceroute nfs-kernel-server nfs-common lrzsz tree openssl libssl-dev libpcre3 libpcre3-dev zlib1g-dev gcc make openssh-server iotop unzip zip

root@ceph-client-1:~# cd /usr/local/src/
root@ceph-client-1:/usr/local/src# wget https://nginx.org/download/nginx-1.22.0.tar.gz

root@ceph-client-1:/usr/local/src# tar -xzvf nginx-1.22.0.tar.gz

root@ceph-client-1:/usr/local/src# cd nginx-1.22.0/
root@ceph-client-1:/usr/local/src/nginx-1.22.0# mkdir -p /apps/nginx

root@ceph-client-1:/usr/local/src/nginx-1.22.0# ./configure --prefix=/apps/nginx \
--user=nginx \
--group=nginx \
--with-http_ssl_module \
--with-http_v2_module \
--with-http_realip_module \
--with-http_stub_status_module \
--with-http_gzip_static_module \
--with-pcre \
--with-stream \
--with-stream_ssl_module \
--with-stream_realip_module

root@ceph-client-1:/usr/local/src/nginx-1.22.0# make && make install

root@ceph-client-1:/apps/nginx/conf# cat nginx.conf

user root;
worker_processes 1;
events {
    worker_connections 1024;
}
http {
    include mime.types;
    default_type application/octet-stream;
    sendfile on;
    keepalive_timeout 65;

    upstream video {
        server 10.4.7.138:9900;
        server 10.4.7.137:9900;
    }

    server {
        listen 80;
        server_name rgw.ygc.cn;
        proxy_buffering off;
        proxy_set_header Host $host;
        proxy_set_header X-Forwarded-For $remote_addr;

        location / {
            root html;
            index index.html index.htm;
        }
        location ~* \.(mp4|avi)$ {
            proxy_pass http://video;
        }
        error_page 500 502 503 504 /50x.html;
        location = /50x.html {
            root html;
        }
    }
}

root@ceph-client-1:/apps/nginx/conf# /apps/nginx/sbin/nginx -t
nginx: the configuration file /apps/nginx/conf/nginx.conf syntax is ok
nginx: configuration file /apps/nginx/conf/nginx.conf test is successful
root@ceph-client-1:/apps/nginx/conf# /apps/nginx/sbin/nginx

root@ceph-client:~# apt install tomcat9

root@ceph-client:~# cd /var/lib/tomcat9/webapps/ROOT

root@ceph-client:/var/lib/tomcat9/webapps/ROOT# mkdir app
root@ceph-client:/var/lib/tomcat9/webapps/ROOT# cd app/
root@ceph-client:/var/lib/tomcat9/webapps/ROOT/app# echo "Hello Java APP" > index.html
root@ceph-client:/var/lib/tomcat9/webapps/ROOT# mkdir app2

root@ceph-client:/var/lib/tomcat9/webapps/ROOT/app2# vi index.jsp
java app

root@ceph-client:/var/lib/tomcat9/webapps/ROOT/app2# systemctl restart tomcat9

root@ceph-client-1:/apps/nginx/conf# vi nginx.conf

upstream tomcat { server 10.4.7.132:8080; }

location /app2 { proxy_pass http://tomcat; }
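With both upstreams in place, nginx decides per request which `location` handles it, and that is what separates videos (RGW), dynamic pages (Tomcat), and static files. The sketch below models nginx's documented selection order in simplified form: an exact `=` match wins, otherwise regex locations are tried in configuration order, otherwise the longest matching prefix is used (`^~` and nested locations are ignored). The backend labels are illustrative.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class Location:
    modifier: str  # "=" exact, "" plain prefix, "~*" case-insensitive regex
    pattern: str
    backend: str   # label for where matching requests go


def select_location(uri: str, locations: List[Location]) -> str:
    """Simplified nginx location selection (no ^~, no nested locations)."""
    # 1. An exact "=" match wins immediately.
    for loc in locations:
        if loc.modifier == "=" and uri == loc.pattern:
            return loc.backend
    # 2. Remember the longest matching plain prefix.
    prefixes = [l for l in locations if l.modifier == "" and uri.startswith(l.pattern)]
    longest = max(prefixes, key=lambda l: len(l.pattern), default=None)
    # 3. Regex locations are tried in configuration order; the first match wins.
    for loc in locations:
        if loc.modifier == "~*" and re.search(loc.pattern, uri, re.IGNORECASE):
            return loc.backend
    # 4. Otherwise fall back to the longest prefix.
    return longest.backend if longest else "404"


# The locations from the nginx.conf above.
LOCATIONS = [
    Location("", "/", "static files (root html)"),
    Location("~*", r"\.(mp4|avi)$", "upstream video (RGW)"),
    Location("=", "/50x.html", "error page"),
    Location("", "/app2", "upstream tomcat"),
]
```

So a request for `http://rgw.ygc.cn/video/1.mp4` goes to RGW, where (path-style) it addresses bucket `video`, key `1.mp4`, which the public-read policy allows; `/app2/index.jsp` goes to Tomcat. Note that `/app2/demo.mp4` would also go to RGW, because the regex beats a plain prefix.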

Enabling the dashboard plugin:

https://docs.ceph.com/en/mimic/mgr/
https://docs.ceph.com/en/latest/mgr/dashboard/
https://packages.debian.org/unstable/ceph-mgr-dashboard (version 15 has dependency problems that need to be resolved separately)

Ceph mgr is a multi-plugin (modular) component whose modules can be enabled or disabled individually. The following is done from the ceph-deploy server.

Newer releases require the dashboard package to be installed, and it must be installed on the mgr nodes; otherwise you get an error like this:

The following packages have unmet dependencies:
 ceph-mgr-dashboard : Depends: ceph-mgr (= 15.2.13-1~bpo10+1) but it is not going to be installed
E: Unable to correct problems, you have held broken packages.

root@ceph-mgr1:~# apt-cache madison ceph-mgr-dashboard
root@ceph-mgr1:~# apt install ceph-mgr-dashboard

# View help
root@ceph-mgr1:~# ceph mgr module -h

# List all modules
cephadmin@ceph-deploy:~/ceph-cluster$ ceph mgr module ls
# Enable the module
cephadmin@ceph-deploy:~/ceph-cluster$ ceph mgr module enable dashboard

After the module is enabled it still cannot be accessed: you have to either disable SSL or enable SSL, and specify the listen address.

Configure the dashboard module:

The Ceph dashboard is set up on the mgr nodes, and SSL can be turned on or off, as follows:

# Disable SSL
cephadmin@ceph-deploy:~/ceph-cluster$ ceph config set mgr mgr/dashboard/ssl false
# Set the dashboard listen address
cephadmin@ceph-deploy:~/ceph-cluster$ ceph config set mgr mgr/dashboard/ceph-mgr1/server_addr 10.4.7.137
# Set the dashboard listen port
cephadmin@ceph-deploy:~/ceph-cluster$ ceph config set mgr mgr/dashboard/ceph-mgr1/server_port 9009

It still had not come up after a while, so the mgr service was restarted here:

root@ceph-mgr1:~# systemctl restart ceph-mgr@ceph-mgr1.service

The first time the dashboard plugin is enabled it takes a while (a few minutes); then verify on the node where it was enabled.

If you see the following error:

Module "dashboard" has failed: error("No socket could be created",)

check whether the mgr service is running normally; restarting it once may help.

Verify dashboard access:

cephadmin@ceph-deploy:~/ceph-cluster$ touch pass.txt
cephadmin@ceph-deploy:~/ceph-cluster$ echo "12345678" > pass.txt
cephadmin@ceph-deploy:~/ceph-cluster$ ceph dashboard set-login-credentials awen -i pass.txt

********************************************************************************
*** WARNING: this command is deprecated. ***
*** Please use the ac-user-* related commands to manage users. ***
********************************************************************************

Username and password updated

Dashboard SSL:

To access the dashboard over SSL you need a signed certificate, which can be generated with the ceph command or with openssl. https://docs.ceph.com/en/latest/mgr/dashboard/

Ceph self-signed certificate:

# Generate the certificate:
[cephadmin@ceph-deploy ceph-cluster]$ ceph dashboard create-self-signed-cert
# Enable SSL:
[cephadmin@ceph-deploy ceph-cluster]$ ceph config set mgr mgr/dashboard/ssl true
# Restart the mgr services:
root@ceph-mgr1:~# systemctl restart ceph-mgr@ceph-mgr1
root@ceph-mgr2:~# systemctl restart ceph-mgr@ceph-mgr2
# Check the current dashboard status:
cephadmin@ceph-deploy:~/ceph-cluster$ ceph mgr services
{
    "dashboard": "https://10.4.7.137:8443/"
}

Monitoring Ceph nodes with Prometheus: https://prometheus.io/

Deploy Prometheus:

root@ceph-mgr2:~# mkdir /apps && cd /apps
root@ceph-mgr2:/apps# cd /usr/local/src/

root@ceph-mgr2:/usr/local/src# tar -xzvf prometheus-server-2.40.5-onekey-install.tar.gz

root@ceph-mgr2:/usr/local/src# bash prometheus-install.sh

root@ceph-mgr2:/usr/local/src# systemctl status prometheus

Access Prometheus.

Deploy node_exporter.

Install node_exporter on every node:

root@ceph-node1:~# cd /usr/local/src
root@ceph-node1:/usr/local/src# tar xf node-exporter-1.5.0-onekey-install.tar.gz

root@ceph-node1:/usr/local/src# bash node-exporter-1.5.0-onekey-install.sh

Add the node targets to Prometheus:

root@ceph-mgr2:/apps/prometheus# vi prometheus.yml
  - job_name: "ceph-node-data"
    static_configs:
      - targets: ["10.4.7.139:9100","10.4.7.140:9100","10.4.7.141:9100","10.4.7.142:9100"]

Monitoring Ceph services with Prometheus:

The Ceph manager ships a prometheus module that listens on port 9283 on every manager node and exposes the collected metrics to Prometheus over HTTP. See https://docs.ceph.com/en/mimic/mgr/prometheus/?highlight=prometheus

Enable the prometheus module:

cephadmin@ceph-deploy:~/ceph-cluster$ ceph mgr module enable prometheus

Add a listener to HAProxy:

root@ceph-client:~# vi /etc/haproxy/haproxy.cfg

listen ceph-prometheus-9283
  bind 10.4.7.111:9283
  mode tcp
  server rgw1 10.4.7.137:9283 check inter 2s fall 3 rise 3
  server rgw2 10.4.7.138:9283 check inter 2s fall 3 rise 3

root@ceph-client:~# systemctl restart haproxy.service

Access the VIP.

root@ceph-mgr2:/apps/prometheus# vi prometheus.yml

  - job_name: "ceph-cluster-data"
    static_configs:
      - targets: ["10.4.7.111:9283"]

root@ceph-mgr2:/apps/prometheus# systemctl restart prometheus.service
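Before wiring up Grafana, it can help to look at what the VIP actually returns. The mgr module serves the Prometheus text format at `/metrics`; the sketch below fetches it through the HAProxy address configured above and parses the simple `name{labels} value` lines. It is deliberately minimal (label values containing `}` are not handled, and a trailing timestamp is ignored), and the sample lines in the test are illustrative.

```python
import re
from typing import List, Tuple
from urllib.request import urlopen

# metric_name{optional labels} value [timestamp]
_SAMPLE_LINE = re.compile(r"^([a-zA-Z_:][a-zA-Z0-9_:]*)(\{[^}]*\})?\s+(\S+)")


def parse_samples(text: str) -> List[Tuple[str, str, float]]:
    """Return (name, labels, value) for every sample line."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and # HELP / # TYPE comments
        match = _SAMPLE_LINE.match(line)
        if match:
            name, labels, value = match.groups()
            samples.append((name, labels or "", float(value)))
    return samples


def fetch_metrics(url: str = "http://10.4.7.111:9283/metrics", timeout: float = 5.0) -> str:
    """Fetch the exposition text through the HAProxy VIP."""
    with urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

Usage: `[s for s in parse_samples(fetch_metrics()) if s[0] == "ceph_health_status"]` shows the cluster health gauge that the Grafana dashboards below also read.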

Import the Grafana dashboard templates:

- Ceph OSD: https://grafana.com/grafana/dashboards/5336-ceph-osd-single/
- Ceph cluster: https://grafana.com/grafana/dashboards/2842-ceph-cluster/

Customizing the CRUSH map to separate hot and cold data across HDD and SSD disks

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd df

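WEIGHT is the relative capacity of a device: if 1 TB corresponds to 1.00, a 500 GB OSD should have a weight of 0.5. PGs are allocated according to this weight, so the CRUSH algorithm places more PGs on OSDs with more disk space and fewer on OSDs with less.

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd crush reweight --help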

osd crush reweight

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd crush reweight osd.3 0.0003
reweighted item id 3 name "osd.3" to 0.0003 in crush map
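The weight arithmetic can be sketched as follows. When Ceph creates an OSD it sets the initial CRUSH weight to the device capacity in TiB (1 TiB gives 1.0, 500 GiB about 0.49), which the "1 TB = 1.00" rule of thumb above approximates. The share function is a simplification: it gives each item's expected fraction of placements within one bucket and ignores host-level selection and replica constraints.

```python
TIB = 2 ** 40


def crush_weight(size_bytes: int) -> float:
    """Capacity expressed in TiB, the convention Ceph uses for initial CRUSH weights."""
    return size_bytes / TIB


def expected_share(weights: dict) -> dict:
    """Fraction of placements each item should get in expectation (weight / total)."""
    total = sum(weights.values())
    return {name: weight / total for name, weight in weights.items()}
```

With the ceph-node1 weights from the CRUSH map in this article, `expected_share({"osd.0": 0.030, "osd.4": 0.010})` gives osd.0 about 75% of that host's placements.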

Make this change during off-peak hours, because it triggers data rebalancing.

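The REWEIGHT parameter is for rebalancing the PGs that CRUSH places pseudo-randomly. The default placement is only balanced in a probabilistic sense, so even OSDs with identical capacity can end up with an uneven PG distribution. Adjusting reweight makes the cluster immediately rebalance PGs across the current disks so that data is spread evenly. In other words, REWEIGHT applies after PGs have already been placed, redistributing them across the cluster.

Modify REWEIGHT and verify:

An OSD's REWEIGHT defaults to 1 and can be set anywhere between 0 and 1; the lower the value, the fewer PGs the OSD receives. Changing the REWEIGHT of any OSD immediately rebalances its PGs against the other OSDs, that is, data is redistributed. This is meant for cases where one OSD holds relatively many PGs and its PG count needs to come down.

cephadmin@ceph-deploy:~/ceph-cluster$ ceph osd reweight 2 0.9
reweighted osd.2 to 0.9 (e666)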
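The `(e666)` at the end of that output appears to be the new reweight in 16.16 fixed point, where 0x10000 stands for 1.0 (the constant is named CEPH_OSD_IN in Ceph's source): 0.9 × 65536 = 58982.4, truncated to 58982 = 0xe666. A small sketch reproducing that conversion. Conceptually, CRUSH then rejects an OSD whose reweight is below 1 in roughly (1 - reweight) of the placements that pick it and retries elsewhere, which is why its PG count drops.

```python
CEPH_OSD_IN = 0x10000  # 1.0 in 16.16 fixed point


def reweight_to_fixed(value: float) -> int:
    """Convert a 0..1 reweight into its fixed-point representation."""
    if not 0.0 <= value <= 1.0:
        raise ValueError("reweight must be between 0 and 1")
    return int(CEPH_OSD_IN * value)  # int() truncates toward zero


def reweight_message(osd_id: int, value: float) -> str:
    """Rebuild the confirmation line printed by `ceph osd reweight`."""
    return f"reweighted osd.{osd_id} to {value:g} ({reweight_to_fixed(value):x})"
```

`reweight_message(2, 0.9)` reproduces the line above, `reweighted osd.2 to 0.9 (e666)`.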

The PG count of osd.2 decreases.

Both commands above adjust the PG distribution across OSDs on the fly to rebalance data.

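The CRUSH map is stored on the monitors, so customizing it works like this:

- Export the map data (binary) from the monitors with a tool.
- Convert the binary file to text.
- Edit the text with vim to define custom CRUSH rules.
- Convert the text file back to binary.
- Import the binary file into the monitors.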
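The cycle above can be scripted so that each step is visible before anything touches the cluster. A minimal sketch (helper names are hypothetical) that builds the commands used in this article and prints them in dry-run mode; the `crushtool --test` line is an optional offline check added here, not part of the original procedure.

```python
import shlex
import subprocess
from typing import Dict, List


def crushmap_cycle(workdir: str = ".", version: int = 1, rule_id: int = 0) -> Dict[str, List[List[str]]]:
    """Commands for one export -> edit -> compile -> test -> import cycle."""
    binary = f"{workdir}/crushmap-v{version}"
    text = f"{binary}.txt"
    compiled = f"{workdir}/crushmap-v{version + 1}"
    return {
        "export": [
            ["ceph", "osd", "getcrushmap", "-o", binary],  # binary map from the mons
            ["crushtool", "-d", binary, "-o", text],        # decompile to text
        ],
        # ... edit `text` by hand between the two phases ...
        "import": [
            ["crushtool", "-c", text, "-o", compiled],      # compile back to binary
            # Optional offline dry run of one rule before touching the cluster.
            ["crushtool", "-i", compiled, "--test", "--rule", str(rule_id),
             "--num-rep", "3", "--show-mappings"],
            ["ceph", "osd", "setcrushmap", "-i", compiled],  # takes effect immediately
        ],
    }


def run(commands: List[List[str]], dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print("$", shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
```

Usage: `run(crushmap_cycle("data")["export"])` only prints the commands; pass `dry_run=False` to execute them on the ceph-deploy host.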

Export the CRUSH map:

Note: the exported CRUSH map is binary and cannot be opened directly in a text editor; convert it to text with crushtool first, and then it can be opened and edited in vim or another text editor.

cephadmin@ceph-deploy:~$ mkdir data

Export the data as a binary file:

cephadmin@ceph-deploy:~/data$ ceph osd getcrushmap -o ./crushmap-v1
cephadmin@ceph-deploy:~/data$ file crushmap-v1
crushmap-v1: GLS_BINARY_LSB_FIRST

Convert the map to text:

The exported map cannot be edited directly; it has to be converted to text before it can be viewed and edited. Install crushtool first:

cephadmin@ceph-deploy:~/data$ sudo apt install ceph-base
cephadmin@ceph-deploy:~/data$ crushtool -d crushmap-v1
# begin crush map
tunable choose_local_tries 0
tunable choose_local_fallback_tries 0
tunable choose_total_tries 50
tunable chooseleaf_descend_once 1
tunable chooseleaf_vary_r 1
tunable chooseleaf_stable 1
tunable straw_calc_version 1
tunable allowed_bucket_algs 54

# devices
device 0 osd.0 class hdd
device 1 osd.1 class hdd
device 2 osd.2 class hdd
device 3 osd.3 class hdd

# types
type 0 osd
type 1 host
type 2 chassis
type 3 rack
type 4 row
type 5 pdu
type 6 pod
type 7 room
type 8 datacenter
type 9 zone
type 10 region
type 11 root

# end crush map

Write the decompiled map to a text file:

cephadmin@ceph-deploy:~/data$ crushtool -d crushmap-v1 -o crushmap-v1.txt

Wipe the SSD disks:

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy disk list ceph-node1
cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy disk list ceph-node2
cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy disk list ceph-node3
cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy disk list ceph-node4
cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy disk zap ceph-node1 /dev/nvme0n1
cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy disk zap ceph-node2 /dev/nvme0n1
cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy disk zap ceph-node3 /dev/nvme0n1
cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy disk zap ceph-node4 /dev/nvme0n1

Add the SSD OSDs:

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy --overwrite-conf osd create ceph-node1 --data /dev/nvme0n1

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy --overwrite-conf osd create ceph-node2 --data /dev/nvme0n1

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy --overwrite-conf osd create ceph-node3 --data /dev/nvme0n1

cephadmin@ceph-deploy:~/ceph-cluster$ ceph-deploy --overwrite-conf osd create ceph-node4 --data /dev/nvme0n1

Create a pool:

cephadmin@ceph-deploy:~/data$ ceph osd pool create testpool 32 32

cephadmin@ceph-deploy:~/data$ ceph pg ls-by-pool testpool | awk '{print $1,$2,$15}'

Edit the configuration:

cephadmin@ceph-deploy:~/data$ vi crushmap-v1.txt

Convert the text back to CRUSH (binary) format:

cephadmin@ceph-deploy:~/data$ crushtool -c crushmap-v1.txt -o crushmap-v2

Check the compiled binary by decompiling it:

cephadmin@ceph-deploy:~/data$ crushtool -d crushmap-v2

Import the new CRUSH map. The imported map immediately replaces the existing one and takes effect at once:

cephadmin@ceph-deploy:~/data$ ceph osd setcrushmap -i ./crushmap-v2

Verify that the CRUSH map is in effect:

cephadmin@ceph-deploy:~/data$ ceph osd crush rule dump

Export the CRUSH map again:

cephadmin@ceph-deploy:~/data$ ceph osd getcrushmap -o ./crushmap-v3

Convert it to a text file:

cephadmin@ceph-deploy:~/data$ crushtool -d crushmap-v3 > crushmap-v3.txt

The edited map with separate HDD and SSD hosts, roots, and rules; the comments mark the items that still have to be removed so that each class-specific host holds only one device class:

cephadmin@ceph-deploy:~/data$ cat crushmap-v1.txt
# begin crush map
tunable choose_local_tries 0
tunable choose_local_fallback_tries 0
tunable choose_total_tries 50
tunable chooseleaf_descend_once 1
tunable chooseleaf_vary_r 1
tunable chooseleaf_stable 1
tunable straw_calc_version 1
tunable allowed_bucket_algs 54

# devices
device 0 osd.0 class hdd
device 1 osd.1 class hdd
device 2 osd.2 class hdd
device 3 osd.3 class hdd
device 4 osd.4 class hdd
device 5 osd.5 class hdd
device 6 osd.6 class hdd
device 7 osd.7 class hdd
device 8 osd.8 class ssd
device 9 osd.9 class ssd
device 10 osd.10 class ssd
device 11 osd.11 class ssd

# types
type 0 osd
type 1 host
type 2 chassis
type 3 rack
type 4 row
type 5 pdu
type 6 pod
type 7 room
type 8 datacenter
type 9 zone
type 10 region
type 11 root

# buckets
host ceph-node1 {
    id -3 # do not change unnecessarily
    id -4 class hdd # do not change unnecessarily
    id -11 class ssd # do not change unnecessarily
    # weight 0.045
    alg straw2
    hash 0 # rjenkins1
    item osd.0 weight 0.030
    item osd.4 weight 0.010
    item osd.8 weight 0.005
}
host ceph-node2 {
    id -5 # do not change unnecessarily
    id -6 class hdd # do not change unnecessarily
    id -12 class ssd # do not change unnecessarily
    # weight 0.054
    alg straw2
    hash 0 # rjenkins1
    item osd.1 weight 0.030
    item osd.5 weight 0.019
    item osd.9 weight 0.005
}
host ceph-node3 {
    id -7 # do not change unnecessarily
    id -8 class hdd # do not change unnecessarily
    id -13 class ssd # do not change unnecessarily
    # weight 0.045
    alg straw2
    hash 0 # rjenkins1
    item osd.2 weight 0.030
    item osd.6 weight 0.010
    item osd.10 weight 0.005
}
host ceph-node4 {
    id -9 # do not change unnecessarily
    id -10 class hdd # do not change unnecessarily
    id -14 class ssd # do not change unnecessarily
    # weight 0.045
    alg straw2
    hash 0 # rjenkins1
    item osd.3 weight 0.030
    item osd.7 weight 0.010
    item osd.11 weight 0.005
}
root default {
    id -1 # do not change unnecessarily
    id -2 class hdd # do not change unnecessarily
    id -15 class ssd # do not change unnecessarily
    # weight 0.189
    alg straw2
    hash 0 # rjenkins1
    item ceph-node1 weight 0.045
    item ceph-node2 weight 0.054
    item ceph-node3 weight 0.045
    item ceph-node4 weight 0.045
}

#my ceph node
host ceph-hddnode1 {
    id -103 # do not change unnecessarily
    id -104 class hdd # do not change unnecessarily
    id -110 class ssd # do not change unnecessarily
    # weight 0.045
    alg straw2
    hash 0 # rjenkins1
    item osd.0 weight 0.030
    item osd.4 weight 0.010
    item osd.8 weight 0.005 # remove this (SSD)
}
host ceph-hddnode2 {
    id -105 # do not change unnecessarily
    id -106 class hdd # do not change unnecessarily
    id -120 class ssd # do not change unnecessarily
    # weight 0.054
    alg straw2
    hash 0 # rjenkins1
    item osd.1 weight 0.030
    item osd.5 weight 0.019
    item osd.9 weight 0.005 # remove this (SSD)
}
host ceph-hddnode3 {
    id -107 # do not change unnecessarily
    id -108 class hdd # do not change unnecessarily
    id -130 class ssd # do not change unnecessarily
    # weight 0.045
    alg straw2
    hash 0 # rjenkins1
    item osd.2 weight 0.030
    item osd.6 weight 0.010
    item osd.10 weight 0.005 # remove this (SSD)
}
host ceph-hddnode4 {
    id -109 # do not change unnecessarily
    id -110 class hdd # do not change unnecessarily
    id -140 class ssd # do not change unnecessarily
    # weight 0.045
    alg straw2
    hash 0 # rjenkins1
    item osd.3 weight 0.030
    item osd.7 weight 0.010
    item osd.11 weight 0.005 # remove this (SSD)
}

#my ssd node
host ceph-ssdnode1 {
    id -203 # do not change unnecessarily
    id -204 class hdd # do not change unnecessarily
    id -205 class ssd # do not change unnecessarily
    # weight 0.045
    alg straw2
    hash 0 # rjenkins1
    item osd.0 weight 0.030 # remove this (HDD)
    item osd.4 weight 0.010 # remove this (HDD)
    item osd.8 weight 0.005
}
host ceph-ssdnode2 {
    id -206 # do not change unnecessarily
    id -207 class hdd # do not change unnecessarily
    id -208 class ssd # do not change unnecessarily
    # weight 0.054
    alg straw2
    hash 0 # rjenkins1
    item osd.1 weight 0.030 # remove this (HDD)
    item osd.5 weight 0.019 # remove this (HDD)
    item osd.9 weight 0.005
}
host ceph-ssdnode3 {
    id -209 # do not change unnecessarily
    id -210 class hdd # do not change unnecessarily
    id -211 class ssd # do not change unnecessarily
    # weight 0.045
    alg straw2
    hash 0 # rjenkins1
    item osd.2 weight 0.030 # remove this (HDD)
    item osd.6 weight 0.010 # remove this (HDD)
    item osd.10 weight 0.005
}
host ceph-ssdnode4 {
    id -212 # do not change unnecessarily
    id -213 class hdd # do not change unnecessarily
    id -214 class ssd # do not change unnecessarily
    # weight 0.045
    alg straw2
    hash 0 # rjenkins1
    item osd.3 weight 0.030 # remove this (HDD)
    item osd.7 weight 0.010 # remove this (HDD)
    item osd.11 weight 0.005
}

#my hdd bucket
root hdd {
    id -215 # do not change unnecessarily
    id -216 class hdd # do not change unnecessarily
    id -217 class ssd # do not change unnecessarily
    # weight 0.189
    alg straw2
    hash 0 # rjenkins1
    item ceph-hddnode1 weight 0.045
    item ceph-hddnode2 weight 0.054
    item ceph-hddnode3 weight 0.045
    item ceph-hddnode4 weight 0.045
}

#my ssd bucket
root ssd {
    id -218 # do not change unnecessarily
    id -219 class hdd # do not change unnecessarily
    id -220 class ssd # do not change unnecessarily
    # weight 0.189
    alg straw2
    hash 0 # rjenkins1
    item ceph-ssdnode1 weight 0.045
    item ceph-ssdnode2 weight 0.054
    item ceph-ssdnode3 weight 0.045
    item ceph-ssdnode4 weight 0.045
}

#my hdd rules
rule my_hdd_rule {
    id 88
    type replicated
    min_size 1
    max_size 12
    step take hdd
    step chooseleaf firstn 0 type host
    step emit
}

# rules
rule my_ssd_rule {
    id 89
    type replicated
    min_size 1
    max_size 12
    step take ssd
    step chooseleaf firstn 0 type host
    step emit
}

# rules
rule replicated_rule {
    id 0
    type replicated
    min_size 1
    max_size 12
    step take default
    step chooseleaf firstn 0 type host
    step emit
}
rule erasure-code {
    id 1
    type erasure
    min_size 3
    max_size 4
    step set_chooseleaf_tries 5
    step set_choose_tries 100
    step take default
    step chooseleaf indep 0 type host
    step emit
}

# end crush map
cephadmin@ceph-deploy:~/data$ crushtool -c crushmap-v1.txt -o crushmap-v2
cephadmin@ceph-deploy:~/data$ crushtool -d crushmap-v2

Import the rules:

cephadmin@ceph-deploy:~/data$ ceph osd setcrushmap -i crushmap-v2
81

Note that ID -110 is used twice in this listing (the ssd shadow ID of ceph-hddnode1 and the hdd shadow ID of ceph-hddnode4). Bucket IDs are meant to be unique, so give one of them a different value before compiling.
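Editing the class-specific hosts by hand is exactly where leftover items like the ones marked above creep in. A sketch with hypothetical helpers (stdlib only) that reads the device classes from the decompiled map and rebuilds a host bucket containing only one class, recomputing its weight. It omits the per-class shadow `id ... class ...` lines; the assumption is that crushtool fills those in on compile, so check the result with `crushtool -d` afterwards. The root buckets' item weights still need updating separately.

```python
import re
from typing import Dict, List, Tuple

DEVICE_LINE = re.compile(r"^device\s+\d+\s+(osd\.\d+)\s+class\s+(\S+)", re.MULTILINE)
ITEM_LINE = re.compile(r"^\s*item\s+(osd\.\d+)\s+weight\s+([0-9.]+)", re.MULTILINE)


def device_classes(crush_text: str) -> Dict[str, str]:
    """Map "osd.N" to its device class, read from the "# devices" section."""
    return dict(DEVICE_LINE.findall(crush_text))


def class_only_host(name: str, bucket_id: int, host_body: str,
                    classes: Dict[str, str], wanted: str) -> str:
    """Rebuild a host bucket keeping only OSDs whose class is `wanted`."""
    items: List[Tuple[str, float]] = [
        (osd, float(weight))
        for osd, weight in ITEM_LINE.findall(host_body)
        if classes.get(osd) == wanted
    ]
    total = sum(weight for _, weight in items)
    lines = [f"host {name} {{", f"\tid {bucket_id}", f"\t# weight {total:.3f}",
             "\talg straw2", "\thash 0\t# rjenkins1"]
    lines += [f"\titem {osd} weight {weight:.3f}" for osd, weight in items]
    lines.append("}")
    return "\n".join(lines)
```

For ceph-node1, keeping class hdd yields a bucket with osd.0 and osd.4 and a weight of 0.040; keeping class ssd yields only osd.8.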

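View the rules:

cephadmin@ceph-deploy:~/data$ ceph osd crush dump

Create a pool that uses the HDD rule:

cephadmin@ceph-deploy:~/data$ ceph osd pool create my-hddpool 32 32 my_hdd_rule

Verify the placement: OSDs 8, 9, 10, and 11 (the SSDs) no longer appear, because the hdd rule only selects spinning disks.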

cephadmin@ceph-deploy:~/data$ ceph pg ls-by-pool my-hddpool | awk '{print $1,$2,$15}'
PG OBJECTS ACTING
18.0 0 [0,3,1]p0
18.1 0 [0,1,7]p0
18.2 0 [0,7,5]p0
18.3 0 [0,7,5]p0
18.4 0 [0,1,3]p0
18.5 0 [1,0,3]p1
18.6 0 [1,2,3]p1
18.7 0 [2,5,3]p2
18.8 0 [3,2,0]p3
18.9 0 [1,2,0]p1
18.a 0 [0,6,3]p0
18.b 0 [0,1,3]p0
18.c 0 [2,0,3]p2
18.d 0 [1,2,3]p1
18.e 0 [5,4,3]p5
18.f 0 [1,3,0]p1
18.10 0 [1,4,6]p1
18.11 0 [2,3,4]p2
18.12 0 [1,3,4]p1
18.13 0 [1,0,2]p1
18.14 0 [7,5,2]p7
18.15 0 [0,5,3]p0
18.16 0 [6,4,5]p6
18.17 0 [3,2,5]p3
18.18 0 [2,1,7]p2
18.19 0 [1,2,4]p1
18.1a 0 [3,4,1]p3
18.1b 0 [2,1,3]p2
18.1c 0 [4,3,2]p4
18.1d 0 [5,3,2]p5
18.1e 0 [5,3,0]p5
18.1f 0 [0,3,1]p0

* NOTE: afterwards
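Checking 32 acting sets by eye works, but a small parser makes the check repeatable. This sketch reads the three columns printed by the awk command above (the row format is taken from this output; JSON output via `-f json` would likely be sturdier) and collects every OSD that appears in an acting set.

```python
import re
from typing import Set

# "18.1e 0 [5,3,0]p5" -> pg id, object count, acting set, primary
ROW = re.compile(r"^(\S+)\s+(\d+)\s+\[([\d,]*)\]p(-?\d+)$")


def acting_osds(pg_ls_columns: str) -> Set[int]:
    """Collect every OSD id that appears in an acting set."""
    used: Set[int] = set()
    for line in pg_ls_columns.splitlines():
        match = ROW.match(line.strip())
        if match and match.group(3):
            used.update(int(osd) for osd in match.group(3).split(","))
    return used
```

Usage: `acting_osds(output).isdisjoint({8, 9, 10, 11})` returns True when no SSD OSD is used by the HDD pool; header and NOTE lines are skipped because they do not match the row pattern.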
