最新要闻

- 联想小新16 2023轻薄本官宣: 普及2.5K高清大屏、1TB硬盘

- 你会答?深圳一电子厂入职考数理化、《庄子》和英语等 网友吐槽难:厂商回应

- 开眼!一宝马车高速行驶未松手刹 四个车轮全磨红了

- 众泰“亡者归来”推出首款电动车 江南U2正式开售:5.88万起

- 环球实时:广东最狂野民俗盐拖灶神刷爆网络 场面激烈:堪称我国最热血民俗

- 微资讯!深圳夜空出现三个不明发光飞行物:飞速掠过

- 今日快讯:连续开车8小时!男子长期久坐后被诊断截瘫 医生提醒

- 天天快资讯丨丰田顶级名车!世纪SUV最新效果图曝光:有“大汉兰达”那味了

- 世界热资讯!众泰汽车破产清算 一保时捷Macan将被拍卖!网友:当年皮尺部首车?

- 当前信息:注意!长期空气污染增加患抑郁症风险:甚至会致死

- 世界热点! 新型合成皮肤面世:有望解开蚊子传播致命疾病之谜

- 环球关注:20万燃油车能比?百万级轿跑底盘助力:哪吒S麋鹿测试80km/h稳过

- 【新要闻】看看你的工作会被取代吗?ChatGPT时代生存攻略:未来“高枕无忧”的10种工作

- 快看:原美团创始人王慧文进军人工智能:称将打造中国的OpenAI

- 一加Ace 2首销战报出炉:37分钟打破近一年所有安卓机首销全天记录!

- 德国最新电商周销量:AMD完胜Intel

广告

手机

iphone11大小尺寸是多少?苹果iPhone11和iPhone13的区别是什么?

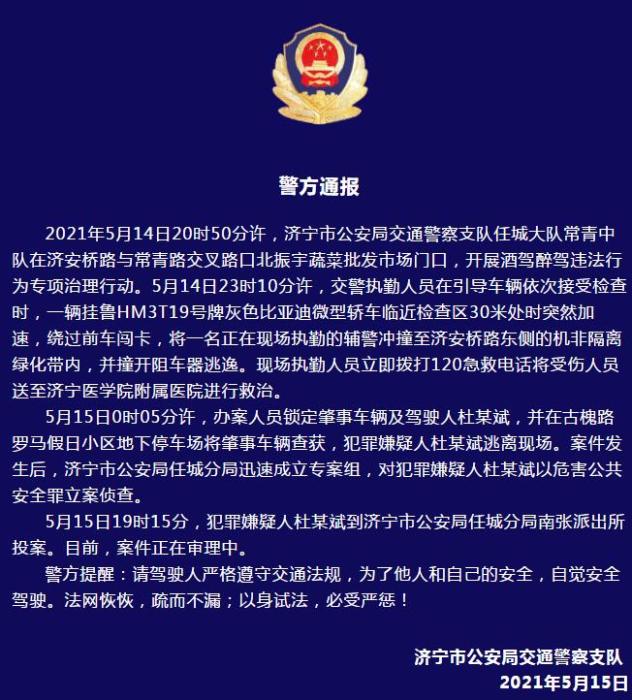

警方通报辅警执法直播中被撞飞:犯罪嫌疑人已投案

- iphone11大小尺寸是多少?苹果iPhone11和iPhone13的区别是什么?

- 警方通报辅警执法直播中被撞飞:犯罪嫌疑人已投案

- 男子被关545天申国赔:获赔18万多 驳回精神抚慰金

- 3天内26名本土感染者,辽宁确诊人数已超安徽

- 广西柳州一男子因纠纷杀害三人后自首

- 洱海坠机4名机组人员被批准为烈士 数千干部群众悼念

家电

Classifying the MNIST dataset with a CNN, a BPNN, and an LSTM

1. CNN
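
The layer sizes used in the CNN follow from the usual output-size formula for convolution and pooling layers, (h - k + 2p) / s + 1. As a quick sanity check, the sketch below traces a 28x28 MNIST image through two conv/pool stages in plain Python. The helper name `out_size` is made up for this illustration and is not part of PyTorch.

```python
def out_size(h: int, k: int, p: int = 0, s: int = 1) -> int:
    """Spatial output size of a conv or pooling layer: floor((h - k + 2p) / s) + 1."""
    return (h - k + 2 * p) // s + 1


size = 28                              # MNIST images are 28x28
size = out_size(size, k=3, p=1, s=1)   # conv1: 3x3 kernel, padding 1, size stays 28
size = out_size(size, k=2, s=2)        # 2x2 max pool, stride 2, size becomes 14
size = out_size(size, k=3, p=1, s=1)   # conv2: 3x3 kernel, padding 1, size stays 14
size = out_size(size, k=2, s=2)        # 2x2 max pool, stride 2, size becomes 7

flat_features = 64 * size * size       # 64 channels after conv2
print(size, flat_features)             # 7 3136
```

The final value, 64 * 7 * 7 = 3136, is the input width of the fully connected layer in the model.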

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    from torchvision import datasets, transforms

    # Fix the random seed for reproducibility
    torch.manual_seed(0)

    # Hyperparameters
    EPOCH = 1               # number of passes over the full training set
    BATCH_SIZE = 100        # number of samples read per batch
    DOWNLOAD_MNIST = False  # set to True on the first run; keep False once the data is in ./mnist

    # Load the MNIST dataset
    train_dataset = datasets.MNIST(
        root="./mnist",
        train=True,  # True selects the training set
        transform=transforms.ToTensor(),
        download=DOWNLOAD_MNIST)
    test_dataset = datasets.MNIST(
        root="./mnist",
        train=False,  # False selects the test set
        transform=transforms.ToTensor(),
        download=DOWNLOAD_MNIST)

    # Wrap the datasets in DataLoaders
    train_loader = torch.utils.data.DataLoader(
        dataset=train_dataset,
        batch_size=BATCH_SIZE,
        shuffle=True)  # shuffle so that batches are drawn in random order
    test_loader = torch.utils.data.DataLoader(
        dataset=test_dataset, batch_size=BATCH_SIZE, shuffle=False)

    # To save time, evaluate on the first 2000 test images only.
    # Shape goes from (2000, 28, 28) to (2000, 1, 28, 28); values are scaled to [0, 1].
    test_x = torch.unsqueeze(test_dataset.data, dim=1).type(torch.FloatTensor)[:2000] / 255.
    test_y = test_dataset.targets[:2000]


    # Define the convolutional neural network (shapes below are channels, height, width)
    class CNN(nn.Module):
        def __init__(self):
            super(CNN, self).__init__()
            self.conv1 = nn.Conv2d(  # input: (1, 28, 28)
                in_channels=1,       # number of input feature maps
                out_channels=32,     # number of output feature maps
                kernel_size=3,       # features are extracted from a 3x3 window
                stride=1,            # the window slides one pixel at a time
                padding=1)           # padding=(kernel_size-1)/2 keeps the spatial size unchanged
            # Output of conv1: (32, 28, 28), because (h-k+2p)/s+1 = (28-3+2*1)/1+1 = 28
            self.pool = nn.MaxPool2d(kernel_size=2, stride=2)  # halves height and width, which can also help against overfitting
            # After pooling: (32, 14, 14)
            self.conv2 = nn.Conv2d(  # input: (32, 14, 14)
                in_channels=32,      # the output channels of conv1 are the input channels here
                out_channels=64,
                kernel_size=3,
                stride=1,
                padding=1)           # output: (64, 14, 14), then (64, 7, 7) after pooling
            self.fc = nn.Linear(64 * 7 * 7, 10)  # 10 outputs, one per digit class

        # Forward pass
        def forward(self, x):
            x = self.pool(F.relu(self.conv1(x)))  # conv1, ReLU, pooling
            x = self.pool(F.relu(self.conv2(x)))  # conv2, ReLU, pooling
            x = x.view(-1, 64 * 7 * 7)            # flatten for the fully connected layer
            x = self.fc(x)
            return x


    # Instantiate the model
    model = CNN()

    # Loss function and optimizer; lr (the learning rate) controls the size of each parameter update
    criterion = nn.CrossEntropyLoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=0.01)

    # Train the model
    for epoch in range(EPOCH):
        for i, (images, labels) in enumerate(train_loader):
            outputs = model(images)            # forward pass through the CNN
            loss = criterion(outputs, labels)  # arguments are (prediction, target); the order matters
            optimizer.zero_grad()              # clear the gradients from the previous step
            loss.backward()                    # backpropagation: compute the gradients
            optimizer.step()                   # apply the gradients

            if i % 50 == 0:
                with torch.no_grad():          # evaluation needs no gradients
                    test_output = model(test_x)
                pred_y = torch.max(test_output, 1)[1].numpy()
                accuracy = float((pred_y == test_y.numpy()).astype(int).sum()) / float(test_y.size(0))
                print("Epoch: ", epoch, "| train loss: %.4f" % loss.item(), "| test accuracy: %.4f" % accuracy)

    # Print 10 predictions from the test data
    with torch.no_grad():
        test_output = model(test_x[:10])
    pred_y = torch.max(test_output, 1)[1].numpy()
    print(pred_y, "prediction number")
    print(test_y[:10].numpy(), "real number")

2. BPNN
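
The BPNN in this section ends its forward pass with a log-softmax. The following is a minimal plain-Python sketch of what that operation computes and of the negative log-likelihood a loss function derives from it. The function names and the three example scores are made up for illustration; in PyTorch, `nn.CrossEntropyLoss` combines log-softmax and negative log-likelihood in one step, while `nn.NLLLoss` expects log-probabilities as input.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax of one row of scores."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(v - m) for v in logits))
    return [v - log_sum for v in logits]


def nll(log_probs, target):
    """Negative log-likelihood of the true class."""
    return -log_probs[target]


logits = [2.0, 1.0, 0.1]           # made-up scores for a 3-class example
log_probs = log_softmax(logits)
probs = [math.exp(v) for v in log_probs]

print(probs)                       # every value lies in (0, 1)
print(sum(probs))                  # sums to 1 up to rounding
print(nll(log_probs, target=0))    # cross-entropy when the true class is 0

# Applying log-softmax to log-probabilities leaves them unchanged (up to rounding).
again = log_softmax(log_probs)
print(max(abs(a - b) for a, b in zip(again, log_probs)))
```

The last check shows why feeding log-softmax output into a loss that applies log-softmax again gives the same value: the second normalization has nothing left to subtract.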

    import torch
    import torch.nn as nn
    import torch.nn.functional as F
    import torchvision

    DOWNLOAD_MNIST = False  # set to True on the first run; keep False once the data is in ./mnist/
    BATCH_SIZE = 50
    LR = 0.01  # learning rate

    # Load the MNIST handwritten-digit dataset
    train_dataset = torchvision.datasets.MNIST(
        root="./mnist/",  # where the data is stored or read from (inside the current folder)
        train=True,       # True selects the training data, False the test data
        transform=torchvision.transforms.ToTensor(),  # converts a PIL.Image or numpy.ndarray to a tensor
        download=DOWNLOAD_MNIST,  # no download needed once the data is present
    )
    test_dataset = torchvision.datasets.MNIST(
        root="./mnist/",
        train=False,  # test set
    )
    train_loader = torch.utils.data.DataLoader(dataset=train_dataset, batch_size=BATCH_SIZE, shuffle=True)

    # To save time, evaluate on the first 2000 test images only.
    # Shape goes from (2000, 28, 28) to (2000, 1, 28, 28); values are scaled to [0, 1].
    test_x = torch.unsqueeze(test_dataset.data, dim=1).type(torch.FloatTensor)[:2000] / 255.
    test_y = test_dataset.targets[:2000]


    # Define the model
    class BPNN(nn.Module):
        def __init__(self):
            super(BPNN, self).__init__()
            self.fc1 = nn.Linear(28 * 28, 512)  # fully connected layer: 28 * 28 inputs, 512 outputs
            self.fc2 = nn.Linear(512, 512)
            self.fc3 = nn.Linear(512, 10)

        def forward(self, x):  # x is a batch of input images
            x = x.view(-1, 28 * 28)  # flatten to two dimensions: (batch_size, 28 * 28)
            x = F.relu(self.fc1(x))  # first fully connected layer followed by ReLU
            x = F.relu(self.fc2(x))
            x = self.fc3(x)
            # Softmax is a common output activation for classifiers: it maps the scores
            # to (0, 1) so that they sum to 1. log_softmax returns the log of those values.
            return F.log_softmax(x, dim=1)  # dim=1 normalizes across each row


    # Instantiate the model
    bpnn = BPNN()
    print(bpnn)

    # Optimizer and loss function
    optimizer = torch.optim.Adam(bpnn.parameters(), lr=LR)  # optimize all parameters
    # The model already returns log-probabilities, so NLLLoss is the matching loss
    # (nn.CrossEntropyLoss would apply log-softmax a second time).
    # Targets are class indices, not one-hot vectors.
    loss_func = nn.NLLLoss()
    # Alternative optimizer:
    # optimizer = torch.optim.SGD(bpnn.parameters(), lr=0.01, momentum=0.5)

    # Train the model
    for epoch in range(1):
        for step, (b_x, b_y) in enumerate(train_loader):
            output = bpnn(b_x)  # forward() flattens each image to 28 * 28 values
            loss = loss_func(output, b_y)
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

            if step % 50 == 0:
                with torch.no_grad():  # evaluation needs no gradients
                    test_output = bpnn(test_x)
                pred_y = torch.max(test_output, 1)[1]
                acc = (pred_y == test_y).sum().float() / test_y.size(0)
                print("Epoch: ", epoch, "| train loss: %.4f" % loss.item(), "| test acc: %.4f" % acc.item())

    # Print 10 predictions from the test data
    with torch.no_grad():
        test_output = bpnn(test_x[:10])
    pred_y = torch.max(test_output, 1)[1].numpy()
    print(pred_y, "prediction number")
    print(test_y[:10].numpy(), "real number")

    # Optional: evaluate on the full test set. This needs a DataLoader over a test set
    # created with transform=ToTensor(); `full_test_loader` is not defined above.
    # bpnn.eval()
    # correct = 0
    # with torch.no_grad():
    #     for data, target in full_test_loader:
    #         output = bpnn(data)
    #         pred = output.argmax(dim=1, keepdim=True)
    #         correct += pred.eq(target.view_as(pred)).sum().item()
    # print("Test accuracy:", correct / len(full_test_loader.dataset))

3. LSTM
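
The LSTM in this section reads each image as a sequence of 28 rows with 28 pixels per row, uses 64 hidden units, and feeds the last output into a linear layer with 10 outputs. As a rough sketch of how small that model is, the plain-Python helpers below count its trainable parameters, assuming the layout documented for `torch.nn.LSTM` (four gates, each with an input-to-hidden matrix, a hidden-to-hidden matrix, and two bias vectors). The helper names are hypothetical and exist only for this illustration.

```python
def lstm_param_count(input_size: int, hidden_size: int) -> int:
    """Parameters of one unidirectional LSTM layer with both bias vectors.

    Each of the four gates (input, forget, cell, output) has an
    input-to-hidden matrix, a hidden-to-hidden matrix, and two biases.
    """
    per_gate = input_size * hidden_size + hidden_size * hidden_size + 2 * hidden_size
    return 4 * per_gate


def linear_param_count(in_features: int, out_features: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return in_features * out_features + out_features


lstm_params = lstm_param_count(input_size=28, hidden_size=64)
head_params = linear_param_count(64, 10)
print(lstm_params, head_params, lstm_params + head_params)  # 24064 650 24714
```

The count does not depend on the number of time steps, because the same weights are reused at every step of the sequence.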

    import torch
    from torch import nn
    import torchvision.datasets as dsets
    import torchvision.transforms as transforms

    torch.manual_seed(1)  # reproducible

    # Hyperparameters
    EPOCH = 1               # number of passes over the training set; one pass only, to save time
    BATCH_SIZE = 64
    TIME_STEP = 28          # number of RNN time steps, i.e. the image height
    INPUT_SIZE = 28         # input size per time step, i.e. the pixels in one image row
    LR = 0.01               # learning rate
    DOWNLOAD_MNIST = False  # set to True on the first run; keep False once the data is in ./mnist/

    # MNIST handwritten digits
    train_data = dsets.MNIST(
        root="./mnist/",  # where the data is stored or read from
        train=True,       # this is training data
        transform=transforms.ToTensor(),  # converts a PIL.Image or numpy.ndarray to a
                                          # torch.FloatTensor (C x H x W) scaled to [0.0, 1.0]
        download=DOWNLOAD_MNIST,  # download only if the data is not there yet
    )
    test_data = dsets.MNIST(root="./mnist/", train=False)

    # Mini-batches of 64 samples, 1 channel, 28x28 pixels: (64, 1, 28, 28)
    train_loader = torch.utils.data.DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

    # To save time, evaluate on the first 2000 test images only.
    # Shape (2000, 28, 28) is already (batch, time_step, input_size); values are scaled to [0, 1].
    test_x = test_data.data.type(torch.FloatTensor)[:2000] / 255.
    test_y = test_data.targets[:2000]


    # By default nn.LSTM expects input shaped (seq_len, batch, feature)
    class Lstm(nn.Module):
        def __init__(self):
            super(Lstm, self).__init__()
            self.lstm = nn.LSTM(         # an LSTM usually works much better here than a plain nn.RNN()
                input_size=INPUT_SIZE,   # number of input features per step: the pixels in one image row
                hidden_size=64,          # number of hidden units, comparable to the hidden-layer neurons of a BP network
                num_layers=1,            # number of stacked LSTM layers
                batch_first=True,        # input and output put the batch dimension first,
                                         # e.g. (batch, time_step, input_size)
            )
            self.out = nn.Linear(64, 10)  # output layer: a linear layer on top of the LSTM

        def forward(self, x):
            # x shape     (batch, time_step, input_size)
            # r_out shape (batch, time_step, hidden_size): the output at every time step
            # h_n shape   (n_layers, batch, hidden_size): hidden state after the last time step
            # h_c shape   (n_layers, batch, hidden_size): cell state after the last time step
            # An LSTM carries two states: h_n is the hidden state and h_c is the cell state.
            r_out, (h_n, h_c) = self.lstm(x, None)  # None means the initial states are all zeros
            # (h_n, h_c) are the final states once the sequence has been processed; they are not used here.
            # Take the output at the last time step. With a single unidirectional layer,
            # r_out[:, -1, :] has the same values as h_n[-1].
            out = self.out(r_out[:, -1, :])
            return out


    lstm = Lstm()
    print(lstm)

    optimizer = torch.optim.Adam(lstm.parameters(), lr=LR)  # optimize all parameters
    loss_func = nn.CrossEntropyLoss()  # targets are class indices, not one-hot vectors

    # Training and testing
    for epoch in range(EPOCH):
        for step, (x, b_y) in enumerate(train_loader):  # gives batch data
            b_x = x.view(-1, TIME_STEP, INPUT_SIZE)  # reshape x to (batch, time_step, input_size)
            output = lstm(b_x)                       # LSTM output
            loss = loss_func(output, b_y)            # cross-entropy loss
            optimizer.zero_grad()                    # clear gradients for this training step
            loss.backward()                          # backpropagation, compute gradients
            optimizer.step()                         # apply gradients

            if step % 50 == 0:
                with torch.no_grad():                # evaluation needs no gradients
                    test_output = lstm(test_x)
                pred_y = torch.max(test_output, 1)[1]
                acc = (pred_y == test_y).sum().float() / test_y.size(0)
                print("Epoch: ", epoch, "| train loss: %.4f" % loss.item(), "| test acc: %.4f" % acc.item())

    # Print 10 predictions from the test data
    with torch.no_grad():
        test_output = lstm(test_x[:10])
    pred_y = torch.max(test_output, 1)[1].numpy()
    print(pred_y, "prediction number")
    print(test_y[:10].numpy(), "real number")
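
All three scripts measure test accuracy the same way: take the index of the largest score in each output row, as `torch.max(test_output, 1)[1]` does, and compare it with the label. A plain-Python sketch of that computation follows; the scores and labels are made up purely for illustration.

```python
def argmax(row):
    """Index of the largest score in one row (the first one on ties)."""
    return max(range(len(row)), key=row.__getitem__)


def accuracy(scores, labels):
    """Fraction of rows whose highest-scoring class equals the label."""
    predictions = [argmax(row) for row in scores]
    correct = sum(int(p == y) for p, y in zip(predictions, labels))
    return correct / len(labels)


# Made-up scores for 4 samples and 3 classes.
scores = [
    [0.1, 2.3, -1.0],   # predicts class 1
    [1.5, 0.2, 0.3],    # predicts class 0
    [0.0, 0.1, 0.9],    # predicts class 2
    [0.4, 0.3, 0.2],    # predicts class 0
]
labels = [1, 0, 1, 0]

print(accuracy(scores, labels))  # 0.75
```

Because only the position of the largest score matters, the result is the same whether the model outputs raw scores, probabilities, or log-probabilities.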
